Your Code is Fine. Your Architecture Isn’t.

Why great code isn’t enough: discover the architectural patterns that make or break software projects.

Code is easy. Sort of. You learn a language, practice algorithms, wrestle with frameworks. You build things. They work. Mostly. But then things get… complicated. Features pile up. Teams grow. The market shifts. Suddenly, that elegant codebase feels like wading through treacle. Deployments become terrifying rituals. Adding a simple feature requires touching ten different files, each change rippling outwards with unpredictable consequences. Sound familiar? This isn't just about bad code. This is about architecture. Or the lack thereof.

Architecture is the skeleton. It's the city plan. It’s the unseen structure that dictates how your software lives, breathes, and evolves. Get it wrong, and your beautiful code becomes a prison, trapping you in technical debt and slowing you to a crawl. Get it right, and you unlock agility, scalability, and resilience. It’s the difference between a shack built on sand and a skyscraper with deep foundations. At 1985, we've seen both sides of this coin countless times, helping businesses navigate the often-murky waters of system design. It’s not about dogma; it’s about making informed choices that align with your specific context – your team, your product, your future.

Many teams skip this crucial step, diving headfirst into coding and assuming architecture will "emerge." Sometimes it does, though what emerges often resembles something dredged from the bottom of a swamp. An upfront investment in architectural thinking isn't an academic exercise; it's a pragmatic necessity for building anything non-trivial that needs to last. It dictates how components interact, how data flows, how failures are handled, and ultimately, how easy (or painful) it will be to adapt to future needs. Ignoring it is like building a house without blueprints – you might get walls and a roof, but good luck adding a second story later.

The Ghost in the Machine: When Architecture Goes Wrong (and Right)

The consequences of poor architectural choices aren't always immediately obvious. They manifest slowly, insidiously. Technical debt accumulates like compound interest, eventually crippling development velocity. A system tightly coupled like strands of overcooked spaghetti makes changes risky and time-consuming. A design that can't scale horizontally means throwing increasingly expensive hardware at performance problems, a strategy with rapidly diminishing returns. Maintenance becomes a nightmare, requiring heroic efforts from developers who understand the system's arcane secrets – until they leave, taking that knowledge with them. According to Stripe's Developer Coefficient report, developers spend over 17 hours per week on maintenance tasks such as debugging and refactoring, and much of that can be attributed to technical debt stemming from architectural issues. That's nearly half of their working week spent on something other than building new value.

Conversely, well-architected systems exhibit distinct advantages. Maintainability improves because components are logically separated and have clear responsibilities, making it easier to understand, modify, and debug specific parts without affecting the whole. Scalability becomes achievable by design, allowing different parts of the system to be scaled independently based on load. Testability is enhanced because individual components or layers can be tested in isolation. Perhaps most importantly, team velocity increases. Clear boundaries and contracts allow teams to work more autonomously, reducing coordination overhead and merge conflicts. As stated in the book Accelerate by Nicole Forsgren, Jez Humble, and Gene Kim, loosely coupled architectures are a key predictor of high-performing technology organizations, enabling faster delivery and greater stability.

Think about the cost. It's not just developer hours. It's lost market opportunities because you couldn't ship features fast enough. It's customer churn due to poor performance or reliability. It's the inability to pivot your business model because your software is too rigid. Good architecture isn't a cost center; it's a strategic enabler. It provides the flexibility to adapt, the robustness to withstand failures, and the foundation for sustainable growth. It’s about building systems that empower the business, not constrain it.

Blueprints for Digital Structures: Common Architectural Patterns

Software architects don't start from scratch every time. They draw upon established patterns – proven solutions to recurring problems. These aren't rigid prescriptions but rather conceptual frameworks that offer different trade-offs. Understanding these patterns is crucial for making informed decisions. Let's skip the absolute basics and dive into the nuances of some prevalent ones.

The Monolith: Not Always a Monster

The monolith gets a bad rap these days. It’s often portrayed as an outdated relic. But let's be honest: it's the default starting point for many applications, and sometimes, it's perfectly adequate, even advantageous. A monolithic architecture packages all the application's functionality into a single deployment unit. Think of a single executable or web archive file containing the user interface, business logic, and data access layers.

The initial appeal is simplicity. Early development is often faster because components call each other in-process: there is no network latency to design around, transactions are typically straightforward (often handled by the database), and debugging can involve tracing execution within a single process. Deployment is conceptually simple: build the artifact, deploy the artifact. For small teams working on relatively simple domains or building Minimum Viable Products (MVPs), this can be highly efficient. You avoid the "distributed computing tax" that comes with more complex patterns.

However, the cracks appear as the system grows. Tight coupling becomes a major issue. A change in one module can inadvertently break another. Scaling becomes an all-or-nothing proposition – if one part of the application needs more resources, you have to scale the entire monolith, which is often inefficient. Deployments become risky and infrequent; a small change requires redeploying the entire application, increasing the blast radius of potential failures. Technology adoption gets tricky; upgrading a library or framework requires coordinating changes across the entire codebase, potentially locking you into older technology stacks. A well-structured "modular monolith" can mitigate some of these issues by enforcing strong internal boundaries, though it remains a single deployment unit.
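To make the idea of internal boundaries in a modular monolith more concrete, here is a minimal sketch. It assumes two hypothetical modules, `InventoryModule` and `OrderModule`, and a hypothetical `InventoryApi` contract; none of these names come from a real framework. The point is only that the order code depends on a small public interface, not on the inventory module's internal data.

```python
from dataclasses import dataclass, field
from typing import Protocol


class InventoryApi(Protocol):
    """Public contract of the (hypothetical) inventory module."""

    def reserve(self, sku: str, quantity: int) -> bool: ...


@dataclass
class InventoryModule:
    """Owns stock data; other modules are expected not to touch _stock."""

    _stock: dict[str, int] = field(default_factory=dict)

    def add_stock(self, sku: str, quantity: int) -> None:
        self._stock[sku] = self._stock.get(sku, 0) + quantity

    def reserve(self, sku: str, quantity: int) -> bool:
        if self._stock.get(sku, 0) < quantity:
            return False
        self._stock[sku] -= quantity
        return True


@dataclass
class OrderModule:
    """Depends only on the InventoryApi contract, not on its internals."""

    inventory: InventoryApi
    orders: list[tuple[str, int]] = field(default_factory=list)

    def place_order(self, sku: str, quantity: int) -> bool:
        if not self.inventory.reserve(sku, quantity):
            return False
        self.orders.append((sku, quantity))
        return True


# Both modules live in one process and ship as one deployment unit.
inventory = InventoryModule()
inventory.add_stock("widget", 5)
orders = OrderModule(inventory=inventory)
```

Because `OrderModule` only sees `reserve`, the inventory module could later be moved behind a network call without changing the order code, which is one way a modular monolith keeps options open.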

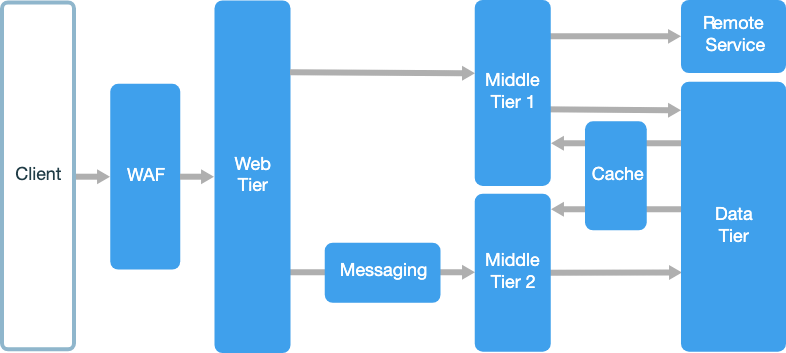

Microservices: Small Pieces, Loosely Joined

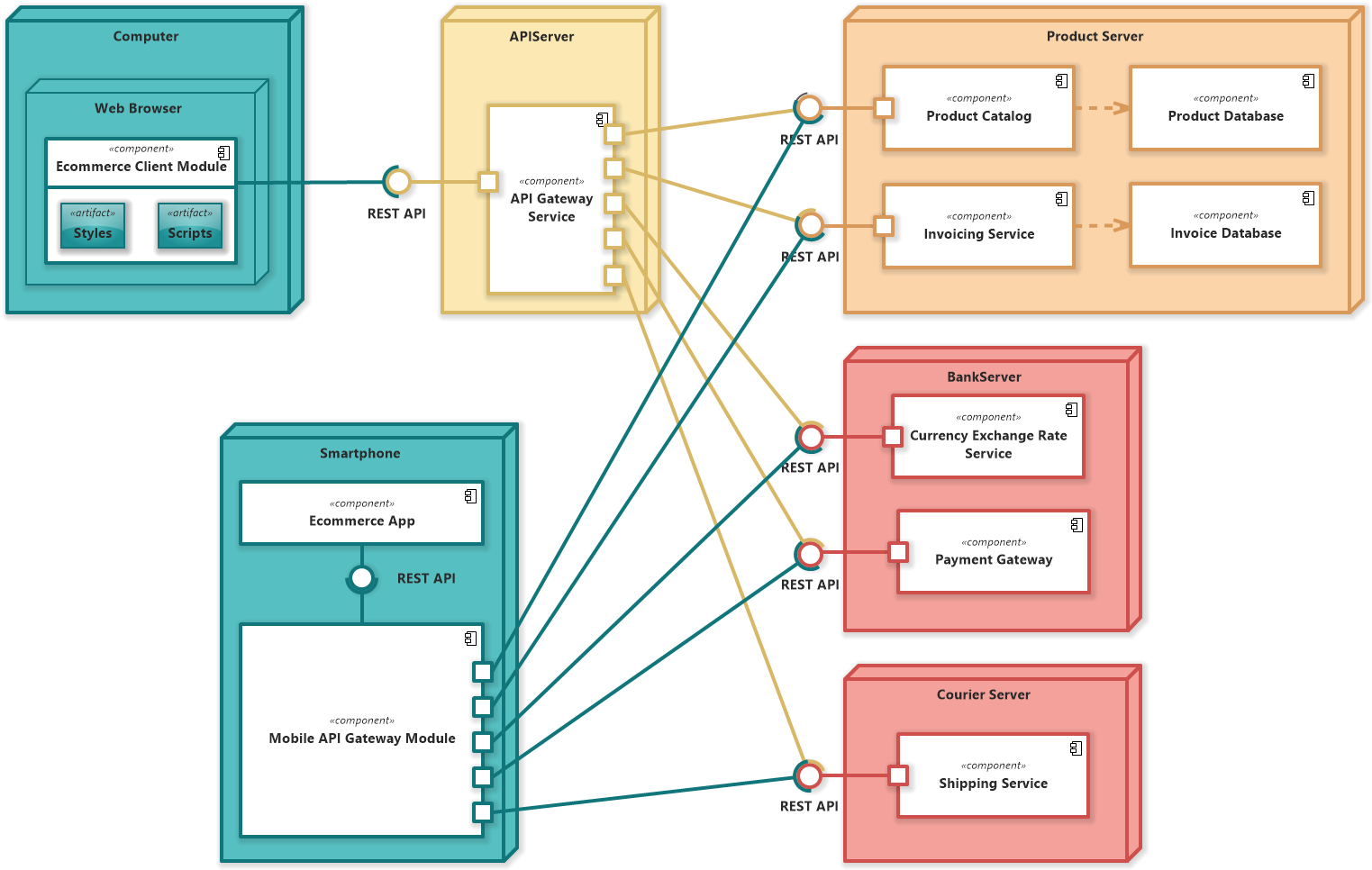

Microservices emerged as a direct response to the challenges of large monoliths. The core idea is to decompose an application into a collection of small, independent, and loosely coupled services. Each service focuses on a specific business capability, runs in its own process, and communicates with other services typically over a network (often using lightweight protocols like HTTP/REST or asynchronous messaging).

The benefits are compelling, particularly for large, complex systems. Independent Scalability is a huge win; you can scale individual services based on their specific needs. Technology Diversity allows teams to choose the best tools (languages, databases, frameworks) for their particular service, fostering innovation. Team Autonomy is enhanced; small, focused teams can own their services end-to-end, leading to faster development cycles (aligning with Conway's Law, which states that organizations design systems mirroring their communication structure). Resilience improves; the failure of one service doesn't necessarily bring down the entire application, assuming proper fault tolerance mechanisms (like circuit breakers) are in place.
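The circuit breaker mentioned above can be sketched in a few lines. This is a simplified illustration, not a production implementation: the class name, the `max_failures` and `reset_after` parameters, and the injectable `clock` are all assumptions made for the example. Real systems would typically rely on an established resilience library or a service mesh.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when calls are rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                # Fail fast instead of waiting on an unhealthy dependency.
                raise CircuitOpenError("downstream service marked unhealthy")
            # Cool-down elapsed: allow one trial call (half-open state).
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self._failures = 0
        return result
```

A caller would wrap each remote call, for example `breaker.call(lambda: fetch_profile(user_id))` where `fetch_profile` is a hypothetical client function, and fall back to a cached or default response when `CircuitOpenError` is raised.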

But microservices introduce their own significant complexities. You're now dealing with a distributed system, which is inherently harder to manage. Network Latency and Reliability become concerns. Distributed Transactions require complex patterns like Sagas to maintain data consistency across services. Operational Overhead increases dramatically; you need robust infrastructure for deployment, monitoring, logging, service discovery, and configuration management across potentially hundreds of services. Defining the right Service Boundaries is critical and challenging; getting them wrong can lead to tightly coupled "distributed monoliths" or overly chatty services. Martin Fowler talks about the "Microservice Premium" – you need to be sophisticated enough to handle the complexity to reap the benefits. It's not a free lunch.
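As a rough illustration of the Saga idea, the sketch below runs a sequence of steps and, if one fails, runs the compensating actions of the steps that already completed, in reverse order. It is an in-process, orchestration-style toy: the step names and the simulated payment failure are hypothetical, and a real saga would also need durable state and retries.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SagaStep:
    name: str
    action: Callable[[], None]
    compensate: Callable[[], None]


def run_saga(steps: list[SagaStep]) -> bool:
    """Run steps in order; on failure, undo completed steps in reverse."""
    completed: list[SagaStep] = []
    for step in steps:
        try:
            step.action()
        except Exception:
            for done in reversed(completed):
                done.compensate()
            return False
        completed.append(step)
    return True


log: list[str] = []


def charge_payment() -> None:
    # Simulated failure of a (hypothetical) payment service.
    raise RuntimeError("card declined")


order_saga = [
    SagaStep("reserve_stock",
             action=lambda: log.append("stock reserved"),
             compensate=lambda: log.append("stock released")),
    SagaStep("charge_payment",
             action=charge_payment,
             compensate=lambda: log.append("payment refunded")),
]

succeeded = run_saga(order_saga)
```

Here the payment step fails, so only the stock reservation is compensated. The failed step itself is never compensated because it never completed.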

Event-Driven Architecture (EDA): Reacting to the Flow

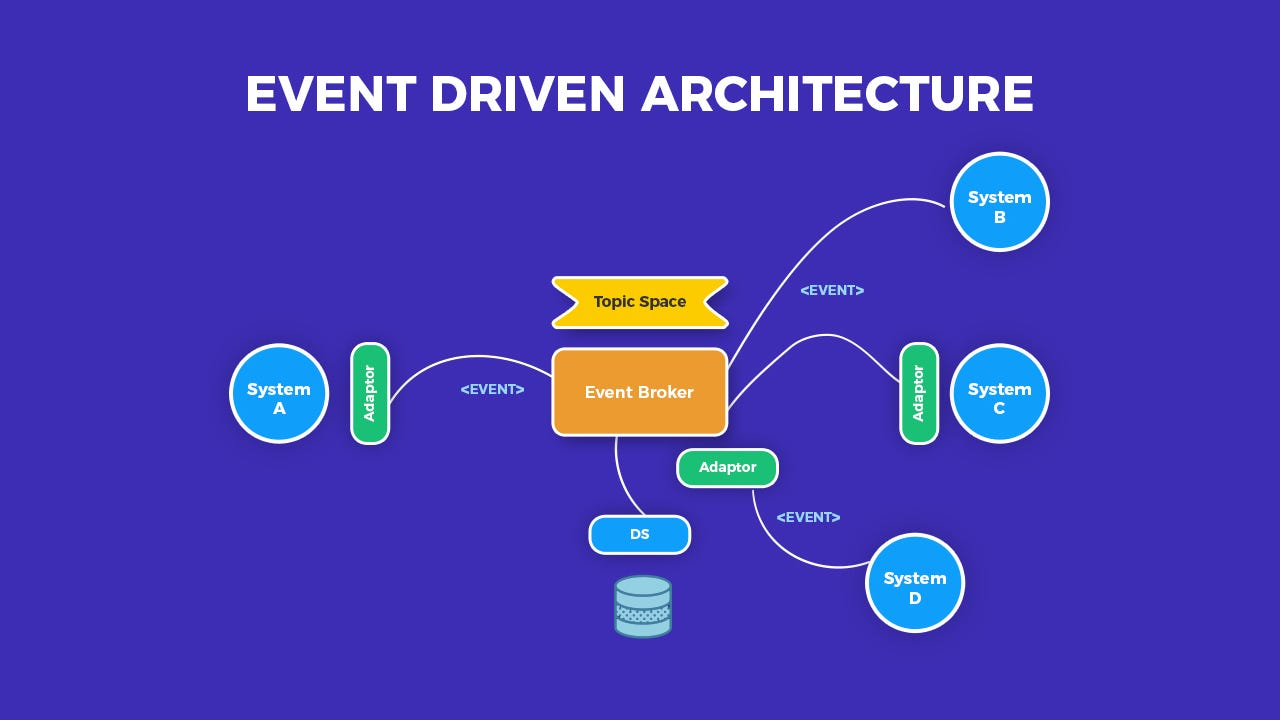

Event-Driven Architecture is less about the static structure of components and more about how they interact dynamically. Instead of services directly calling each other (request-response), they communicate asynchronously by producing and consuming events. An event represents a significant occurrence in the system (e.g., "OrderPlaced," "InventoryUpdated," "PaymentProcessed"). Services publish events to an event broker (like Kafka, RabbitMQ, or cloud-specific services), and other interested services subscribe to those events and react accordingly.

This pattern promotes Loose Coupling to an extreme degree. Producers don't need to know who the consumers are, or even if there are any. This makes the system highly extensible; new services can easily subscribe to existing event streams without modifying the producers. Scalability and Resilience are often enhanced. Event brokers are typically designed for high throughput and fault tolerance. If a consumer service fails, events can often queue up, waiting to be processed when the service recovers (depending on the broker configuration). EDA naturally supports Real-time Responsiveness as services react immediately when relevant events occur.
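The decoupling between producers and consumers can be shown with a tiny in-memory event bus. This is only a stand-in for a real broker: it is synchronous, in-process, and not durable, and the `EventBus` class and handler names are invented for the example.

```python
from typing import Any, Callable

Event = dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """In-process stand-in for a broker such as Kafka or RabbitMQ."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = {}

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers.setdefault(event_type, []).append(handler)

    def publish(self, event_type: str, payload: Event) -> None:
        # The producer never learns who (if anyone) is listening.
        for handler in list(self._subscribers.get(event_type, [])):
            handler(payload)


bus = EventBus()
confirmations: list[str] = []
stock = {"widget": 5}


def send_confirmation(event: Event) -> None:
    confirmations.append(event["order_id"])


def decrement_inventory(event: Event) -> None:
    stock[event["sku"]] -= event["quantity"]


bus.subscribe("OrderPlaced", send_confirmation)
bus.subscribe("OrderPlaced", decrement_inventory)
bus.publish("OrderPlaced", {"order_id": "o-1", "sku": "widget", "quantity": 2})
```

Adding a third consumer, say for analytics, would be one more `subscribe` call; the code that publishes `OrderPlaced` would not change.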

The challenges lie in the shift in mindset and the complexities of managing asynchronous flows. Debugging and Tracing can be difficult; understanding why a particular outcome occurred might involve tracing a chain of events across multiple services and potentially long time delays. Eventual Consistency becomes the norm; since updates propagate asynchronously via events, different parts of the system might have slightly different views of the data at any given moment, which needs careful handling. Managing Event Schemas and ensuring compatibility as they evolve across different services requires discipline and tooling. Complex business processes might involve intricate choreographies of events, which can be hard to visualize and manage.
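One common way to cope with evolving event schemas is for consumers to "upcast" older versions to the current shape before handling them. The sketch below assumes a hypothetical `OrderPlaced` event with a `schema_version` field, and an invented change between v1 and v2 (a single `amount` field becoming `amount_cents` plus `currency`). The field names and the default currency are illustrative assumptions only.

```python
from typing import Any

Event = dict[str, Any]


def upcast_order_placed(event: Event) -> Event:
    """Convert any known version of a hypothetical OrderPlaced event to v2."""
    version = event.get("schema_version", 1)
    if version == 1:
        # v1 carried a single "amount" in cents; v2 adds an explicit currency.
        return {
            "schema_version": 2,
            "order_id": event["order_id"],
            "amount_cents": event["amount"],
            "currency": "USD",  # assumed default for legacy events
        }
    if version == 2:
        return event
    raise ValueError(f"unsupported OrderPlaced schema_version: {version}")


def handle_order_placed(event: Event) -> str:
    # Business logic only ever sees the current (v2) shape.
    current = upcast_order_placed(event)
    return f"{current['order_id']}: {current['amount_cents']} {current['currency']}"
```

Keeping the version handling in one function means producers can roll out a new schema gradually while older events still in the broker remain readable.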

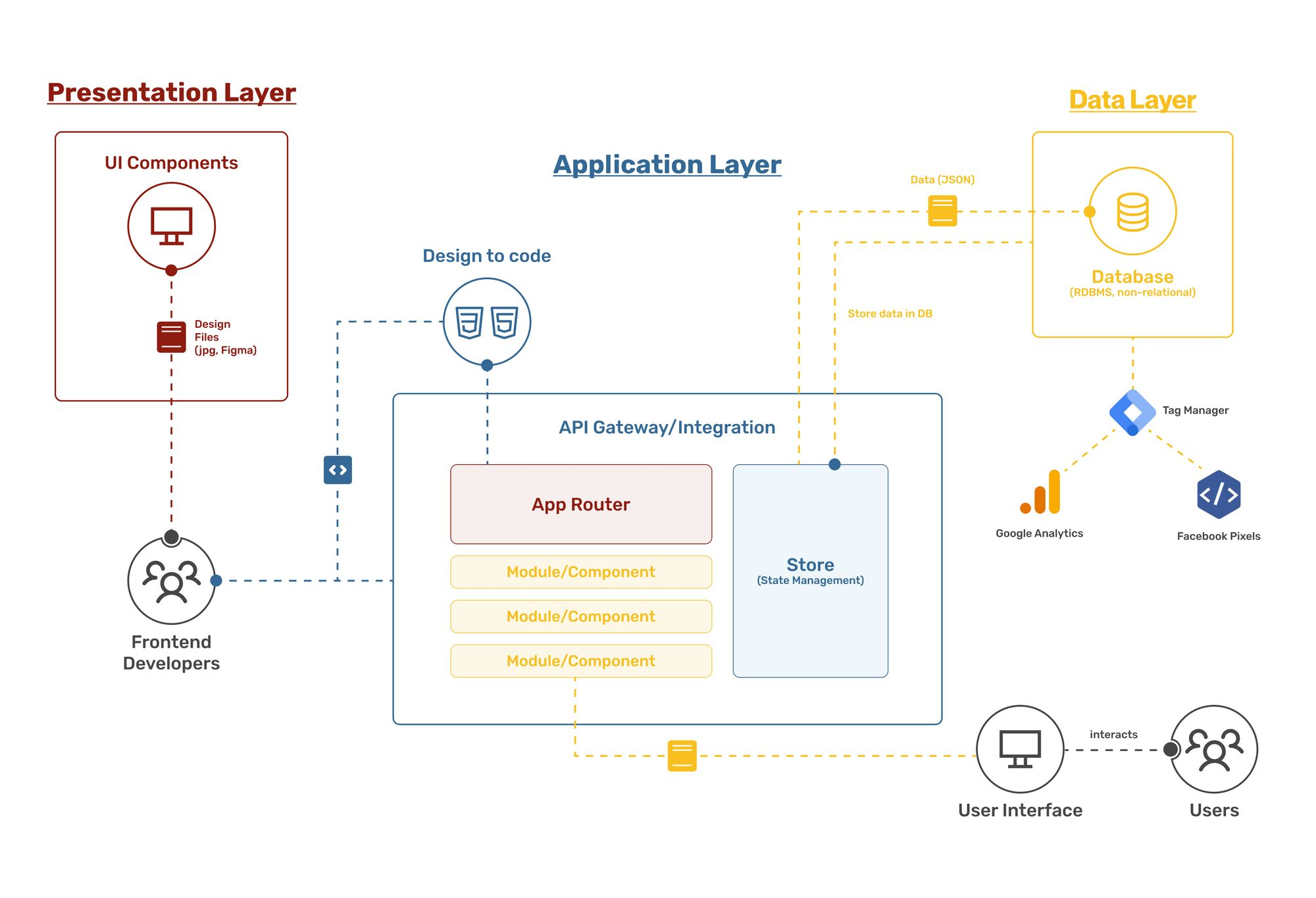

Layered (N-Tier): The Classic Separation

The Layered architecture is one of the oldest and most fundamental patterns, often forming the internal structure within other patterns like monoliths or even individual microservices. It organizes code into horizontal layers, each with a specific responsibility. Common layers include:

- Presentation Layer (UI): Handles user interaction and displays information.

- Business Logic Layer (BLL) / Domain Layer: Contains the core business rules and processes.

- Data Access Layer (DAL) / Persistence Layer: Responsible for communicating with the database or other data stores.

The primary benefit is Separation of Concerns. Each layer focuses on its specific task, making the code easier to understand, maintain, and test. Dependencies typically flow downwards – the presentation layer depends on the business logic layer, which depends on the data access layer. This structure promotes Modularity and allows developers to specialize in specific layers. You can potentially swap out implementations of a layer (e.g., change the database or the UI framework) with less impact on other layers, assuming the interfaces are well-defined.
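The downward flow of dependencies can be sketched with three small pieces, one per layer. All names here (`User`, `UserRepository`, `GreetingService`, `render_greeting`) are hypothetical; the in-memory repository stands in for a real database so the example stays self-contained.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass(frozen=True)
class User:
    user_id: int
    name: str


# --- Data access layer ---
class UserRepository(Protocol):
    def find(self, user_id: int) -> Optional[User]: ...


class InMemoryUserRepository:
    def __init__(self, users: list[User]) -> None:
        self._users = {u.user_id: u for u in users}

    def find(self, user_id: int) -> Optional[User]:
        return self._users.get(user_id)


# --- Business logic layer: depends only on the repository interface ---
class GreetingService:
    def __init__(self, repository: UserRepository) -> None:
        self._repository = repository

    def greeting_for(self, user_id: int) -> str:
        user = self._repository.find(user_id)
        if user is None:
            raise LookupError(f"unknown user {user_id}")
        return f"Welcome back, {user.name}!"


# --- Presentation layer: depends only on the service ---
def render_greeting(service: GreetingService, user_id: int) -> str:
    try:
        return service.greeting_for(user_id)
    except LookupError:
        return "Please sign in."


service = GreetingService(InMemoryUserRepository([User(1, "Ada")]))
```

Swapping `InMemoryUserRepository` for a database-backed class with the same `find` method would leave the business and presentation code untouched, which is the kind of substitution well-defined layer interfaces are meant to allow.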

However, layered architectures aren't without potential pitfalls. Strict adherence can sometimes lead to unnecessary indirection or boilerplate code. A common issue is the creation of "leaky abstractions," where details from a lower layer bleed into a higher layer, compromising the separation. Performance can sometimes be impacted if data has to pass through multiple layers unnecessarily. Over time, without discipline, layers can become blurred, leading back towards a less organized structure. It's a foundational pattern, but relying solely on it for complex, distributed systems is usually insufficient.

Other Notable Patterns

While Monolith, Microservices, EDA, and Layered are very common, other patterns address specific needs:

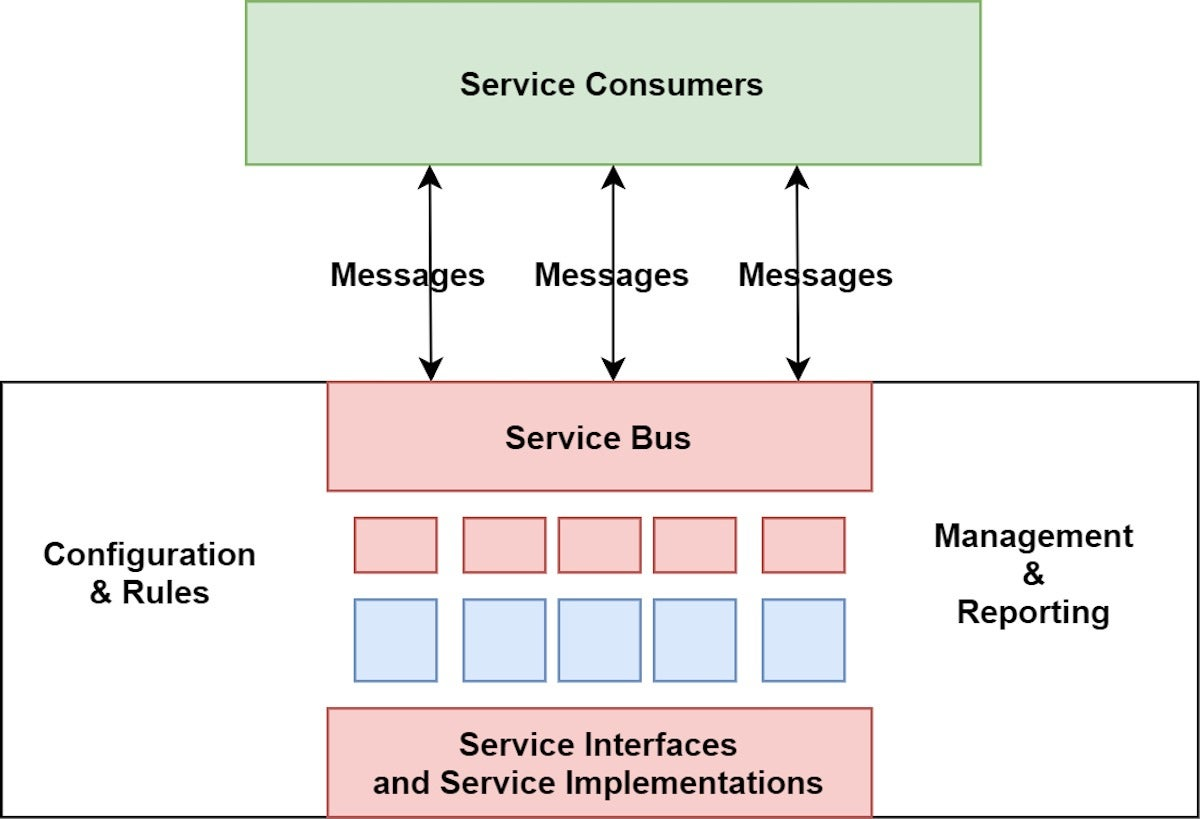

- Service-Oriented Architecture (SOA): Often seen as a precursor to microservices, SOA typically involved larger, enterprise-focused services, often orchestrated via an Enterprise Service Bus (ESB), with stricter contracts (like SOAP/WSDL). While sharing the goal of breaking down monoliths, microservices generally favor lighter-weight communication (REST), decentralized governance, and smaller service scopes compared to traditional SOA.

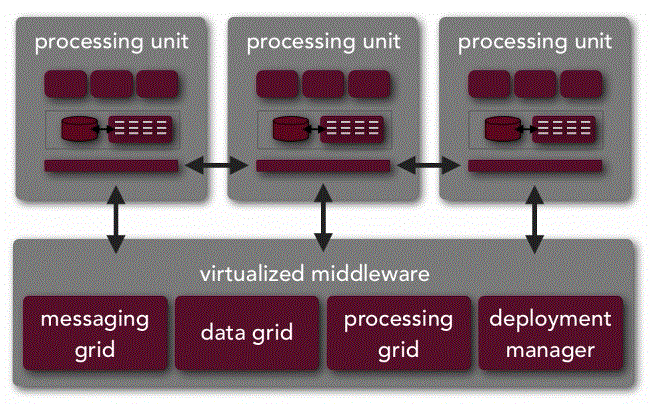

- Space-Based Architecture (SBA): Designed for extreme scalability and elasticity, particularly for applications with unpredictable, high-volume workloads. It avoids a central database bottleneck by partitioning data and processing logic into self-sufficient units (Processing Units) that operate in-memory, coordinated by a virtualized middleware layer. Think high-frequency trading or large-scale e-commerce caching.
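As a very rough sketch of the partitioning idea behind Space-Based Architecture, the example below routes each session key to one of several in-memory processing units. It omits everything that makes real SBA hard (replication, data grids, elastic scaling), and the class names and the shopping-cart scenario are assumptions made for illustration.

```python
import zlib


class ProcessingUnit:
    """Holds its own slice of data in memory and processes requests on it."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.cart_items: dict[str, list[str]] = {}

    def add_item(self, session_id: str, item: str) -> int:
        items = self.cart_items.setdefault(session_id, [])
        items.append(item)
        return len(items)


class PartitionRouter:
    """Stand-in for the middleware that routes each key to one unit."""

    def __init__(self, units: list[ProcessingUnit]) -> None:
        self._units = units

    def unit_for(self, session_id: str) -> ProcessingUnit:
        # crc32 is stable across runs, unlike Python's built-in hash() for str.
        index = zlib.crc32(session_id.encode("utf-8")) % len(self._units)
        return self._units[index]


units = [ProcessingUnit("pu-0"), ProcessingUnit("pu-1"), ProcessingUnit("pu-2")]
router = PartitionRouter(units)
```

Every request for the same session lands on the same unit, so that unit can answer from memory without consulting a central database.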

Choosing Your Path: Context is King

So, which pattern is "best"? That's the wrong question. There is no silver bullet. The optimal choice depends entirely on context. Trying to apply microservices to a simple CRUD application managed by two developers is likely overkill, introducing unnecessary complexity. Conversely, building a complex, multi-faceted platform with dozens of developers using a single monolith will inevitably lead to pain.

Consider these factors:

| Factor | Considerations | Favors Monolith (Initially) | Favors Microservices/EDA |
|---|---|---|---|
| Team Size & Structure | How the team is organized; Conway's Law implications | Small, co-located team | Multiple distributed teams |
| Domain Complexity | Shape and stability of the business logic | Simple, well-understood domain | Complex, evolving business logic with distinct subdomains |
| Scalability Needs | Load profile across functionalities | Uniform load | Highly variable load; need for independent scaling |
| Time-to-Market | Launch urgency vs. long-term pace | Rapid initial launch (MVP) | Long-term sustainable development pace |
| Operational Maturity | DevOps capabilities available | Limited DevOps capabilities | Mature CI/CD, monitoring, logging, infrastructure automation |
| Technology Needs | Range of technologies required | Single tech stack sufficient | Diverse technologies for different problems |
| Fault Tolerance | Acceptable impact of a partial failure | Entire system may go down if one part fails | Graceful degradation and independent resilience needed |
| Data Consistency | Consistency model required | Strong consistency easily achievable | Eventual consistency acceptable or required |

Table: Factors Influencing Architectural Choices

Often, the best approach involves evolution. You might start with a well-structured (modular) monolith to get to market quickly and validate your ideas. As the system grows, the team expands, and specific scaling or organizational needs arise, you can strategically carve out microservices or introduce event-driven communication for parts of the system that will benefit most. This "strangler fig" pattern allows gradual migration, reducing risk compared to a big-bang rewrite.
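The gradual migration described above is often implemented as a routing facade in front of the old system. The sketch below is a simplified, in-process version: `legacy_monolith` and `new_billing_service` are hypothetical handlers, and in practice the facade would usually be an API gateway or reverse proxy, not application code.

```python
from typing import Callable

Handler = Callable[[str], str]


def legacy_monolith(path: str) -> str:
    return f"monolith handled {path}"


def new_billing_service(path: str) -> str:
    return f"billing service handled {path}"


class StranglerRouter:
    """Facade that sends migrated path prefixes to new services."""

    def __init__(self, fallback: Handler) -> None:
        self._fallback = fallback
        self._migrated: dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        self._migrated[prefix] = handler

    def handle(self, path: str) -> str:
        # Longest matching prefix wins; unmatched paths stay on the monolith.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](path)
        return self._fallback(path)


router = StranglerRouter(fallback=legacy_monolith)
router.migrate("/billing", new_billing_service)
```

Each additional `migrate` call moves one more slice of traffic off the monolith, and removing an entry routes that slice back, which keeps every step small and reversible.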

Beyond Patterns: The Architect's Toolkit

Architectural patterns provide the blueprints, but building robust, maintainable systems requires more. Certain principles and practices are essential complements:

- SOLID Principles: While often discussed at the code level, principles like Single Responsibility, Open/Closed, Liskov Substitution, Interface Segregation, and Dependency Inversion apply architecturally too. They guide the design of components and their interactions, promoting modularity and flexibility.

- Domain-Driven Design (DDD): Especially crucial for complex domains and microservices. DDD provides tools (like Bounded Contexts, Ubiquitous Language, Aggregates) to model the business domain accurately and define logical boundaries for services or modules, ensuring they align with business capabilities. Getting boundaries wrong is a primary cause of microservice failures.

- API Design: In any system with interacting components (especially distributed ones), well-defined, stable, and documented APIs are paramount. Whether RESTful HTTP, gRPC, or message schemas, the contract between components needs careful design and versioning.

- Observability: You can't manage what you can't see. In distributed systems, robust logging, metrics, and distributed tracing are non-negotiable. They are essential for understanding system behavior, diagnosing problems, and monitoring performance. Think of the ELK stack (Elasticsearch, Logstash, Kibana), Prometheus, Grafana, Jaeger, or OpenTelemetry.

- Evolutionary Architecture: Recognize that architecture isn't a one-time decision. Systems need to evolve. Concepts like "fitness functions" (automated checks that verify architectural characteristics) help ensure the architecture doesn't degrade over time as changes are made.
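A fitness function can be as simple as an automated check that fails the build when a layering rule is broken. The sketch below uses Python's standard `ast` module to find imports in a module's source and compares them against a hypothetical rule table; the layer names (`presentation`, `business`, `data_access`) and the `ALLOWED_DEPENDENCIES` mapping are assumptions made for illustration.

```python
import ast

# Hypothetical rule: a layer may import only from the layers listed for it.
ALLOWED_DEPENDENCIES = {
    "presentation": {"business"},
    "business": {"data_access"},
    "data_access": set(),
}


def imported_top_level_packages(source: str) -> set[str]:
    """Return the top-level package names imported by a module's source."""
    found: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            found.add(node.module.split(".")[0])
    return found


def layer_violations(layer: str, source: str) -> set[str]:
    """Layers this module imports but is not allowed to depend on."""
    known_layers = set(ALLOWED_DEPENDENCIES)
    imported_layers = imported_top_level_packages(source) & known_layers
    return imported_layers - ALLOWED_DEPENDENCIES[layer] - {layer}
```

Run over every file in a codebase as part of CI, a check like this turns an architectural intention into something that is verified on every change. Third-party and standard-library imports are ignored because they are not in the rule table.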

The 1985 Perspective

At 1985, we've built systems across this entire spectrum. We've seen monoliths succeed brilliantly and microservice implementations crumble under their own weight (and vice-versa). Our approach is rooted in pragmatism. We don't advocate for specific patterns blindly. Instead, we work closely with our clients to understand their unique context: their business goals, technical constraints, team capabilities, and tolerance for operational complexity.

We believe in starting simple where possible, often favoring a well-structured modular monolith initially, unless the requirements clearly demand a distributed approach from day one. We emphasize clean internal boundaries, strong separation of concerns, and robust testing, which keeps options open for future evolution. When migrating towards microservices or EDA, we advocate for a gradual, iterative approach, tackling the areas with the most significant pain points or scaling needs first. Our goal is always to deliver solutions that are not just functional but also sustainable, maintainable, and aligned with the long-term strategic objectives of the business.

Build Deliberately

Software architecture is a deep and fascinating field. It's where technology strategy meets practical implementation. Choosing the right architectural patterns – and understanding their trade-offs – is fundamental to building software that can withstand the tests of time, scale, and changing requirements. It’s not about following the latest trend but about making deliberate, informed decisions based on your specific needs and constraints.

Don't let architecture happen by accident. Think about the structure. Consider the communication flows. Plan for failure. Design for evolution. Whether you're building a simple web app or a complex distributed system, investing time in architectural thinking pays dividends throughout the software's lifecycle. Build deliberately. Build thoughtfully. Build systems that last.