The True Cost of AI Infrastructure

Building AI systems? Learn where the hidden expenses are and how to scale smarter without breaking the bank.

Artificial Intelligence (AI) isn’t just a buzzword anymore. It’s the backbone of competitive advantage in today’s industries, whether you’re in retail, finance, healthcare, or software development. Yet, behind the promise of smart systems and automated workflows lies a reality that many don’t talk about: the hidden costs of AI infrastructure.

Whether you’re running a business or, like me, an outsourced software development company such as 1985, these costs can sneak up on you. They can dent your bottom line or derail your timeline if you’re not prepared. Let’s pull back the curtain and see what it really takes to implement and sustain AI systems.

Hardware Costs: Beyond the Obvious

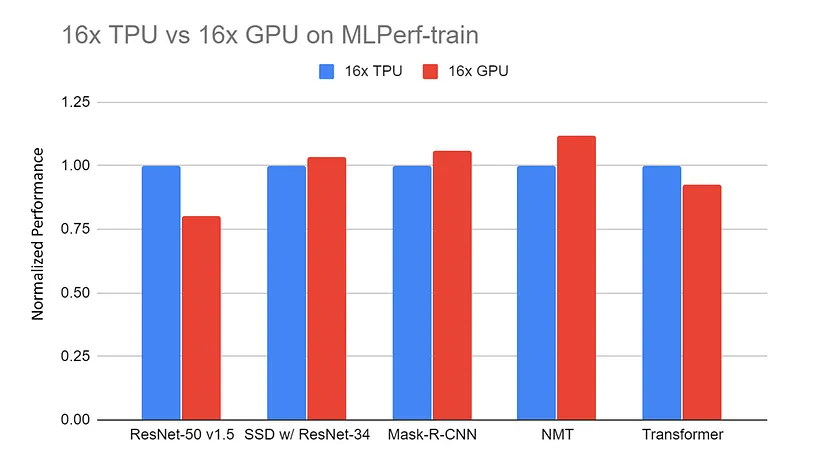

You probably think of GPUs right off the bat. They’re critical for AI workloads like deep learning. But the costs don’t end with buying high-end NVIDIA data-center GPUs such as the A100 or H100.

The GPU Tax

High-performance data-center GPUs can set you back $10,000 to $20,000 per unit, and the newest models often cost more. Think you’ll get by with one or two? Not likely. Training large models, especially large language models (LLMs), means splitting the work across many GPUs running in parallel. You’re looking at clusters, not single units.
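To put numbers on a cluster, here’s a rough back-of-the-envelope sketch. The function name, the optional per-node overhead for CPUs, memory, and networking, and the 4-node, 8-GPU configuration are illustrative assumptions, not vendor quotes; the $15,000 unit price is simply the midpoint of the $10,000–$20,000 range.

```python
def cluster_gpu_cost(nodes: int, gpus_per_node: int, price_per_gpu: float,
                     node_overhead: float = 0.0) -> float:
    """Estimate up-front hardware cost for a GPU cluster.

    node_overhead is a hypothetical per-node cost for everything that
    isn't a GPU (CPUs, RAM, networking, chassis). Defaults to zero.
    """
    gpu_total = nodes * gpus_per_node * price_per_gpu
    return gpu_total + nodes * node_overhead


# Illustrative only: 4 nodes x 8 GPUs at $15,000 per GPU
cost = cluster_gpu_cost(nodes=4, gpus_per_node=8, price_per_gpu=15_000)
print(f"${cost:,.0f}")  # → $480,000
```

Even this modest, hypothetical cluster lands near half a million dollars in GPUs alone.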

Then there’s the electricity cost. According to estimates, training a single large model like GPT-3 can consume as much energy as 120 U.S. homes use in a year. You’re effectively signing up for an ongoing electricity bill that scales with your ambition.
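Electricity cost follows directly from power draw and run time. The sketch below assumes a 700 W draw per GPU, a power usage effectiveness (PUE) multiplier of 1.4 for cooling and facility overhead, and $0.12 per kWh; all three are placeholder figures to swap for your own, and the estimate ignores CPUs, storage, and networking.

```python
def training_energy_cost(num_gpus: int, watts_per_gpu: float, hours: float,
                         price_per_kwh: float, pue: float = 1.0) -> float:
    """Estimate the electricity bill for a training run (GPU power only).

    pue (power usage effectiveness) scales IT power up to cover cooling
    and other facility overhead; 1.0 means no overhead.
    """
    kwh = num_gpus * watts_per_gpu / 1000 * hours * pue
    return kwh * price_per_kwh


# Assumed: 32 GPUs at 700 W, running for 30 days, PUE 1.4, $0.12/kWh
bill = training_energy_cost(32, 700, hours=30 * 24, price_per_kwh=0.12, pue=1.4)
print(f"${bill:,.0f} for one month of training")  # → $2,710 for one month of training
```

Because the formula is linear, doubling the GPU count or the training time doubles the bill.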

Data Storage and Bandwidth

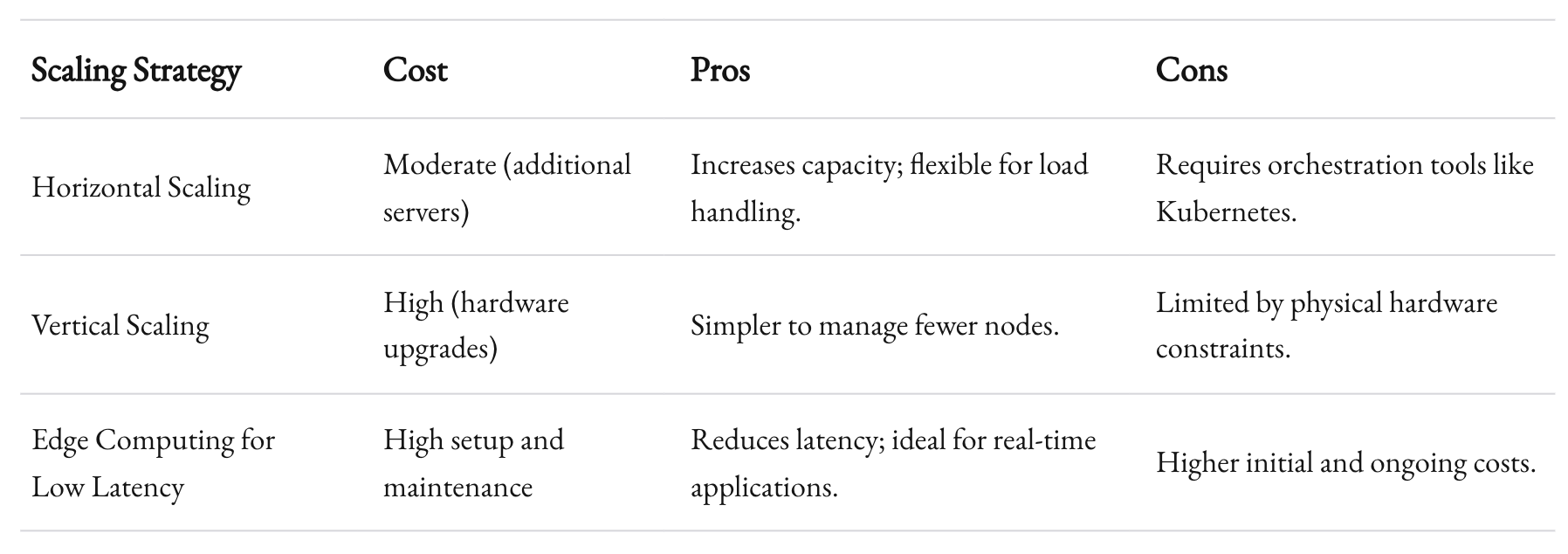

AI demands data, and lots of it. Storage systems for AI workloads need to handle both volume and speed. Solutions like NVMe SSDs are fast but expensive: high-capacity NVMe drives can cost $200–$300 per terabyte. Now multiply that by the terabytes your training data, checkpoints, and intermediate artifacts will occupy.
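A quick capacity estimate makes the multiplication concrete. In this sketch, the number of copies kept for redundancy and the extra headroom for checkpoints and scratch space are hypothetical parameters, and $250 per terabyte sits inside the $200–$300 range quoted for NVMe.

```python
def nvme_storage_cost(dataset_tb: float, price_per_tb: float,
                      copies: int = 1, headroom: float = 0.0) -> float:
    """Up-front cost of NVMe capacity for a dataset.

    copies:   hypothetical number of full replicas kept for redundancy
    headroom: extra fraction reserved for growth, checkpoints, and scratch space
    """
    provisioned_tb = dataset_tb * copies * (1 + headroom)
    return provisioned_tb * price_per_tb


# Assumed: 100 TB dataset, 2 copies, 25% headroom, $250/TB
print(f"${nvme_storage_cost(100, 250, copies=2, headroom=0.25):,.0f}")  # → $62,500
```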

Bandwidth is another hidden expense. Training models in the cloud often means moving massive datasets around. AWS, Google Cloud, and Azure generally don’t charge to bring data in, but they do charge for data leaving their networks or crossing regions (egress), at rates such as $0.09 per GB. Over time, this becomes a serious operational cost.
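Egress adds up the same way. This flat-rate sketch uses the $0.09 per GB figure; real providers price in tiers and may include a free allowance, which the hypothetical `free_gb` parameter only approximates.

```python
def egress_cost(gb_transferred: float, price_per_gb: float = 0.09,
                free_gb: float = 0.0) -> float:
    """Flat-rate estimate of data-egress charges for one billing period."""
    billable_gb = max(0.0, gb_transferred - free_gb)
    return billable_gb * price_per_gb


# Assumed: 20,000 GB (about 20 TB) pulled out of the cloud every month
monthly = egress_cost(20_000)
print(f"${monthly:,.0f}/month, ${monthly * 12:,.0f}/year")  # → $1,800/month, $21,600/year
```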

The Cost of Data: Cleaning and Labeling

AI is only as good as the data it’s trained on. Garbage in, garbage out. But acquiring high-quality, labeled data isn’t as straightforward as downloading a public dataset.

Annotation: The Human Factor

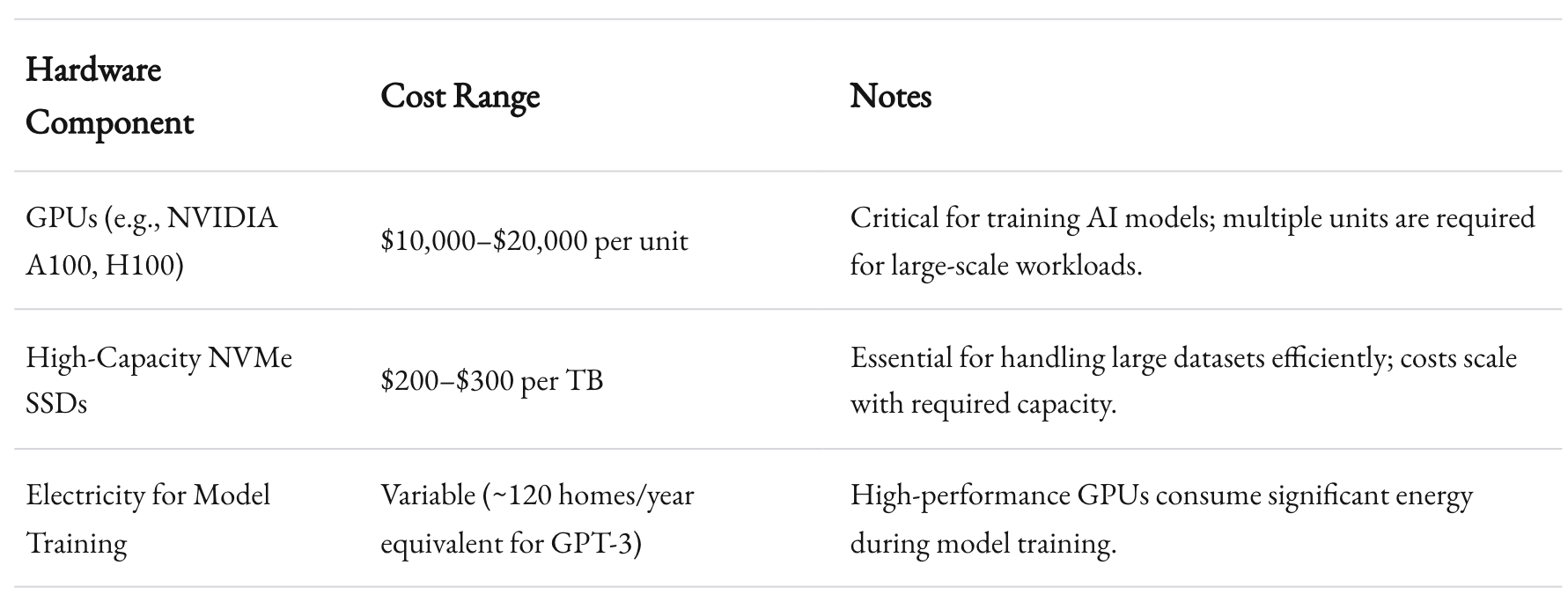

The manual effort involved in annotating data can be a massive drain. Platforms like Labelbox and Scale AI offer labeling services, typically priced per task, with costs ranging from $0.05 to $10 per label depending on complexity.

Let’s say you’re building an image recognition model. Annotating 1 million images at $1 per image? That’s a cool $1 million before you even start training.
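The estimate grows quickly once you account for images that need several labels each, such as multiple bounding boxes, and for quality-review passes. In this sketch, `labels_per_item` and `review_fraction` (the share of labels re-checked at the same price) are assumptions for illustration, not how any particular vendor bills.

```python
def annotation_cost(num_items: int, labels_per_item: float,
                    price_per_label: float, review_fraction: float = 0.0) -> float:
    """Estimate a labeling budget.

    review_fraction: hypothetical share of labels that are re-checked,
    assumed here to cost the same as the original label.
    """
    total_labels = num_items * labels_per_item
    return total_labels * price_per_label * (1 + review_fraction)


# The $1-per-image example: 1 million images, one label each
print(f"${annotation_cost(1_000_000, 1, 1.00):,.0f}")  # → $1,000,000
# Assumed: 5 boxes per image at $0.20 each, 10% of labels reviewed
print(f"${annotation_cost(1_000_000, 5, 0.20, review_fraction=0.10):,.0f}")  # → $1,100,000
```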

Data Cleaning and Preprocessing

Even after labeling, datasets need cleaning. Outliers, duplicates, and errors can degrade model performance. You’ll usually need a data engineer to preprocess the data, and skilled engineers don’t come cheap: in the U.S., expect salaries starting around $100,000 a year.
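What does that cleaning look like? Here’s a minimal sketch using only Python’s standard library: it drops duplicate IDs, rows with missing values, and outliers outside 1.5 times the interquartile range (a common rule of thumb, not the only option). The record layout and field names are hypothetical; a real pipeline would typically use a dataframe library and domain-specific validation rules.

```python
import statistics


def clean_records(records, key, value_field):
    """Drop duplicate keys, rows missing value_field, and IQR outliers."""
    seen = set()
    kept = []
    for rec in records:
        k = rec.get(key)
        if k in seen or rec.get(value_field) is None:
            continue  # duplicate or missing value
        seen.add(k)
        kept.append(rec)

    values = [r[value_field] for r in kept]
    if len(values) < 4:
        return kept  # too few points for a meaningful IQR

    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [r for r in kept if low <= r[value_field] <= high]


# Hypothetical sensor readings: one duplicate, one missing value, one outlier
records = [{"id": i, "reading": v}
           for i, v in enumerate([10, 11, 11, 12, 12, 13, 14, 950])]
records.append({"id": 0, "reading": 10})     # duplicate ID
records.append({"id": 99, "reading": None})  # missing value

cleaned = clean_records(records, key="id", value_field="reading")
print([r["id"] for r in cleaned])  # → [0, 1, 2, 3, 4, 5, 6]
```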

Software and Framework Costs

It’s easy to assume that because frameworks like TensorFlow and PyTorch are free and open source, your AI software costs nothing. But here’s the catch: the software stack surrounding them isn’t free.

MLOps Tools

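MLOps (Machine Learning Operations) is critical for scaling AI. Tools like Weights & Biases, MLflow, and Kubeflow help track experiments, manage models, and automate pipelines. MLflow and Kubeflow are open source, but you still pay for the infrastructure and people to run them; hosted offerings such as Weights & Biases are sold as SaaS, and enterprise plans often start at $1,000–$5,000 per month.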

Licensing and API Costs

If you’re integrating third-party APIs, prepare for sticker shock. Cloud AI services like Amazon Rekognition or Azure Cognitive Services (now Azure AI services) cost only pennies, or fractions of a penny, per call. But at scale, those pennies add up quickly. For instance, transcribing 1 million minutes of audio with Amazon Transcribe costs roughly $15,000 or more at list prices.
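Many API services bill in volume tiers, where the per-unit price drops after certain thresholds. The sketch below computes a bill under that kind of scheme; the tier sizes and per-minute prices are made up for illustration and are not actual AWS rates, so check the provider’s pricing page before budgeting.

```python
from typing import List, Tuple


def tiered_cost(units: float, tiers: List[Tuple[float, float]]) -> float:
    """Bill `units` under volume-tiered pricing.

    tiers: ordered (tier_size, price_per_unit) pairs; use float("inf")
    as the size of the last tier so every unit gets priced.
    """
    total = 0.0
    remaining = units
    for size, price in tiers:
        used = min(remaining, size)
        total += used * price
        remaining -= used
        if remaining <= 0:
            break
    return total


# Hypothetical per-minute tiers, NOT real provider prices
TIERS = [(100_000, 0.020), (900_000, 0.015), (float("inf"), 0.010)]
print(f"${tiered_cost(1_000_000, TIERS):,.0f}")  # → $15,500
```

Tiering softens the per-unit price at volume, but the total still grows with every call.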

Scaling Challenges

Scaling is where costs balloon out of control if not managed properly. Let’s break it down.

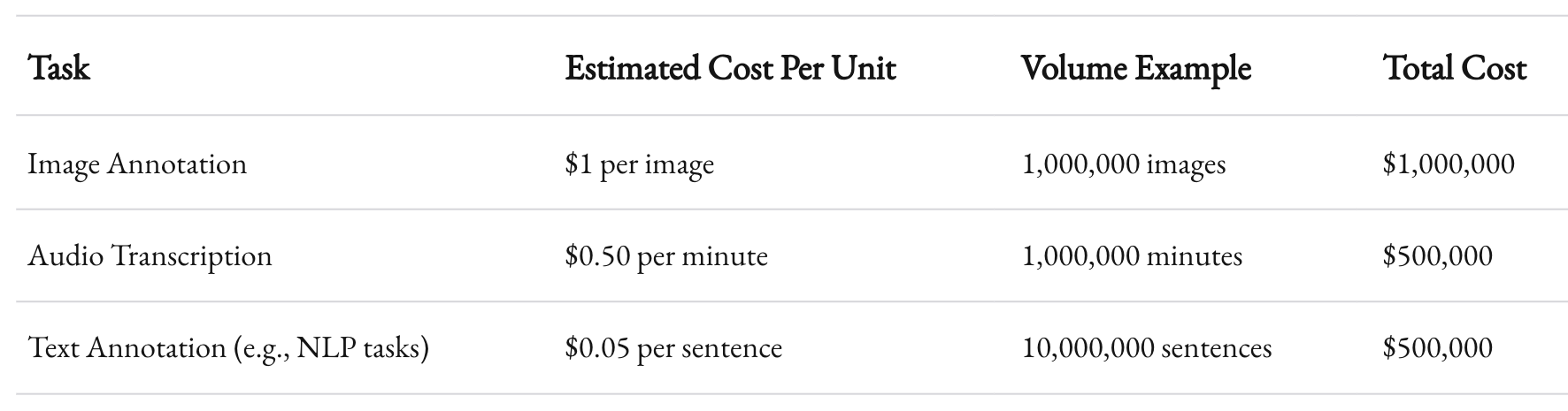

Horizontal and Vertical Scaling

Do you add more servers (horizontal scaling) or upgrade existing ones (vertical scaling)? Each option has trade-offs. Horizontal scaling usually calls for orchestration tools like Kubernetes, which add management complexity. Vertical scaling requires high-end hardware upgrades, which cost more upfront, and a single machine can only grow so far before you hit its limits.
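With horizontal scaling, the orchestrator decides how many replicas to run. The Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that core calculation plus min/max bounds; it ignores stabilization windows and other autoscaler behaviors, and the GPU-utilization metric in the example is a hypothetical custom metric.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA-style replica calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 inference pods averaging 90% GPU utilization against a 60% target
print(desired_replicas(4, current_metric=90, target_metric=60))  # → 6
```

Every extra replica is more hardware on the bill, which is why `max_replicas` doubles as a cost ceiling.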

Latency and Load Balancing

For real-time AI applications, low latency is a must. Achieving it often means serving models closer to users through edge computing or regional deployments, with load balancers spreading requests across model replicas and Content Delivery Networks (CDNs) caching static assets. These services typically charge by request volume, data transferred, and region, adding recurring expenses to your budget.
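Load balancers decide which replica handles each request. One common policy is least connections: send the next request to the backend with the fewest requests in flight, so a busy model server doesn’t build up a queue while others sit idle. This is a minimal single-process sketch with hypothetical backend names; production load balancers also handle health checks, timeouts, and concurrent access.

```python
class LeastConnectionsBalancer:
    """Route each request to the backend with the fewest in-flight requests."""

    def __init__(self, backends):
        self.active = {backend: 0 for backend in backends}

    def acquire(self) -> str:
        # min() returns the first minimum, so ties go to the earliest backend
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        self.active[backend] -= 1


lb = LeastConnectionsBalancer(["gpu-a", "gpu-b", "gpu-c"])
first = lb.acquire()   # "gpu-a"
second = lb.acquire()  # "gpu-b"
lb.release(first)      # gpu-a finishes its request
print(lb.acquire())    # → gpu-a (tied with gpu-c at zero, listed first)
```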

Resource Optimization Strategies

It’s not all doom and gloom. You can optimize costs if you’re strategic.

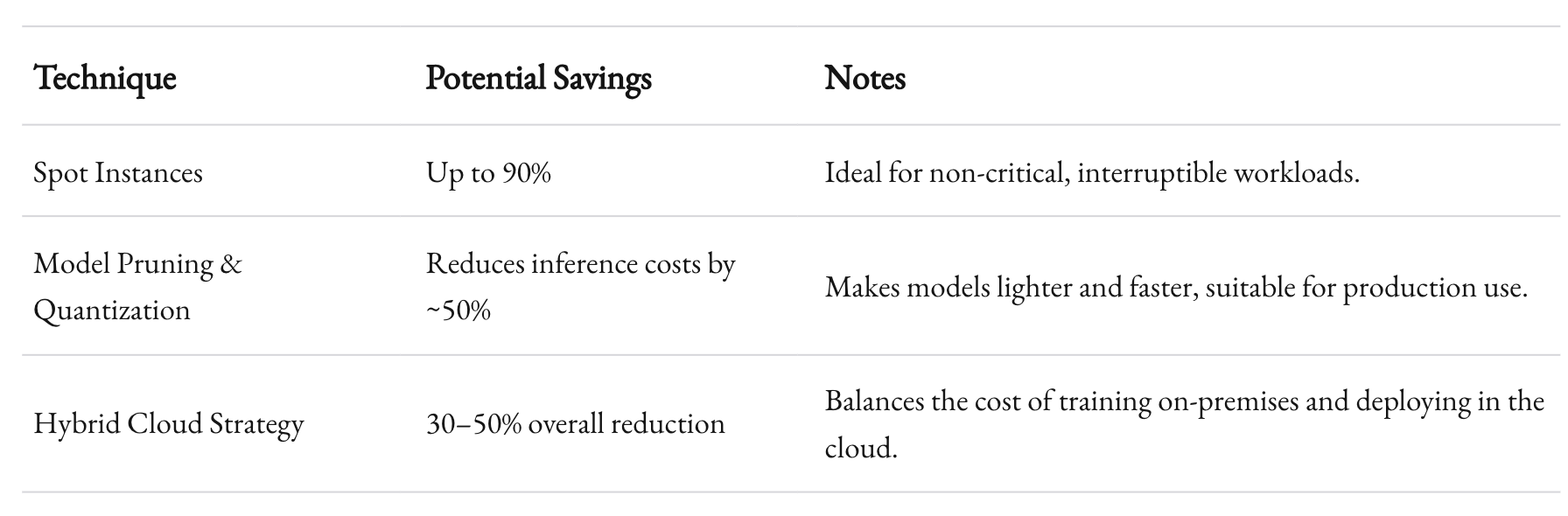

Spot Instances and Reserved Instances

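Cloud providers offer significant discounts through spot and reserved instances. Spot instances are often the cheapest option, but the provider can reclaim them on short notice (two minutes on AWS), so your workloads must tolerate interruptions. Reserved instances require a one- or three-year commitment but can save up to 72% over on-demand pricing.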
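Spot capacity is only a bargain if an interruption doesn’t throw away hours of work, which is why training jobs on spot instances usually checkpoint their progress. Here’s a minimal sketch of the pattern: state is saved every N steps with an atomic file replace, and a restarted job resumes from the last checkpoint. The JSON file, step counter, and `stop_at` parameter (which simulates the instance being reclaimed) are assumptions for illustration; real jobs would save model and optimizer state with their framework’s own tools, ideally to durable storage that outlives the instance.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then rename, so a crash mid-write can't corrupt it."""
    fd, tmp_path = tempfile.mkstemp(dir=os.path.dirname(os.path.abspath(path)))
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename on POSIX filesystems


def load_checkpoint(path: str) -> dict:
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0}  # no checkpoint yet: start from scratch


def train(path: str, total_steps: int, checkpoint_every: int = 100,
          stop_at: Optional[int] = None) -> int:
    """Run (simulated) training steps, resuming from any saved checkpoint."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if stop_at is not None and step == stop_at:
            return step  # simulate the spot instance being reclaimed
        # A real training step would run here.
        if (step + 1) % checkpoint_every == 0:
            save_checkpoint(path, {"step": step + 1})
    save_checkpoint(path, {"step": total_steps})
    return total_steps


ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
train(ckpt, total_steps=1000, stop_at=250)  # interrupted at step 250
print(load_checkpoint(ckpt))                # → {'step': 200}
train(ckpt, total_steps=1000)               # resumes from step 200
print(load_checkpoint(ckpt))                # → {'step': 1000}
```

Work done since the last checkpoint (steps 200 to 249 here) is repeated, so checkpoint frequency trades I/O overhead against compute lost to each interruption.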

Model Pruning and Quantization

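Not every model needs to run at full size or full precision. Pruning removes weights that contribute little to the output, and quantization stores weights in lower precision, for example 8-bit integers instead of 32-bit floats. Both reduce model size and computation requirements, which mainly lowers serving costs and can speed up inference in production.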
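To make the two techniques concrete, here’s a pure-Python sketch of magnitude pruning (zeroing the smallest weights) and symmetric per-tensor int8 quantization (mapping each weight to an integer in [-127, 127] plus one shared scale factor). These are simplified textbook versions operating on a plain list of numbers, not any framework’s implementation; in practice you’d use the pruning and quantization tooling built into your ML framework.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


weights = [0.5, -0.02, 1.27, -0.8, 0.01, 0.3]
print(magnitude_prune(weights, 0.5))  # → [0.5, 0.0, 1.27, -0.8, 0.0, 0.0]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Each int8 value needs 1 byte instead of 4 for float32, and rounding
# to the nearest integer keeps the error per weight within about scale / 2.
print(q, max(abs(a - b) for a, b in zip(weights, restored)))
```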

Hybrid Cloud Strategies

If you’re all-in on public cloud, you may be paying a premium, especially for steady, predictable workloads. A hybrid strategy, using a mix of on-premises hardware and cloud resources, can reduce costs significantly. For example, training models on-premises and deploying them in the cloud balances cost and scalability.
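Whether on-premises hardware pays off comes down to a break-even calculation: the up-front hardware cost divided by the monthly savings versus renting equivalent cloud capacity. The figures in this sketch are hypothetical, and it ignores depreciation, financing, and idle time; hardware that sits underused takes far longer to earn back its price.

```python
import math


def breakeven_months(hardware_cost: float, onprem_monthly: float,
                     cloud_monthly: float) -> float:
    """Months until owning hardware becomes cheaper than renting the cloud equivalent.

    onprem_monthly: power, space, and operations cost of running the hardware.
    Returns math.inf if owning never pays off.
    """
    monthly_savings = cloud_monthly - onprem_monthly
    if monthly_savings <= 0:
        return math.inf
    return hardware_cost / monthly_savings


# Hypothetical: $480k cluster costing $15k/month to run vs. $60k/month in the cloud
print(f"{breakeven_months(480_000, 15_000, 60_000):.1f} months")  # → 10.7 months
```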

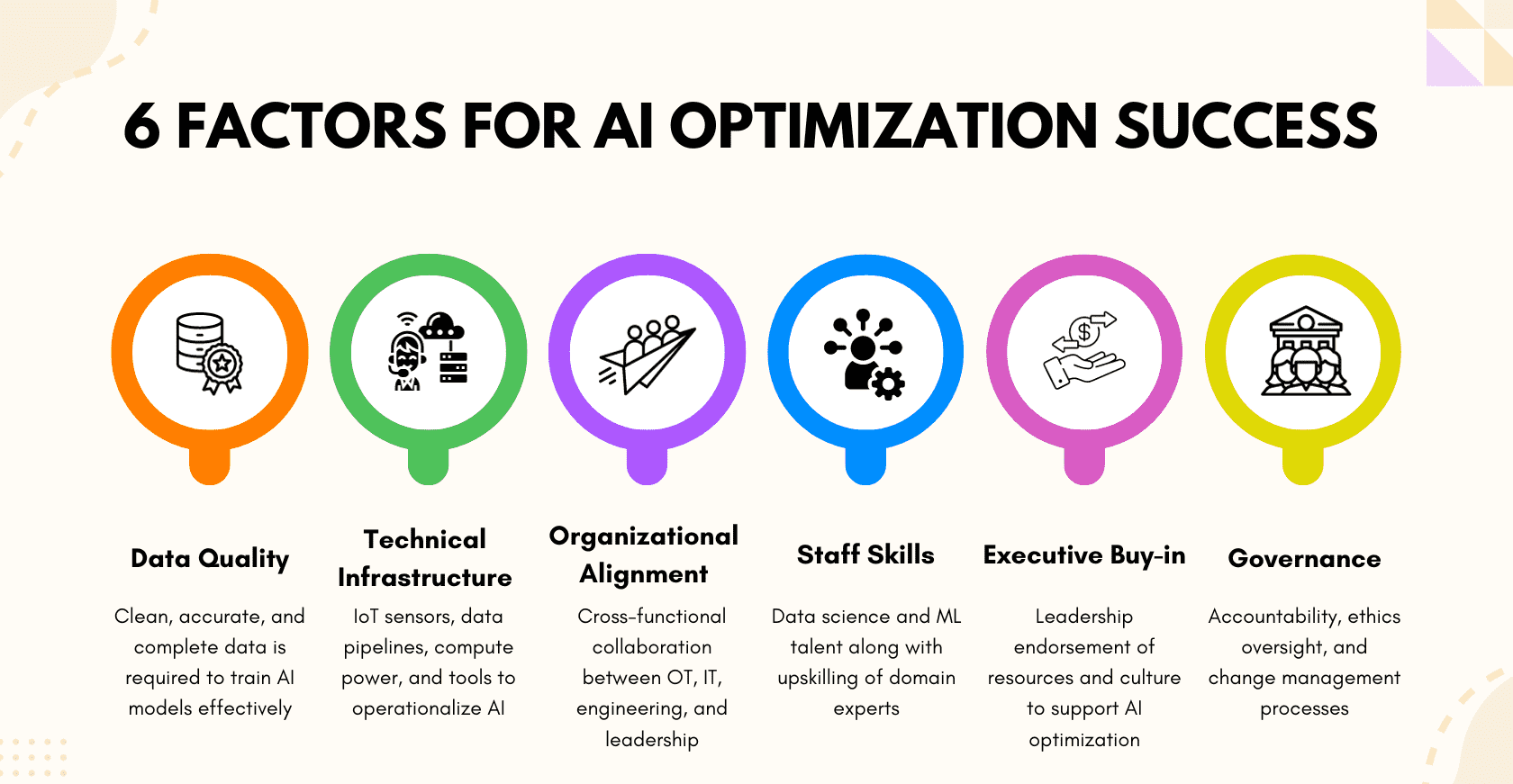

The People Factor

AI isn’t plug-and-play. You’ll need a team, and talent doesn’t come cheap.

Data Scientists and ML Engineers

Top-tier AI talent is in high demand. In the U.S., base salaries for machine learning engineers typically start in the $120,000–$150,000 range. Add bonuses, stock options, and perks, and the real cost of a hire can exceed $200,000 per employee.

DevOps and Infrastructure Teams

Your AI infrastructure needs maintenance. This requires skilled DevOps engineers who understand distributed systems, security, and cloud architectures. Salaries here are comparable to those of AI engineers.
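Salaries are only part of the bill. A rough way to budget a team is to apply an overhead multiplier for benefits, payroll taxes, and equipment on top of base pay, then add bonuses and equity. In this sketch the 30% overhead rate, the team mix, and every dollar figure are assumptions chosen for illustration.

```python
def annual_team_cost(roles, overhead_rate=0.30):
    """Estimate the yearly cost of a team.

    roles: (headcount, base_salary, bonus_and_equity) tuples.
    overhead_rate: assumed fraction of base salary added for benefits,
    payroll taxes, equipment, and similar costs.
    """
    return sum(
        headcount * (base * (1 + overhead_rate) + extra)
        for headcount, base, extra in roles
    )


# Hypothetical team and compensation figures
team = [
    (2, 140_000, 40_000),  # ML engineers
    (1, 135_000, 30_000),  # DevOps engineer
    (1, 110_000, 15_000),  # data engineer
]
print(f"${annual_team_cost(team):,.0f} per year")  # → $807,500 per year
```

With these assumed numbers, each ML engineer comes to about $222,000 a year, in line with the $200,000-plus figure above.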

Budget Smarter, Scale Better

AI infrastructure isn’t just about the initial investment. It’s a marathon, not a sprint. The true costs span hardware, software, data, scaling, and talent. If you’re considering diving into AI, plan for the long haul.

At 1985, we’ve seen firsthand how hidden costs can derail projects. But with careful planning and resource optimization, you can extract real value from your AI investments. Think long-term, and focus on strategies that align with your business goals—not just what looks good on paper.