System Testing Secrets the 10x Development Teams Know

Skip system testing and pay 100x more later: insider insights from 1985's software development trenches.

Ever watched a building collapse in slow motion? That's what happens when software goes live without proper system testing. System testing isn't dramatic. It isn't sexy. But it might just be the difference between your product soaring or crashing on launch day.

At 1985, we've seen it all. The rushed deployments. The skipped test phases. The 3 AM panic calls when production catches fire.

Let's cut through the noise and talk about system testing like professionals who've been in the trenches.

What System Testing Actually Is (And Isn't)

System testing evaluates a complete, integrated system to verify it meets specified requirements. Full stop.

It's not unit testing with a fancy name. It's not something you can skip because your developers "wrote clean code." System testing examines the entire application as users will experience it.

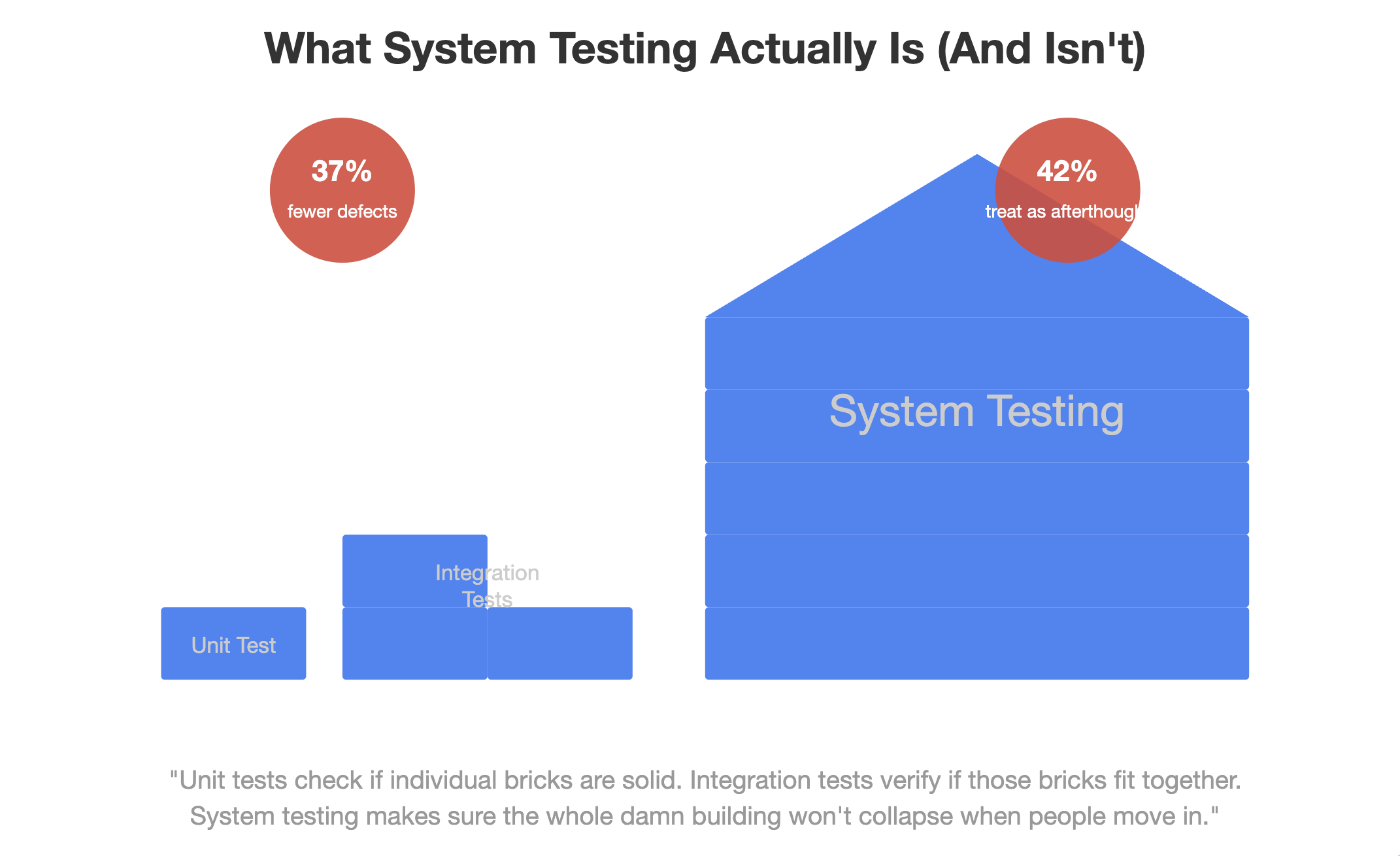

Think of it this way: unit tests check if individual bricks are solid. Integration tests verify if those bricks fit together. System testing makes sure the whole damn building won't collapse when people move in.

According to the 2023 State of Software Quality Report, projects that implement robust system testing experience 37% fewer critical post-release defects. Yet surprisingly, 42% of organizations still treat it as an afterthought.

System testing sits at that critical juncture between development completion and user acceptance. It's the last major verification before your software faces its harshest critics: actual users with actual expectations.

The Real-World System Testing Process

Forget the textbook definitions. Here's how system testing actually unfolds in the wild:

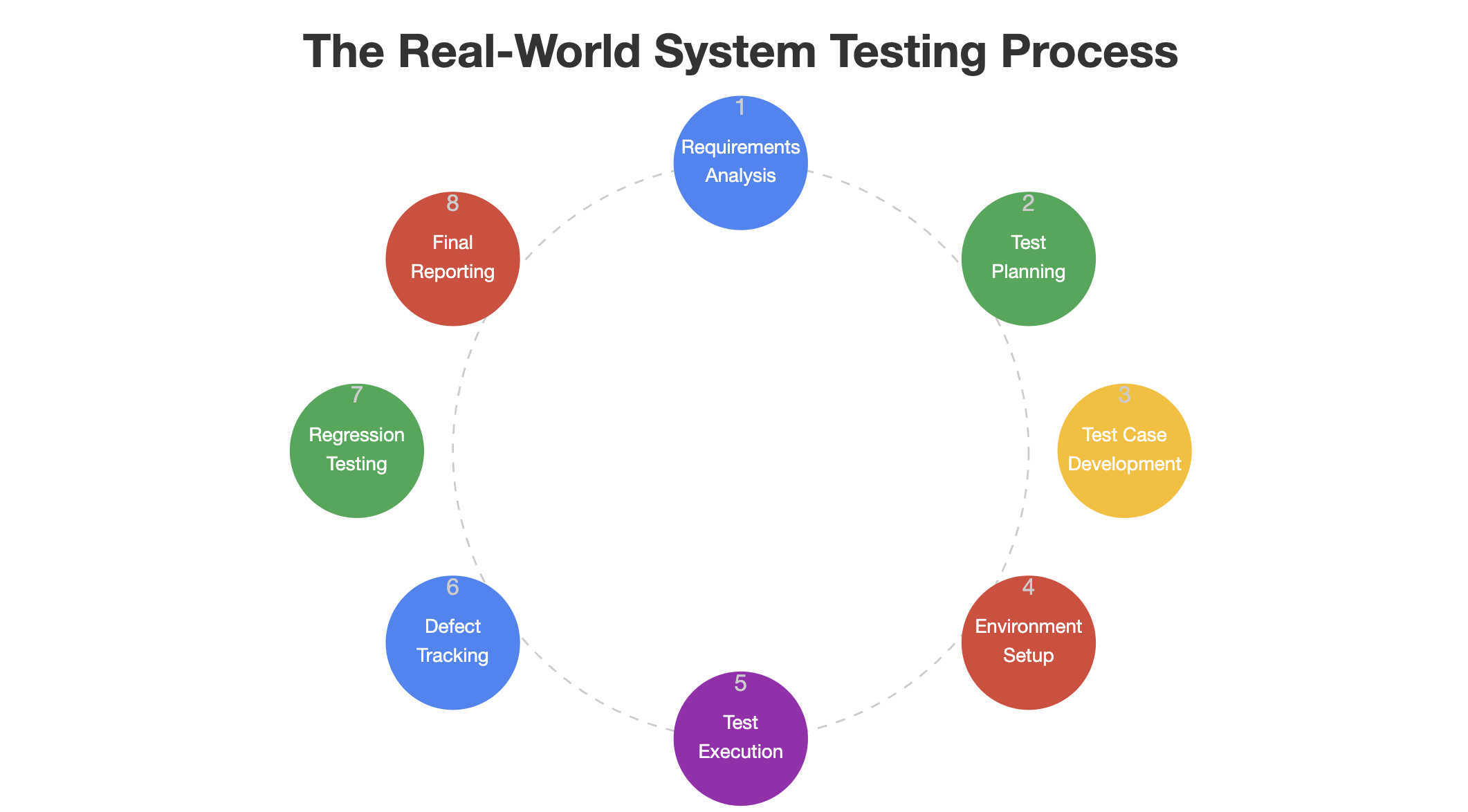

- Requirements Analysis: Dust off those requirements documents. You'll need them.

- Test Planning: Determine what to test, how to test it, and what success looks like.

- Test Case Development: Create detailed test scenarios that cover every functional and non-functional aspect.

- Test Environment Setup: Build an environment that mirrors production as closely as possible.

- Test Execution: Run the tests. Document everything.

- Defect Reporting and Tracking: Log issues with ruthless precision.

- Regression Testing: When fixes are implemented, verify they didn't break something else.

- Final Reporting: Compile results and make the go/no-go recommendation.

At 1985, we've found that the most overlooked step is environment setup. Companies cut corners here, then wonder why software that worked perfectly in testing falls apart in production. Your test environment should be a twin of production – same configurations, same data volumes, same everything.

A client once told us, "We don't have budget for a separate test environment." Six months later, they spent ten times that amount fixing a catastrophic production failure that proper system testing would have caught.
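One cheap way to catch corners being cut is to diff the two environments' settings before a test cycle starts. The sketch below is a minimal illustration, not our production tooling: the setting names and values are hypothetical, and it assumes you can export each environment's configuration as a flat dictionary.

```python
from typing import Any


def config_drift(production: dict[str, Any], test: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return every key whose value differs between the two environments.

    A key that is missing on one side is reported with None for that side.
    """
    drift = {}
    for key in sorted(production.keys() | test.keys()):
        prod_value = production.get(key)
        test_value = test.get(key)
        if prod_value != test_value:
            drift[key] = (prod_value, test_value)
    return drift


# Hypothetical settings, for illustration only.
production = {"db_version": "14.9", "pool_size": 50, "cache": "redis", "rows_orders": 12_000_000}
test = {"db_version": "14.9", "pool_size": 5, "cache": "in-memory", "rows_orders": 20_000}

for key, (prod_value, test_value) in config_drift(production, test).items():
    print(f"{key}: production={prod_value!r} test={test_value!r}")
```

An empty result doesn't prove the environments are twins, but a non-empty one tells you exactly where to look first.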

Types of System Testing You Can't Afford to Skip

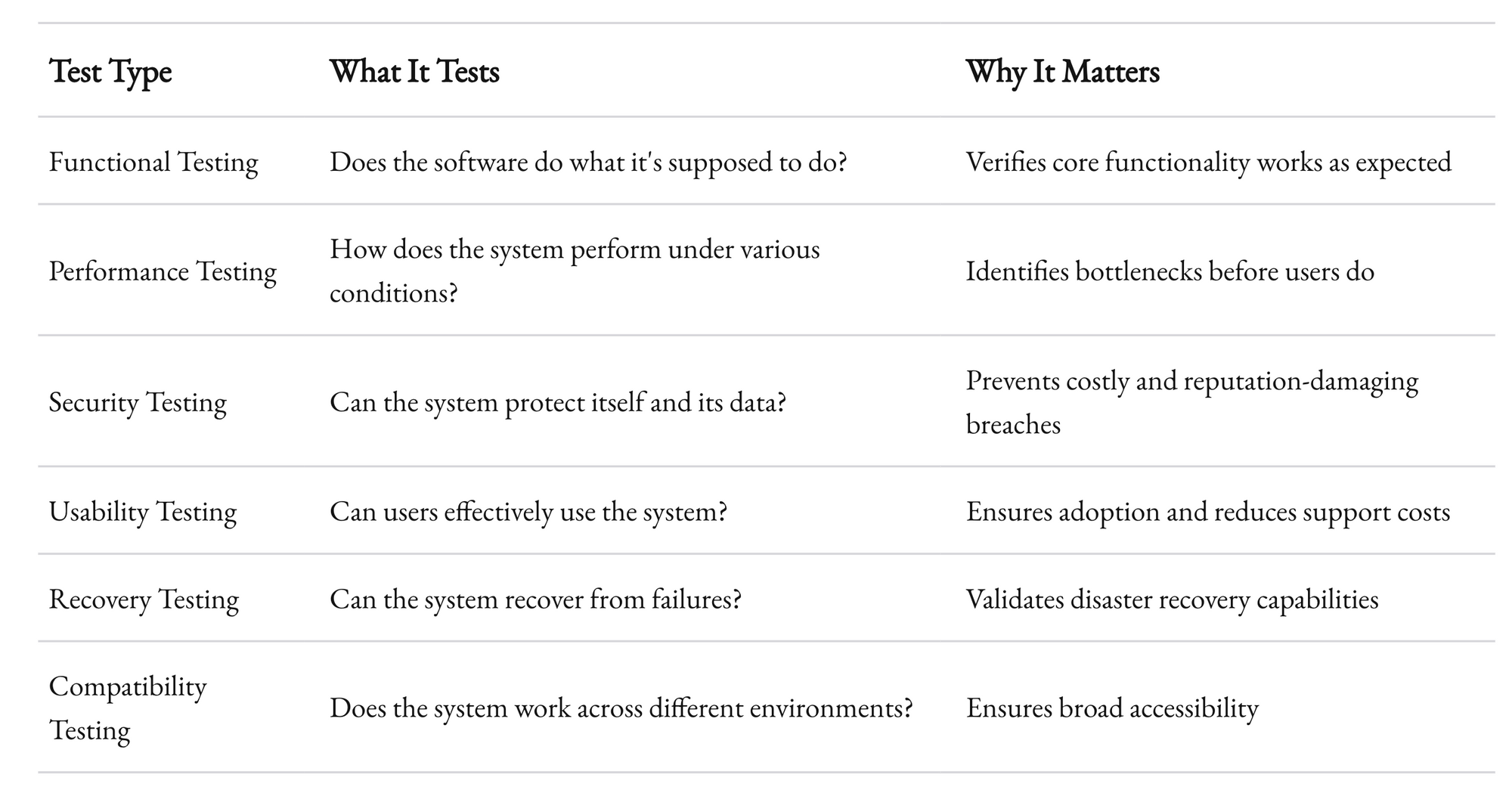

System testing isn't monolithic. It's a collection of specialized techniques, each designed to probe different aspects of your software.

"But we don't have time for all these tests!" I hear you protest. Consider this: a widely cited industry estimate, usually attributed to IBM's Systems Sciences Institute, holds that fixing a bug after release can cost up to 100 times more than fixing it early in development. System testing isn't an expense – it's an investment with measurable ROI.

At 1985, we've implemented a risk-based approach that prioritizes test types based on business impact. Not all systems need the same testing intensity. A life-critical medical application requires different testing rigor than an internal document management system.
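A risk-based approach can be as simple as scoring each test type by how likely a failure is and how much it would hurt. The sketch below assumes a 1-to-5 scale and a plain likelihood × impact score; the `RiskItem` class and the scores are hypothetical, and your own weighting may well differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskItem:
    name: str
    likelihood: int  # 1 (unlikely to fail) .. 5 (likely to fail)
    impact: int      # 1 (minor annoyance) .. 5 (business-critical)

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def prioritize(items):
    """Highest risk first; ties keep their original order."""
    return sorted(items, key=lambda item: item.risk, reverse=True)


# Hypothetical scores for one system, for illustration only.
candidates = [
    RiskItem("usability", likelihood=2, impact=2),
    RiskItem("security", likelihood=3, impact=5),
    RiskItem("performance", likelihood=4, impact=4),
    RiskItem("recovery", likelihood=2, impact=5),
]

for item in prioritize(candidates):
    print(f"{item.name}: risk {item.risk}")
```

The numbers matter less than the conversation they force: someone has to say out loud which failures the business can and cannot live with.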

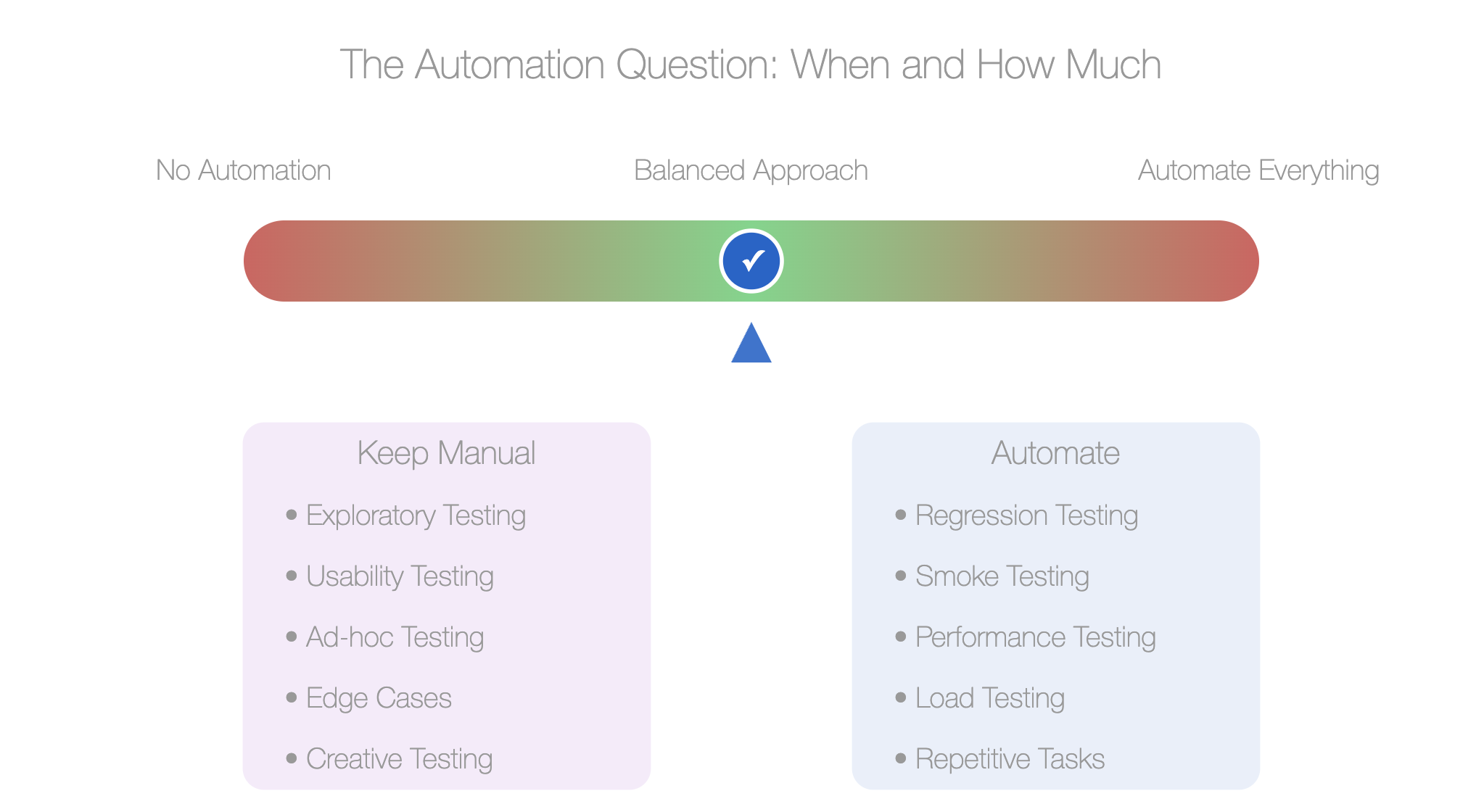

The Automation Question: When and How Much

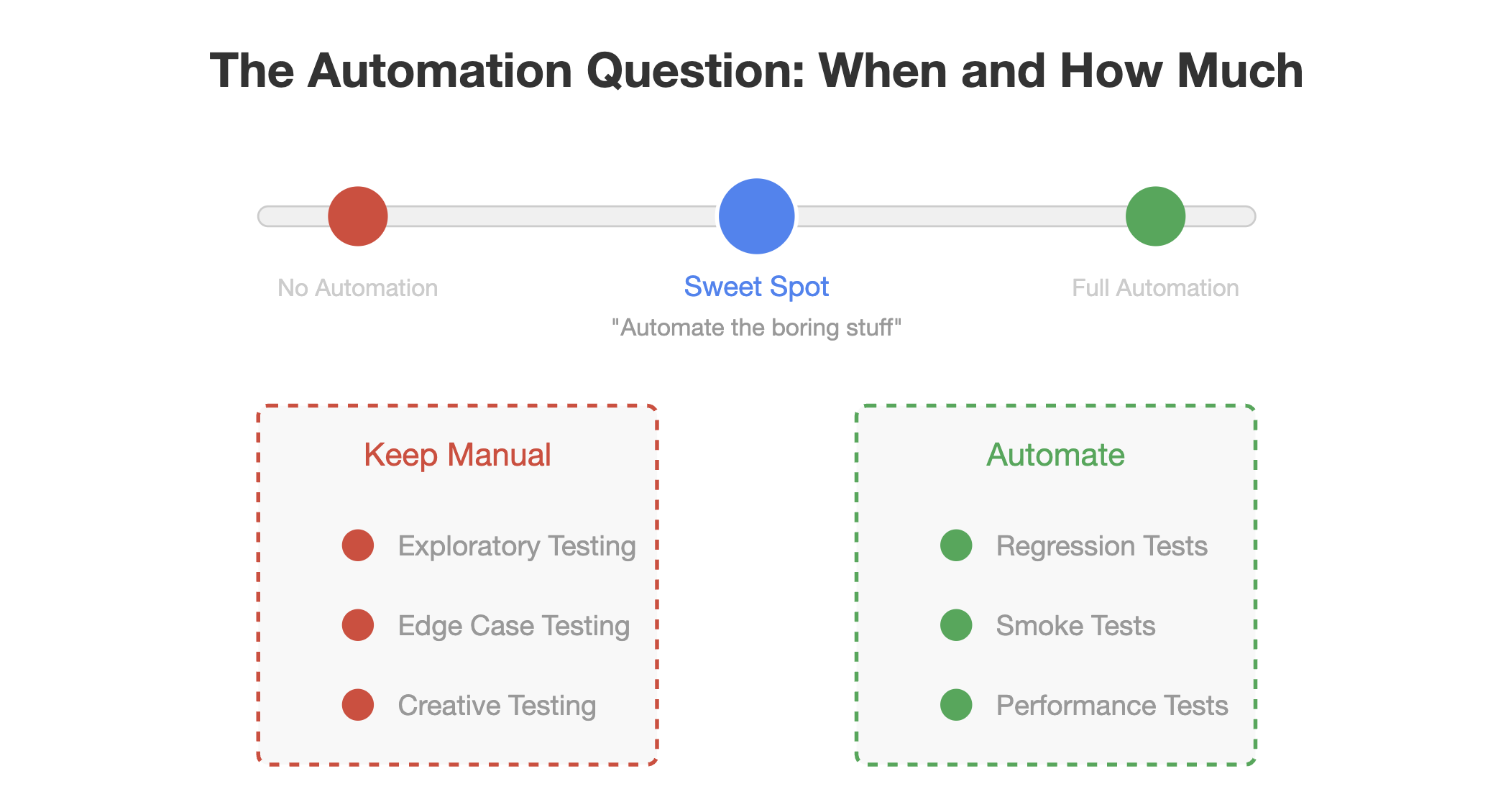

Automation in system testing isn't binary. It's a spectrum.

Some teams make the mistake of trying to automate everything, creating a maintenance nightmare. Others avoid automation entirely, missing significant efficiency gains.

The sweet spot? Automate repetitive, stable test cases while keeping exploratory and edge-case testing manual.

According to Capgemini's World Quality Report, organizations with mature test automation achieve 28% faster time-to-market. But the same report warns that poorly implemented automation can actually increase testing time and costs.

At 1985, we follow a simple rule: automate the boring stuff. Regression tests, smoke tests, and performance tests benefit tremendously from automation. Creative testing that requires human intuition stays manual.

Remember: automation doesn't replace testers – it empowers them to focus on higher-value activities.
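To decide whether a given test case belongs on the "boring stuff" list, a rough break-even calculation helps. The sketch below is one simple model, not a standard formula: it assumes a one-off build cost plus a fixed upkeep cost per run, and all figures are hypothetical minutes.

```python
import math


def break_even_runs(build_minutes: int, manual_minutes_per_run: int, upkeep_minutes_per_run: int):
    """Number of runs after which automation costs no more than manual execution.

    Returns None when upkeep per run is at least the manual effort,
    because automation then never pays back.
    """
    saving_per_run = manual_minutes_per_run - upkeep_minutes_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(build_minutes / saving_per_run)


# A stable regression check: 16 hours to build, 60 minutes by hand, 12 minutes of upkeep per run.
print(break_even_runs(960, 60, 12))

# A brittle UI check whose upkeep exceeds the manual effort.
print(break_even_runs(600, 20, 25))
```

A test that runs on every build crosses the break-even point within weeks. One that runs twice a year probably never will.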

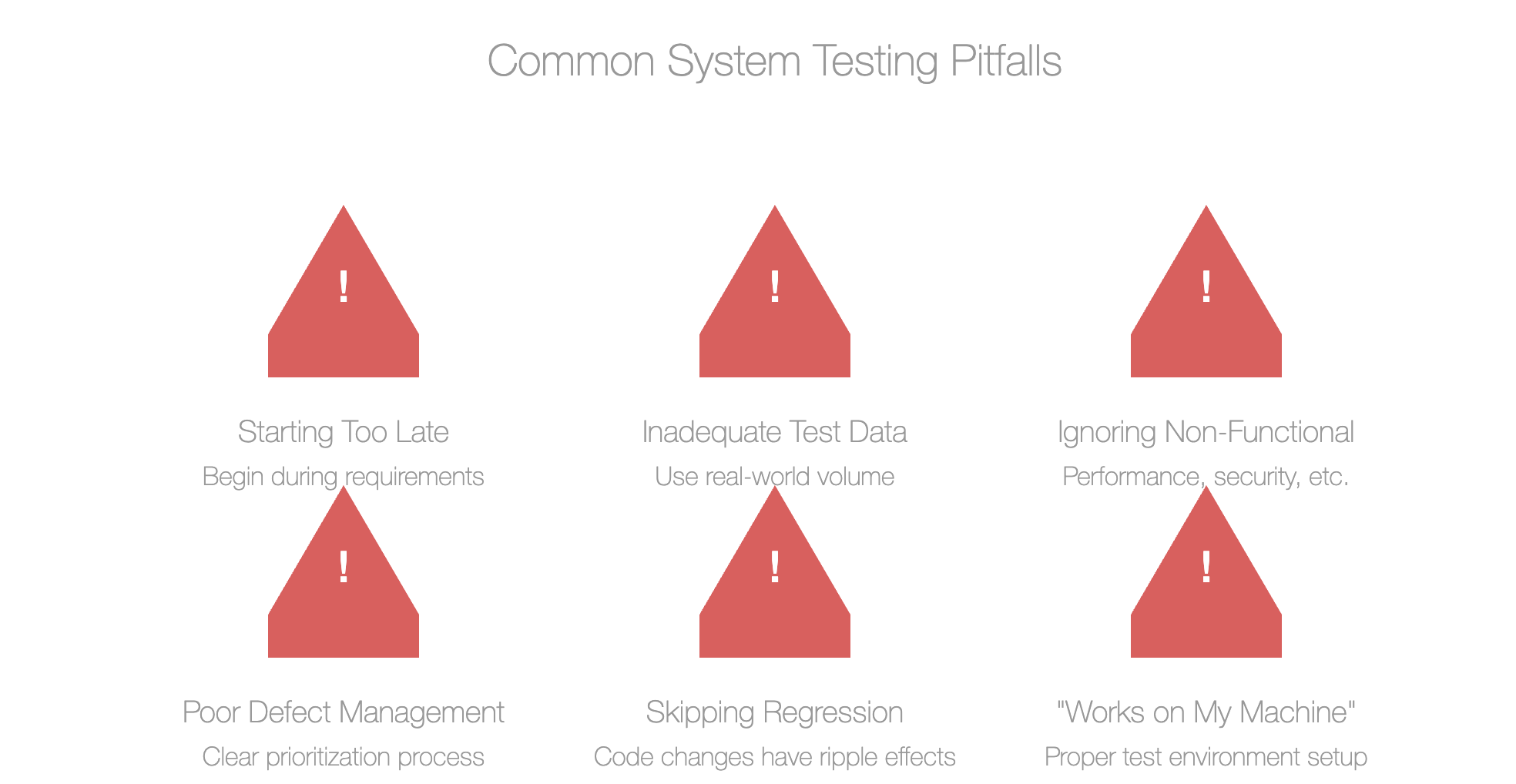

Common System Testing Pitfalls

After overseeing hundreds of system testing cycles, we've identified patterns in what goes wrong:

Starting too late. System testing isn't something you squeeze in before release. It requires planning and preparation that should begin during requirements gathering.

Inadequate test data. Using small, sanitized datasets for testing is like testing a bridge with a bicycle instead of a truck. Your test data should reflect real-world volume and complexity.

Ignoring non-functional requirements. Yes, the application works – but can it handle 10,000 concurrent users? Will it break if network connectivity drops for 30 seconds?

Poor defect management. Finding bugs is only half the battle. You need a clear process for prioritizing, fixing, and verifying fixes.

Skipping regression testing. Just because it worked yesterday doesn't mean it works today. Code changes have ripple effects.

The most insidious pitfall? The "it works on my machine" syndrome. I've seen development teams dismiss critical system test findings because they couldn't reproduce the issue in their environment. This is precisely why proper test environment setup is non-negotiable.
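The concurrency question is easy to start answering in code. The sketch below uses only the Python standard library and a stand-in `handle_request` function (hypothetical; swap in a real call to your system). It is an illustration of the idea, not a substitute for a proper load-testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> bool:
    """Stand-in for a real call to the system under test (hypothetical)."""
    time.sleep(0.001)
    return True


def timed_call(user_id: int):
    """Run one request and return (succeeded, seconds taken)."""
    start = time.perf_counter()
    try:
        ok = handle_request(user_id)
    except Exception:
        ok = False
    return ok, time.perf_counter() - start


def run_load(concurrent_users: int, total_requests: int) -> dict:
    if total_requests < 1:
        raise ValueError("total_requests must be at least 1")
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(timed_call, range(total_requests)))
    latencies = sorted(elapsed for _, elapsed in results)
    # Nearest-rank 95th percentile, computed with integer arithmetic.
    p95_index = (len(latencies) * 95 + 99) // 100 - 1
    return {
        "requests": len(results),
        "errors": sum(1 for ok, _ in results if not ok),
        "p95_seconds": latencies[p95_index],
    }


report = run_load(concurrent_users=20, total_requests=200)
print(report)
```

Twenty threads on a laptop is nowhere near 10,000 users, but the shape of the check is the same: fixed concurrency, an error count, and a latency percentile you agreed on in advance.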

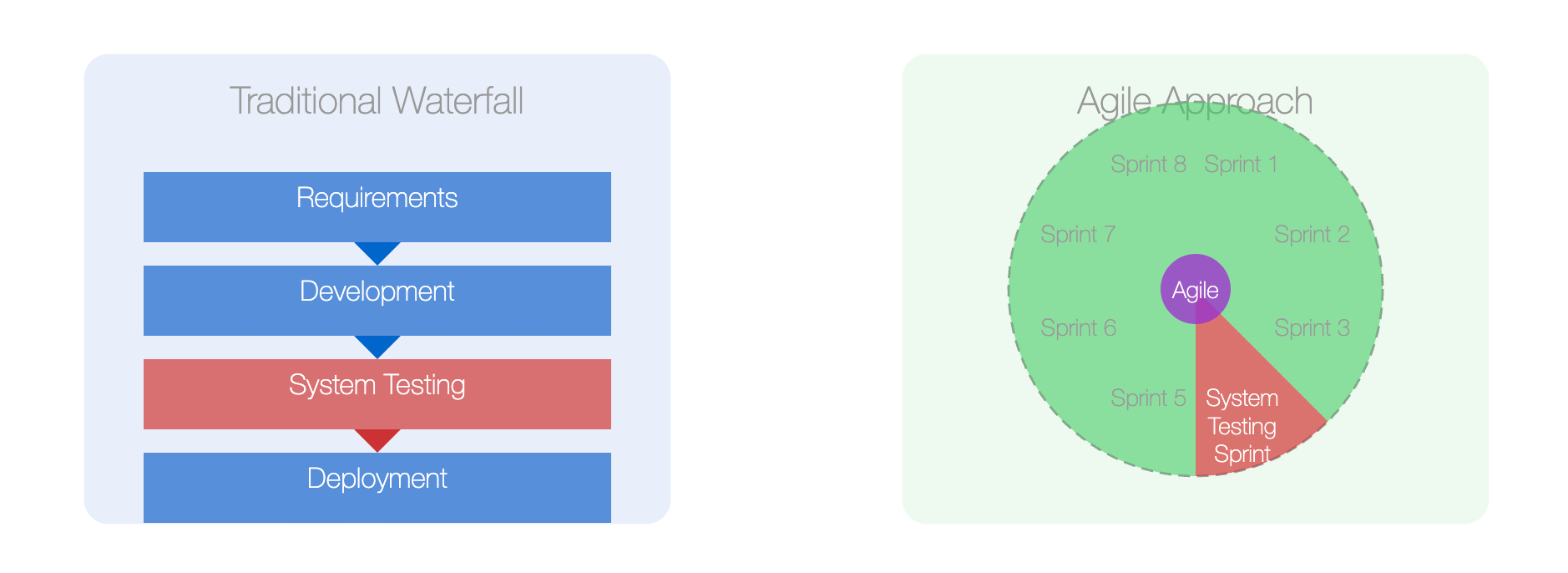

System Testing in Agile: Yes, It's Still Necessary

"We're Agile, we test continuously, so we don't need formal system testing."

I've heard this argument more times than I care to count. It's a dangerous misconception.

Agile doesn't eliminate the need for system testing – it changes how and when you do it. Instead of one massive testing phase at the end, you conduct mini system tests for each increment while still maintaining end-to-end testing for the entire system.

Spotify, often cited as an Agile success story, maintains dedicated system testing phases despite their highly iterative approach. Their engineering blog notes: "Continuous testing doesn't replace system testing; it complements it by catching issues earlier."

At 1985, we've integrated system testing into Agile workflows by:

- Maintaining a continuously updated regression test suite

- Conducting "system testing sprints" after every 2-3 development sprints

- Using feature toggles to test new functionality in production-like environments

- Implementing continuous integration pipelines that include automated system tests

The result? Faster releases with fewer critical defects.
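Feature toggles are what make the third point workable: the same build can be tested with new functionality on and off. Here's a minimal sketch, assuming toggles are read from environment variables named `FEATURE_<NAME>`; that naming convention, the `checkout_total` function, and the discount rule are all hypothetical.

```python
import os


def feature_enabled(name: str, environ=os.environ) -> bool:
    """Treat FEATURE_<NAME> set to 1, true, or on as enabled; anything else as disabled."""
    value = environ.get(f"FEATURE_{name.upper()}", "")
    return value.strip().lower() in {"1", "true", "on"}


def checkout_total(prices, environ=os.environ):
    """Sum prices in integer cents, applying a toggled discount."""
    total = sum(prices)
    if feature_enabled("bulk_discount", environ) and len(prices) >= 3:
        total = total * 90 // 100  # hypothetical 10% discount
    return total


def test_checkout_with_toggle_off():
    assert checkout_total([1000, 2000, 3000], environ={}) == 6000


def test_checkout_with_toggle_on():
    flags = {"FEATURE_BULK_DISCOUNT": "true"}
    assert checkout_total([1000, 2000, 3000], environ=flags) == 5400
```

Passing the environment in as a parameter keeps both paths testable in the same pipeline run, without touching real process state.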

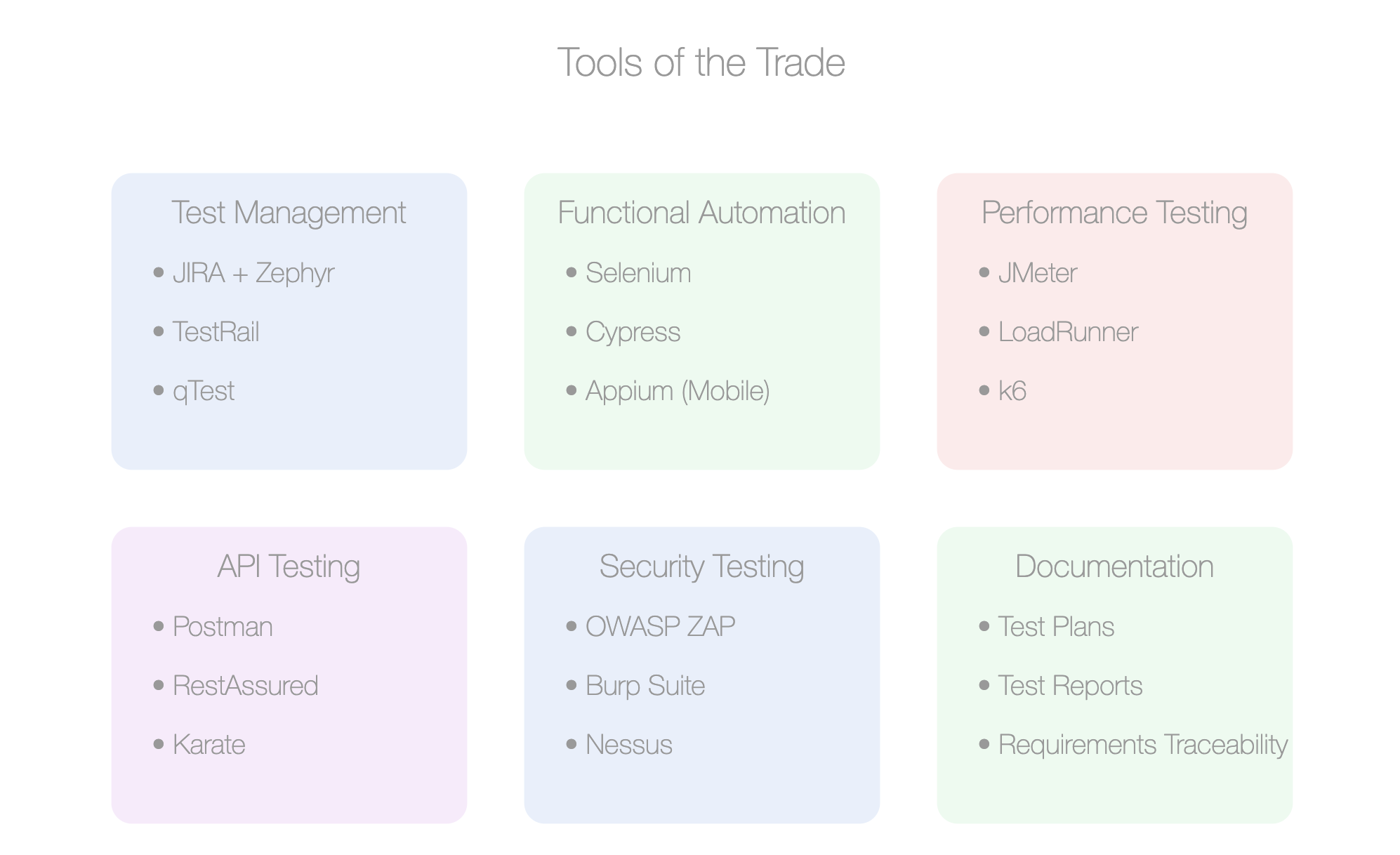

Tools of the Trade

The tooling landscape for system testing is vast and confusing. Here's what we've found effective at 1985:

For Test Management: Jira + Zephyr, TestRail, or qTest. The key is integration with your existing workflow.

For Functional Automation: Selenium remains the workhorse, but Cypress is gaining ground for web applications. Appium handles mobile testing admirably.

For Performance Testing: JMeter offers the best balance of power and accessibility. LoadRunner is comprehensive but expensive. k6 is excellent for developer-friendly performance testing.

For API Testing: Postman for manual exploration, REST Assured or Karate for automation.

For Security Testing: OWASP ZAP provides an excellent starting point. For more comprehensive testing, Burp Suite is worth the investment.

The most important tool? Documentation. Clear, concise test plans and reports are worth their weight in gold when stakeholders question the need for fixing "minor" issues before release.

Measuring System Testing Effectiveness

How do you know if your system testing is actually working? Look beyond simple metrics like test case pass/fail rates.

Effective measurements include:

Defect Leakage Rate: What percentage of bugs are found after system testing? Lower is better.

Defect Detection Percentage: What percentage of total defects are found during system testing? Higher is better.

Test Coverage: Not just code coverage, but requirements coverage. Are you testing everything that matters?

Mean Time to Detect: How quickly are defects identified after they're introduced?

Cost of Quality: What are you spending on prevention versus fixing issues in production?

At 1985, we've found that organizations fixate on the wrong metrics. They celebrate high pass rates without questioning if they're testing the right things. A 100% pass rate with shallow testing is worse than an 80% pass rate with comprehensive testing.
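The first three metrics above are simple ratios. The sketch below follows the definitions as worded in this article; teams define leakage in slightly different ways, so treat the formulas as one reasonable reading. The defect counts and requirement IDs are made up.

```python
def defect_detection_percentage(found_in_system_test: int, found_after: int) -> float:
    """Share of all known defects that system testing caught. Higher is better."""
    total = found_in_system_test + found_after
    if total == 0:
        raise ValueError("no defects recorded")
    return 100.0 * found_in_system_test / total


def defect_leakage_rate(found_in_system_test: int, found_after: int) -> float:
    """Share of all known defects that slipped past system testing. Lower is better."""
    total = found_in_system_test + found_after
    if total == 0:
        raise ValueError("no defects recorded")
    return 100.0 * found_after / total


def requirements_coverage(requirements: set[str], tested: set[str]) -> float:
    """Share of requirements exercised by at least one test."""
    if not requirements:
        raise ValueError("no requirements listed")
    return 100.0 * len(requirements & tested) / len(requirements)


# Hypothetical release: 45 defects caught in system testing, 5 reported afterwards.
print(defect_detection_percentage(45, 5))
print(defect_leakage_rate(45, 5))
print(requirements_coverage({"R1", "R2", "R3", "R4"}, {"R1", "R2", "R4", "R9"}))
```

Under these definitions the first two numbers always add up to 100, so you only need to report one of them. Pick the one your stakeholders react to.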

Building a System Testing Culture

Tools and processes matter, but people make or break system testing.

The most successful testing cultures share these traits:

Blameless reporting. Finding bugs is celebrated, not punished. Testers aren't viewed as the "quality police" but as valuable contributors to product excellence.

Cross-functional involvement. Developers participate in test planning. Testers understand the code. Product managers validate test scenarios against business requirements.

Executive support. Leadership understands that quality isn't negotiable and provides the necessary resources and time.

Continuous learning. Test teams stay current with new techniques and tools through regular training and knowledge sharing.

At 1985, we've transformed testing cultures by implementing "bug bounties" where developers receive recognition for finding issues in their own code before testing begins. This simple practice shifts the mindset from "passing QA" to "building quality."

Making the Business Case

Let's talk money. System testing requires investment – time, tools, and talent don't come free. How do you justify this to stakeholders focused on deadlines and budgets?

The data is compelling:

- IBM reports that defects fixed after release cost 4-5 times more than those fixed during testing.

- According to CISQ, poor software quality cost US companies $2.08 trillion in 2020 alone.

- Gartner research shows that high-performing IT organizations spend 28% less on rework than their peers.

At 1985, we track and report on "cost avoidance" – what would have happened if critical defects had reached production. When a system test catches an issue that would have affected 10,000 users, calculate the support costs, lost productivity, and potential revenue impact. These numbers make compelling arguments for thorough testing.
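A cost-avoidance figure is just arithmetic once you agree on the inputs. The sketch below shows one possible breakdown. Apart from the 10,000 affected users mentioned above, every number is a hypothetical placeholder, and the cost model itself is an assumption you should adapt to your own business.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AvoidedIncident:
    affected_users: int
    ticket_rate_percent: int    # share of affected users who would contact support
    cost_per_ticket: int        # dollars
    lost_minutes_per_user: int
    hourly_user_cost: int       # dollars
    lost_revenue: int           # dollars

    @property
    def support_cost(self) -> int:
        return self.affected_users * self.ticket_rate_percent * self.cost_per_ticket // 100

    @property
    def productivity_cost(self) -> int:
        return self.affected_users * self.lost_minutes_per_user * self.hourly_user_cost // 60

    @property
    def total(self) -> int:
        return self.support_cost + self.productivity_cost + self.lost_revenue


incident = AvoidedIncident(
    affected_users=10_000,
    ticket_rate_percent=5,
    cost_per_ticket=15,
    lost_minutes_per_user=30,
    hourly_user_cost=40,
    lost_revenue=25_000,
)

print(f"Support: ${incident.support_cost:,}")
print(f"Productivity: ${incident.productivity_cost:,}")
print(f"Total avoided: ${incident.total:,}")
```

Keep the inputs visible in the report. Stakeholders will argue with the assumptions, and that argument is far more productive than one about whether testing matters at all.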

Remember: The ROI of system testing isn't just about avoiding costs – it's about preserving reputation and customer trust, which are much harder to quantify but far more valuable.

The Future of System Testing

System testing is evolving rapidly. Here's what we're preparing for at 1985:

AI-Augmented Testing: Machine learning algorithms that identify high-risk areas and generate test cases based on user behavior patterns.

Chaos Engineering: Deliberately introducing failures to test system resilience, pioneered by Netflix but increasingly adopted across industries.

Shift-Right Testing: Extending testing into production through feature flags, canary releases, and sophisticated monitoring.

Testing in Production: Controlled experiments with real users on real systems, providing insights that no pre-production testing can match.

Continuous Verification: Moving beyond pass/fail to continuous quality assessment throughout the software lifecycle.

The fundamentals remain unchanged: verify the system works as intended before users depend on it. The methods, however, continue to evolve.
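Chaos engineering sounds exotic, but the core idea fits in a few lines: inject a failure on purpose and check that the system copes. The sketch below is a toy, in-process illustration with a hypothetical `FlakyDependency` and a simple retry wrapper. Real chaos experiments target running infrastructure, not a Python object.

```python
class FlakyDependency:
    """Hypothetical dependency that fails its first `failures` calls, then recovers."""

    def __init__(self, failures: int):
        self.remaining_failures = failures
        self.calls = 0

    def fetch(self) -> str:
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("injected failure")
        return "ok"


def fetch_with_retry(dependency, attempts: int = 3) -> str:
    """Try the dependency up to `attempts` times before giving up."""
    last_error = None
    for _ in range(attempts):
        try:
            return dependency.fetch()
        except ConnectionError as error:
            last_error = error
    raise RuntimeError("dependency unavailable") from last_error


# Two injected failures are survivable with three attempts.
recovering = FlakyDependency(failures=2)
print(fetch_with_retry(recovering), "after", recovering.calls, "calls")
```

The interesting test is the one where the injected failures outnumber the retries: does the caller fail loudly and cleanly, or does it hang?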

System Testing as Competitive Advantage

In an industry obsessed with speed, system testing can feel like an unnecessary brake on progress. This is shortsighted thinking.

The companies that dominate their markets – Amazon, Google, Microsoft – invest heavily in system testing. They understand that quality at scale is impossible without it.

At 1985, we've seen firsthand how robust system testing transforms from a cost center to a competitive advantage. Our clients who embrace thorough testing release more frequently, with fewer critical issues, and maintain higher customer satisfaction.

System testing isn't just about finding bugs. It's about building confidence – confidence to release, confidence to innovate, confidence to scale.

In software development, as in life, you can pay now or pay later. System testing is paying now, on your terms, rather than paying later, on your users' terms.

The choice seems clear.