Streamlining the Code Review Process: A Deep Dive for Outsourced Dev Teams

Make every review count—learn how to speed up feedback and foster collaboration with our expert tips.

When I first started at 1985, I quickly learned that the key to building robust software wasn’t just about writing great code; it was also about the conversations that happened around it. One conversation in particular stands out: the code review. It’s a powerful tool for sharing knowledge and catching bugs. But if it isn’t managed well, it can become a bottleneck that slows down progress. In this post, I’ll share my personal experiences and the hard-won lessons from years of working with outsourced software development teams. We’ll explore practical ways to streamline our code review process without sacrificing quality.

At 1985, we deal with distributed teams, tight deadlines, and diverse codebases. I’m not here to offer generic advice. Instead, I’ll dive into nuanced, industry-specific insights that have transformed our review process. Let’s get into it.

Getting Real About Code Reviews

I remember the early days. A single review session could stretch for hours. Comments piled up. Developers waited for feedback. Nobody was to blame; the process itself was dragging down progress. We needed a change.

A streamlined process can do wonders. But it isn’t achieved by simply cutting corners. It’s about creating a system that respects everyone’s time, minimizes friction, and ultimately produces better software. Streamlining is not a buzzword. It’s a commitment to continuous improvement.

There are hard truths here. Even with the best intentions, our review process can stall if it becomes a mere formality rather than a genuine conversation. The trick is to balance thoroughness with speed, to be meticulous but not obsessive, and to avoid turning reviews into “gotcha” sessions. This means reevaluating everything: from how we write code to how we communicate feedback. Every single step matters.

When I talk to peers across the industry, the conversation inevitably turns to wasted hours and missed deadlines because reviews drag on. According to a 2021 report by SmartBear, teams that fail to optimize code reviews see a 20–30% slowdown in their development cycles. We can’t afford that kind of lag, especially in a competitive market. The objective is simple: Make reviews efficient, informative, and actionable.

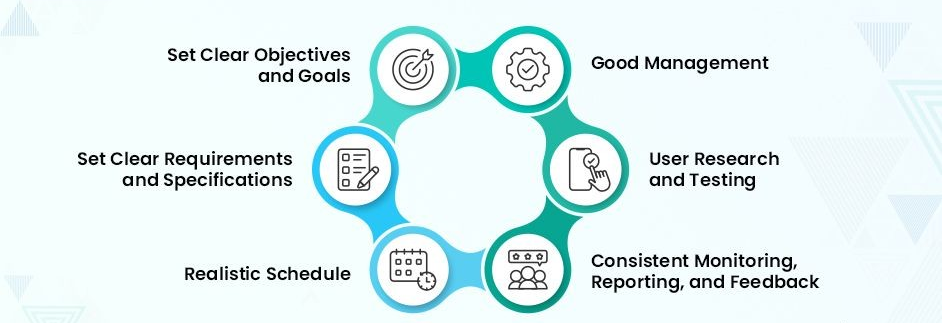

Setting Clear Objectives

Clear objectives form the foundation of any efficient process. If you don’t know what you’re aiming for, how can you measure success? When we at 1985 revamped our review process, we started with clear goals.

What Are We Trying to Achieve?

- Faster Feedback: We need quick turnarounds. Waiting days for a review isn’t an option.

- Quality Assurance: Every change must meet our high standards.

- Knowledge Sharing: Code reviews should educate as much as they inspect.

- Minimal Disruption: The process must integrate seamlessly into our workflow.

Each objective is a pillar that holds up the process. When one pillar wavers, the whole structure is compromised. In one case, a delay in feedback led to a bug that went undetected for too long. We learned then that prompt reviews are not just a luxury—they’re essential for reliability.

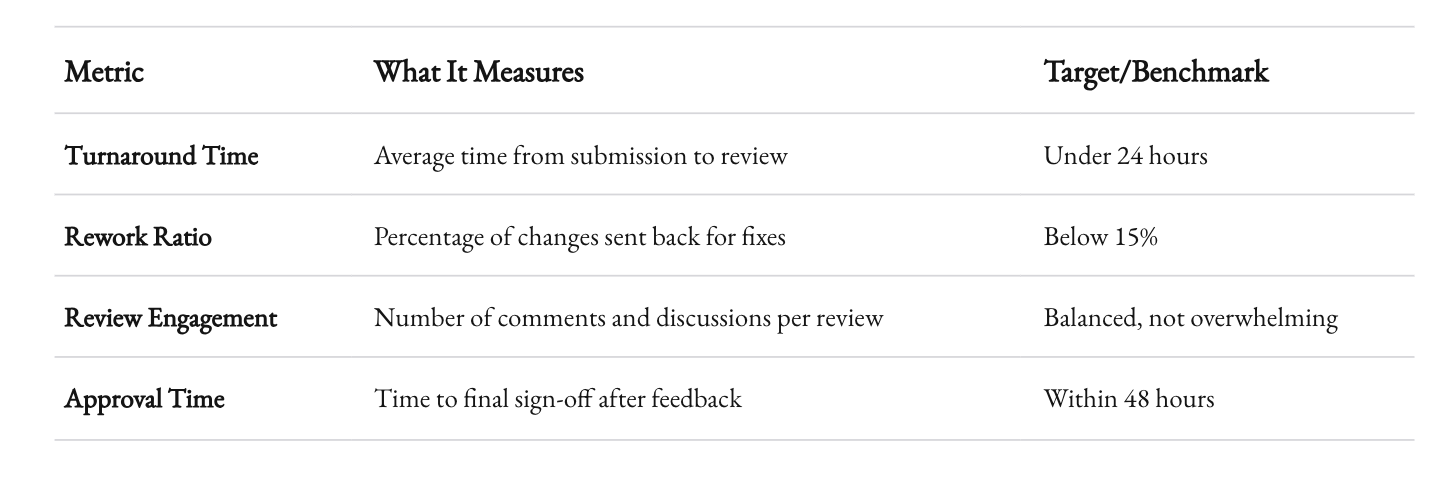

Measuring Success

Metrics matter. They give you tangible feedback on your progress. At 1985, we track indicators such as review turnaround time and rework ratio.

By keeping a close eye on these metrics, we can pinpoint what’s working and what isn’t. For example, if the rework ratio climbs, it might signal that our initial coding guidelines need improvement. Or if turnaround times are slipping, maybe the workload distribution is off.
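To make the two metrics mentioned above concrete, here is a minimal Python sketch. The definitions are assumptions for illustration: turnaround is taken as the time from a review request to the first reviewer response, and rework ratio as the share of pull requests that needed at least one revision after review. The `PullRequest` record and the sample data are hypothetical; in practice the timestamps would come from your Git host.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class PullRequest:
    """Hypothetical summary of one pull request's review history."""
    review_requested_at: datetime
    first_review_at: datetime
    revisions_after_review: int  # pushes made in response to review comments


def median_turnaround(prs: list[PullRequest]) -> timedelta:
    """Median wait between a review request and the first reviewer response.

    Raises statistics.StatisticsError if `prs` is empty.
    """
    waits = [(pr.first_review_at - pr.review_requested_at).total_seconds() for pr in prs]
    return timedelta(seconds=median(waits))


def rework_ratio(prs: list[PullRequest]) -> float:
    """Fraction of pull requests that needed at least one revision after review."""
    if not prs:
        return 0.0
    reworked = sum(1 for pr in prs if pr.revisions_after_review > 0)
    return reworked / len(prs)


# Made-up sample data for illustration only.
prs = [
    PullRequest(datetime(2024, 5, 1, 9), datetime(2024, 5, 1, 11), 0),   # 2 h wait
    PullRequest(datetime(2024, 5, 1, 10), datetime(2024, 5, 2, 10), 2),  # 24 h wait
    PullRequest(datetime(2024, 5, 2, 14), datetime(2024, 5, 2, 18), 1),  # 4 h wait
]
print(median_turnaround(prs))      # 4:00:00
print(f"{rework_ratio(prs):.0%}")  # 67%
```

The median is used rather than the mean so that one pull request left waiting over a weekend does not dominate the turnaround figure.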

Metrics are not a tool for policing, but rather for improvement. They provide a mirror to see our process from the outside. When everyone understands the benchmarks, there’s a shared goal—a common vision of efficiency and quality.

Building a Collaborative Culture

The best process in the world will fail if the team isn’t on board. At 1985, we discovered that the culture around code reviews is as important as the technical process itself.

The Role of Communication

Communication is key. Reviews should be conversations, not critiques. I’ve seen too many teams where feedback feels like a personal attack. We’ve worked hard to create an environment where every comment is constructive. That means emphasizing empathy, clarity, and context. If someone’s code isn’t up to par, we focus on the solution rather than the problem.

To foster this environment, we started with weekly check-ins where team members could discuss their experiences with the review process. What was working? What wasn’t? These sessions revealed a surprising amount of frustration over vague comments and unclear guidelines. We took that feedback and overhauled our approach.

For instance, we now include a short “review guideline” document with every project. It outlines what’s expected, the style guidelines, and the rationale behind our decisions. It’s not about micromanagement. It’s about transparency and consistency. When everyone is on the same page, the reviews become more productive and less contentious.

Trust and Autonomy

A significant part of streamlining is trusting your team. Micromanagement is the enemy of creativity and speed. At 1985, we empower our developers to take ownership of their code. Trust them to do the right thing. Of course, that trust is built on a foundation of shared standards and rigorous training.

In our culture, code reviews are seen as opportunities to learn, not as audits waiting to catch mistakes. We celebrate improvements and encourage constructive criticism. As one senior developer said, “I like reviews because they’re a chance to see another’s perspective. It’s a shared journey towards excellence.” This mindset shift has made all the difference.

When every member feels valued, they’re more likely to engage meaningfully in the process. And meaningful engagement leads to higher quality code. We’re not chasing perfection; we’re chasing progress.

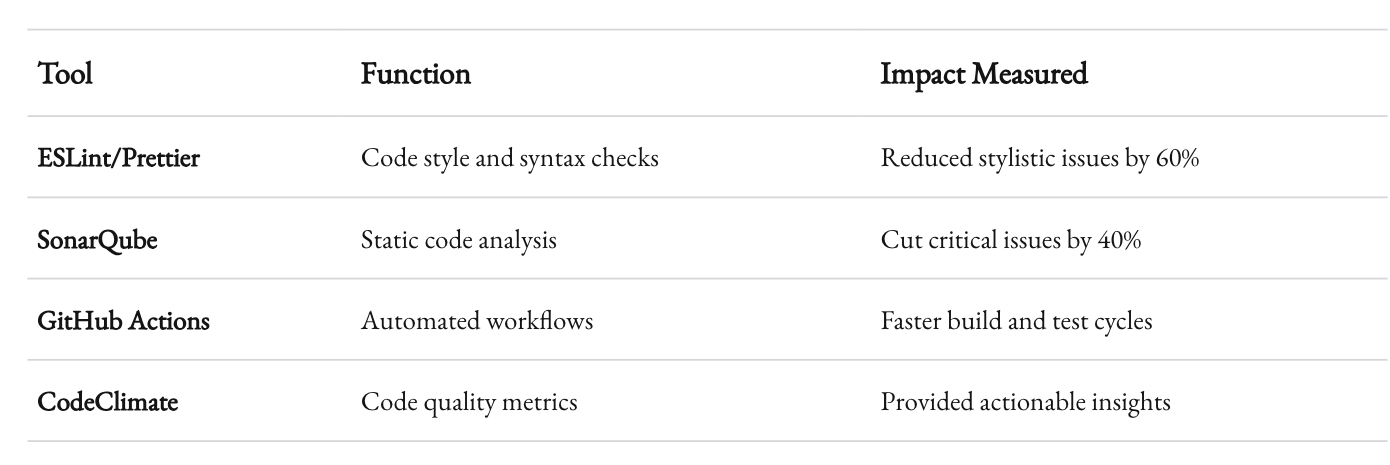

Automating the Mundane

Not all aspects of code review require a human touch. Automation can take care of the mundane, freeing up your team to focus on the nuanced aspects of quality and architecture.

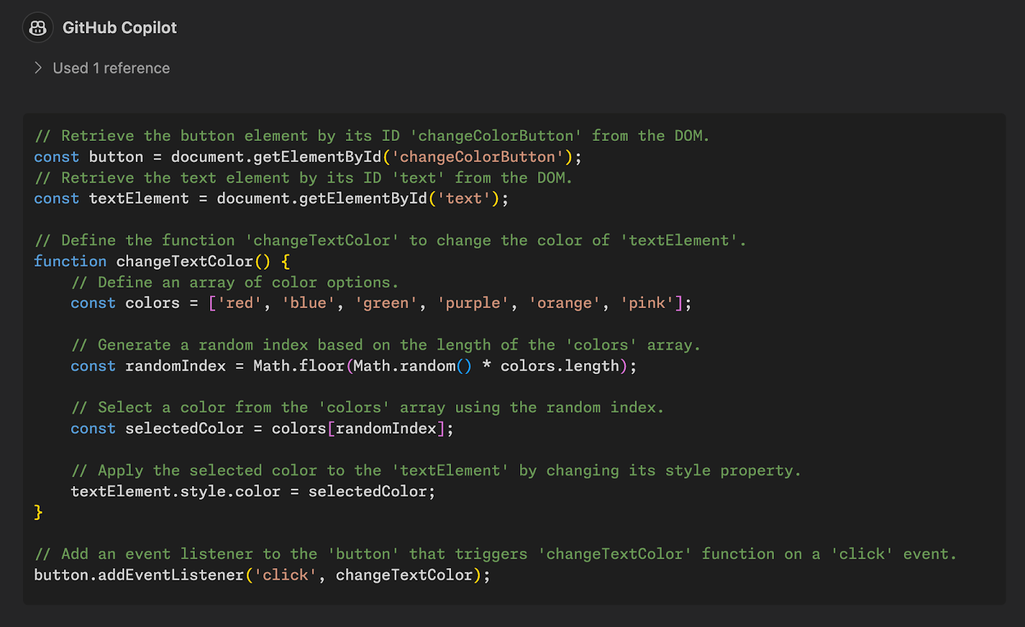

The Use of Tools

Automation tools have become indispensable in our workflow. CI platforms like GitHub Actions, GitLab CI/CD, and Bitbucket Pipelines run checks that catch common issues before a human even looks at the code. Linters, formatters, and static code analyzers can enforce style and detect potential errors in seconds.

For example, integrating ESLint and Prettier into our CI pipeline has drastically reduced the number of trivial comments during reviews. Developers now spend less time debating spaces and tabs and more time on design patterns and logic. In one instance, our integration of SonarQube helped us reduce critical issues by 40% within a quarter. The numbers speak for themselves.

Automation doesn’t replace human insight—it augments it. By filtering out the noise, these tools allow developers to focus on the bigger picture. It’s like having a pair of smart glasses that highlight only what matters. We use automation to handle the “grunt work” and then bring in the human touch for complex architectural decisions and nuanced feedback.

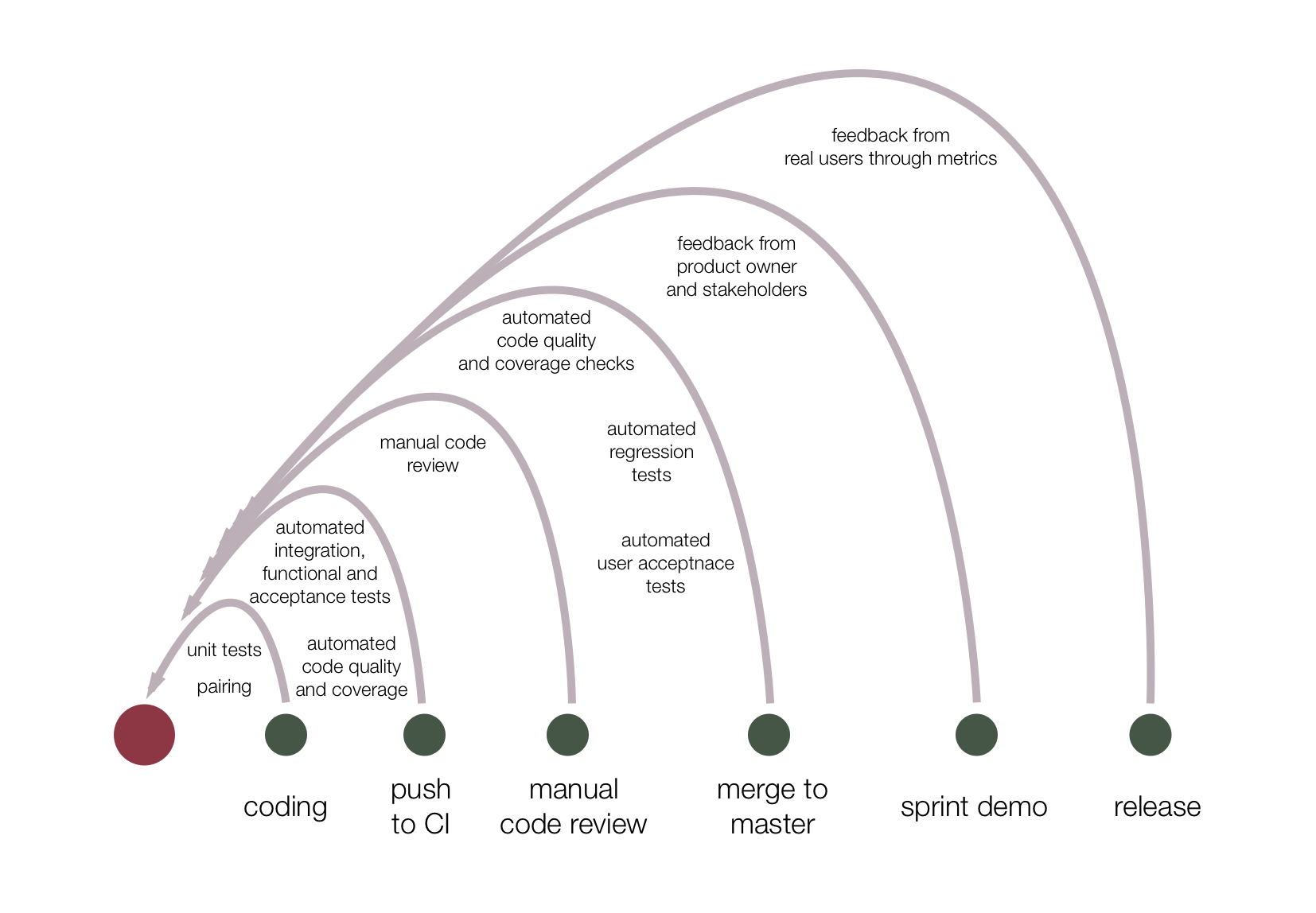

Integrating Automation into the Workflow

The key is seamless integration. Automation should be a natural part of the workflow, not an afterthought. At 1985, we ensure that every commit triggers a series of automated checks. This happens before a code review is even requested. The automated process gives developers immediate feedback. It’s a first line of defense.
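A pre-review gate like this can be sketched as a small Python runner that executes each check and reports failure if any check fails. The ESLint and Prettier commands are only examples and assume a Node.js project with both tools installed; the check names and the `main()` entry point are hypothetical, and in a real pipeline the CI platform would usually run these steps itself.

```python
import subprocess
import sys

# Example commands only; assumes ESLint and Prettier are installed in the project.
DEFAULT_CHECKS = {
    "lint": ["npx", "eslint", "."],
    "format": ["npx", "prettier", "--check", "."],
}


def run_checks(checks: dict[str, list[str]]) -> dict[str, bool]:
    """Run each command in turn; a zero exit code counts as a pass."""
    results = {}
    for name, argv in checks.items():
        try:
            completed = subprocess.run(argv, capture_output=True, text=True)
            passed = completed.returncode == 0
        except FileNotFoundError:
            passed = False  # the tool is not installed, so the check cannot pass
        results[name] = passed
        # Log to stderr so CI output shows each check's status.
        print(f"[{'ok' if passed else 'FAILED'}] {name}: {' '.join(argv)}", file=sys.stderr)
    return results


def main() -> int:
    """Return a process exit code: 0 only if every check passed."""
    results = run_checks(DEFAULT_CHECKS)
    return 0 if all(results.values()) else 1
```

Invoked as `sys.exit(main())` from a CI step, a non-zero exit marks the commit as failing before anyone is asked to review it.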

We’ve also set up dashboards that display key metrics from these tools. This transparency keeps everyone in the loop and highlights areas for improvement. When issues are detected early, there’s no need for lengthy review discussions to address basics that could have been automated away.

This isn’t about removing accountability; it’s about making the process smarter and more efficient. When you automate the repetitive parts, you free up your team’s creativity and energy. It’s a win-win scenario.

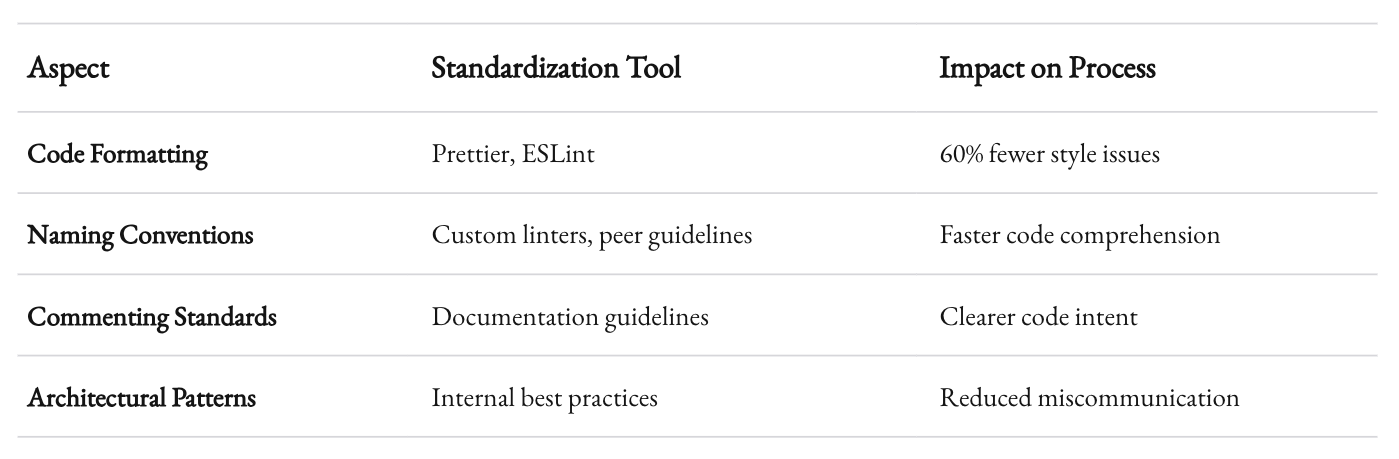

Creating a Consistent Code Style

A consistent code style is essential for a streamlined review process. When every developer writes code in a uniform style, the review process becomes more about the logic and architecture than formatting and naming conventions.

Standardizing Practices

At 1985, we set up detailed coding guidelines. These aren’t generic best practices taken off a blog post—they’re refined through real-world experience. We took into account the nuances of our technology stack and the needs of our distributed teams. The guidelines cover everything from variable naming and commenting practices to architectural patterns and error handling.

The guidelines are a living document. We revisit them quarterly. If a particular rule is slowing down development or causing unnecessary friction, we discuss it openly and adjust. The goal is not rigidity but a framework that supports clarity and consistency. When everyone adheres to the same standard, reviews become smoother and more predictable.

Tooling for Style Enforcement

We use automated linters and formatters as the first checkpoint. This ensures that the codebase stays consistent. Developers see immediate feedback if they stray from the guidelines. This automated enforcement reduces the burden on human reviewers who would otherwise need to point out style issues manually.

There’s also a culture of code ownership. Developers are responsible for ensuring their code meets the standards before it reaches review. Peer reviews then become an opportunity to discuss architecture and functionality, rather than style and formatting. This shift in focus not only speeds up the process but also leads to more meaningful discussions about design and performance.

Consistency in style isn’t just about aesthetics. It’s about making the codebase approachable for everyone. When the code is predictable, reviews can focus on the substance rather than the surface. This clarity is invaluable, especially in a distributed, outsourced environment where developers might only see each other’s work through the lens of a pull request.

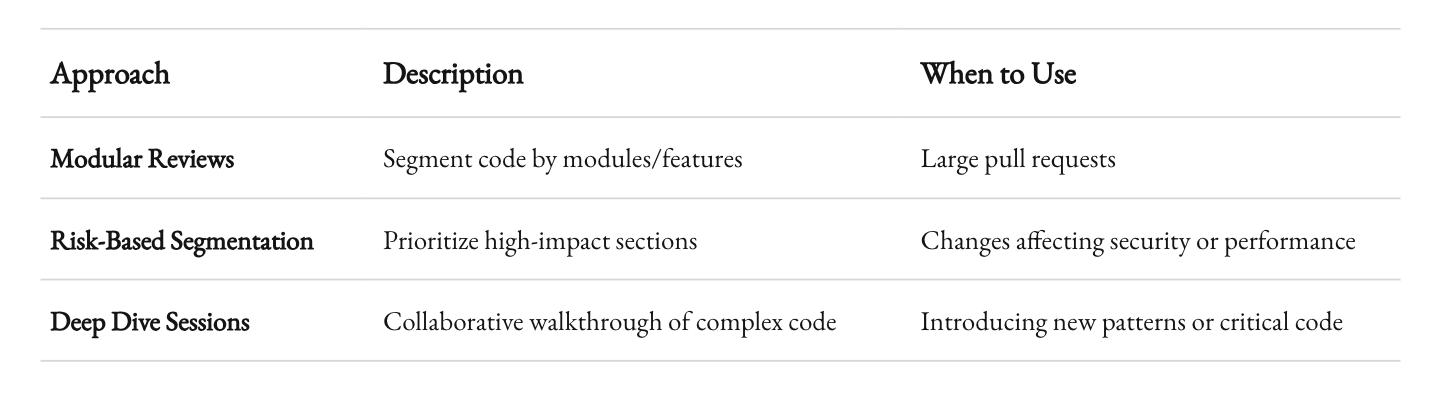

Prioritizing High-Impact Reviews

Not every line of code deserves the same level of scrutiny. We’ve learned to prioritize. At 1985, we focus our attention where it matters most.

Triage Your Reviews

One of the biggest challenges in code review is managing time. With multiple projects and tight deadlines, it’s impossible to give every change the same level of attention. We’ve developed a triage system that categorizes changes based on their potential impact.

- Critical Changes: Features or fixes that have a direct impact on system stability, security, or performance. These get a thorough review with multiple pairs of eyes.

- Routine Changes: Minor bug fixes or non-critical enhancements. These can have a lighter review cycle.

- Low-Impact Changes: Documentation updates, minor refactorings, or stylistic changes. These might go through an automated review with only spot-checking from a peer.

By categorizing reviews, we ensure that critical issues get the attention they deserve without bogging down the process with less impactful changes. This prioritization has reduced our overall review time by nearly 25% and allowed us to deploy high-stakes updates with confidence.
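Part of this triage could be automated by classifying a change from the files it touches. The Python sketch below is one possible approach, not a description of our exact tooling: the path patterns and the approvals-per-category mapping are hypothetical and would need tuning for each repository, and a human should still be able to override the result.

```python
from fnmatch import fnmatchcase

# Hypothetical patterns; adjust to your repository layout.
CRITICAL_PATTERNS = ["src/auth/*", "src/payments/*", "*/migrations/*", "*security*"]
LOW_IMPACT_PATTERNS = ["docs/*", "*.md", "*.txt"]

# Hypothetical review policy per category (low-impact relies on automation plus spot checks).
REQUIRED_APPROVALS = {"critical": 2, "routine": 1, "low-impact": 0}


def matches(path: str, patterns: list[str]) -> bool:
    """True if `path` matches any glob pattern (note: `*` also matches `/`)."""
    return any(fnmatchcase(path, pattern) for pattern in patterns)


def triage(changed_files: list[str]) -> str:
    """Classify a change as 'critical', 'routine', or 'low-impact'."""
    if any(matches(path, CRITICAL_PATTERNS) for path in changed_files):
        return "critical"
    if changed_files and all(matches(path, LOW_IMPACT_PATTERNS) for path in changed_files):
        return "low-impact"
    return "routine"


category = triage(["src/auth/session.py", "README.md"])
print(category, REQUIRED_APPROVALS[category])  # critical 2
```

A single critical file is enough to escalate the whole change, while "low-impact" requires every file to qualify; erring toward more scrutiny keeps a risky edit from hiding inside a documentation PR.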

Setting Expectations

It’s vital that everyone on the team understands the triage process. We hold regular training sessions where we discuss what constitutes a critical change versus a routine one. This clarity helps in setting expectations and reduces the friction when a review is fast-tracked or given a lighter touch. When everyone understands the rationale, the process is less likely to be met with resistance.

The feedback loop is shorter for high-impact changes, meaning developers get quicker insights on work that could affect the end product significantly. Meanwhile, lower-impact changes move quickly through the system. It’s all about smart resource allocation. This triage system is supported by our metrics dashboard, which shows the distribution of review types and helps us adjust our process as needed.

Encouraging Constructive Feedback

Feedback is the lifeblood of code reviews. But too often, feedback becomes a list of nitpicks rather than a meaningful dialogue that drives improvement. The challenge is to deliver criticism in a way that’s both constructive and actionable.

Focusing on Solutions

At 1985, we encourage our reviewers to focus on solutions, not just problems. If you spot an issue, suggest how to fix it. This approach turns the review into a learning opportunity. Instead of simply pointing out that something is wrong, you’re contributing to the solution. It’s a subtle shift, but it has a profound impact on morale and productivity.

For instance, when reviewing a particularly tricky piece of logic, one of our senior developers would not only flag the potential flaw but also offer an alternative approach. This kind of feedback is far more helpful than a bare “this doesn’t work” comment. It invites discussion and leads to better, more robust code.

Structured Commenting

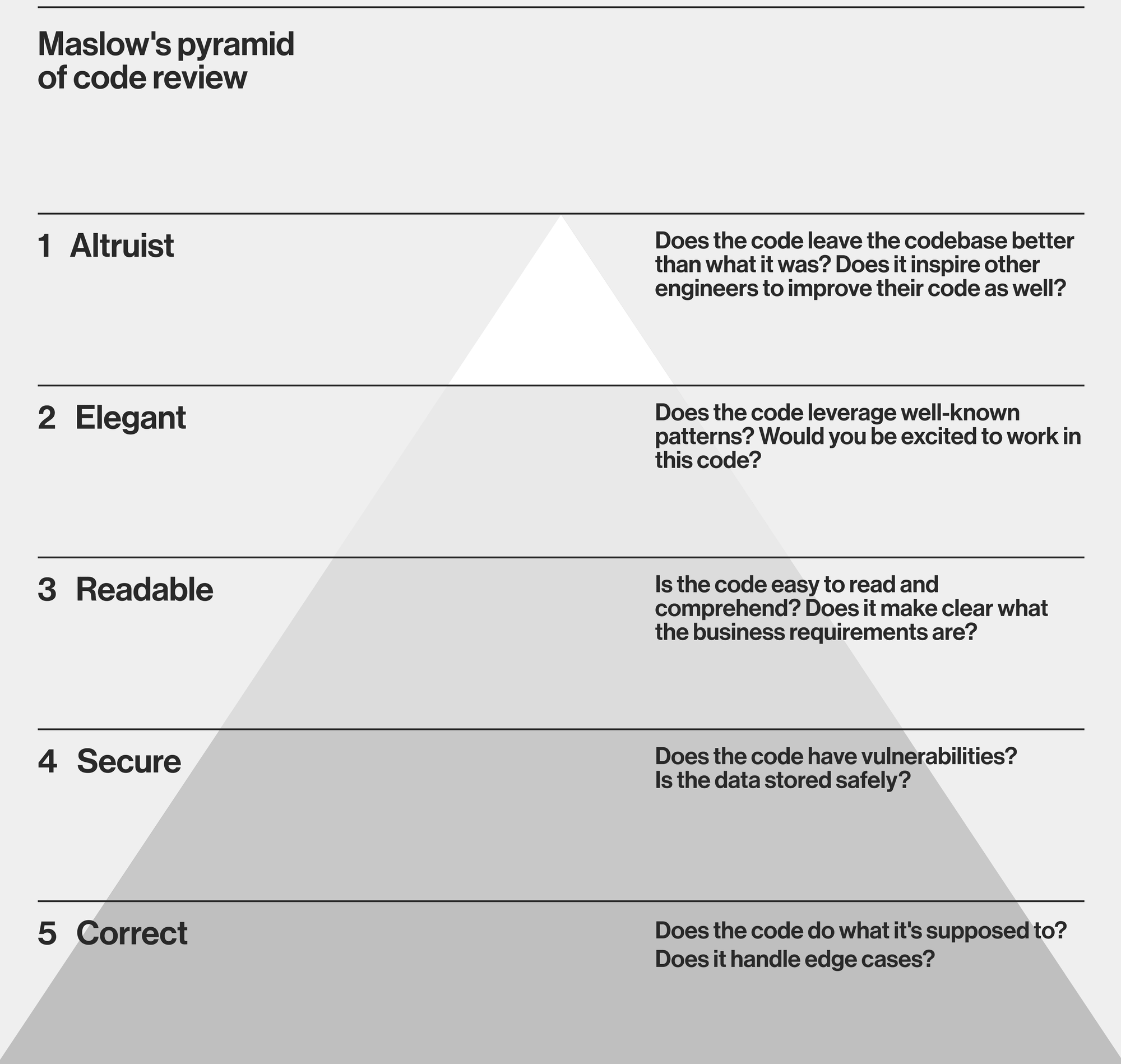

We’ve also refined our commenting style. Reviews should be clear and direct. We’ve adopted a structured format for comments:

- Observation: What is the issue?

- Impact: Why does it matter?

- Suggestion: How can it be improved?

This structure makes feedback clear and actionable. Developers know exactly what to focus on, and the conversation becomes a collaborative effort to elevate the code. This method has reduced back-and-forth exchanges and has shortened review cycles by eliminating ambiguity.
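One way to nudge reviewers toward the Observation / Impact / Suggestion format is a small helper that refuses to produce a comment with any part missing. This Python sketch assumes the review tool renders Markdown (as GitHub and GitLab comments do); the `ReviewComment` class and the example comment are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class ReviewComment:
    observation: str  # What is the issue?
    impact: str       # Why does it matter?
    suggestion: str   # How can it be improved?

    def render(self) -> str:
        """Return the comment as Markdown, rejecting empty sections."""
        for f in fields(self):
            if not getattr(self, f.name).strip():
                raise ValueError(f"'{f.name}' must not be empty")
        return (
            f"**Observation:** {self.observation}\n"
            f"**Impact:** {self.impact}\n"
            f"**Suggestion:** {self.suggestion}"
        )


comment = ReviewComment(
    observation="`fetch_orders()` retries forever when the API returns HTTP 500.",
    impact="A single upstream outage could tie up every worker.",
    suggestion="Cap the retries (for example, at 5) and back off between attempts.",
)
print(comment.render())
```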

Moreover, we hold quarterly “review retrospectives” where the team discusses what types of feedback have been most effective. We analyze patterns—what’s working, what’s not—and continuously refine our approach. It’s a dynamic process. The culture of constructive criticism has not only improved our code quality but has also strengthened our team’s cohesion.

Feedback that is respectful and solution-oriented builds trust. It transforms code reviews from potential conflict zones into opportunities for shared growth. This shift in mindset has been a game changer in our team dynamics.

Training and Onboarding

Streamlining code reviews isn’t just about process and tools—it’s also about people. The best process in the world won’t help if the team isn’t equipped to use it. At 1985, we invest in continuous training and onboarding to ensure every team member understands not just how to code, but how to review code effectively.

Continuous Learning

Our approach is simple: learning never stops. We hold monthly workshops focused on best practices, emerging trends, and deep dives into our codebase. These sessions are led by our most experienced developers. They share real-world examples, dissect tricky bugs, and discuss new tools that can enhance our workflow.

One memorable session focused on asynchronous code review strategies for distributed teams. The discussion highlighted practical tips for remote collaboration, like time-zone considerations and balancing synchronous and asynchronous communication. This kind of focused training has made a noticeable difference. Developers are not only more confident in their reviews but are also more receptive to feedback.

Onboarding New Team Members

The onboarding process is crucial. New hires need to understand the culture and the expectations around code reviews from day one. We’ve developed a comprehensive onboarding package that includes:

- A detailed overview of our code review process.

- Access to our coding guidelines and best practices documents.

- Recorded training sessions from senior developers.

- A mentorship program where new hires shadow experienced reviewers.

This approach ensures that even if someone is new to outsourced development or distributed teams, they’re not overwhelmed by the process. Instead, they’re given the tools and context they need to succeed. A robust onboarding process translates into faster ramp-up times and fewer initial mistakes. The cost of onboarding is recouped many times over in the form of smoother reviews and higher quality code.

The investment in training pays off not only in technical skill but also in fostering a culture of continuous improvement. When every team member is on the same page, reviews become a natural part of the development cycle, not an afterthought.

Handling Complex Reviews

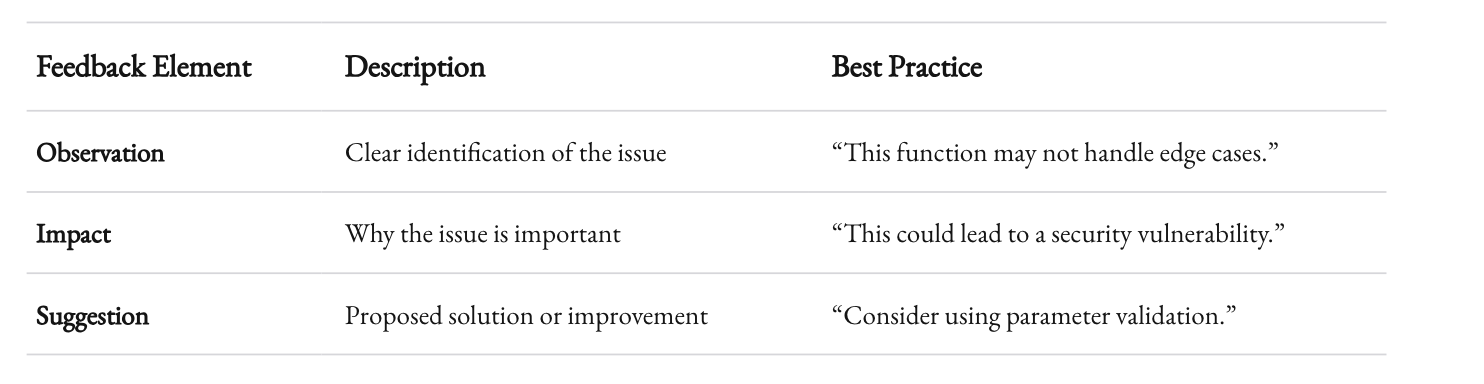

Not all reviews are created equal. Some pull requests are straightforward. Others, however, are complex, spanning multiple modules or touching on critical areas of the system. Handling these reviews requires a nuanced approach.

Breaking Down the Review

When faced with a complex review, the first step is to break it down into manageable parts. Instead of tackling a massive pull request in one go, we segment it:

- Modular Reviews: Divide the changes by module or feature. Each segment is reviewed separately, focusing on its specific context.

- Risk-Based Segmentation: Prioritize sections that impact security, performance, or critical functionality. These get more attention, while less critical sections can be reviewed in parallel.

- Incremental Feedback: Encourage developers to submit smaller, more frequent pull requests. This reduces the complexity of any single review and helps maintain momentum.

One senior developer once told me, “I’d rather do ten small reviews than one monstrous one.” And he’s right. By breaking down the review, we make it easier to spot issues and give targeted feedback. The segmentation method has helped us catch subtle bugs that might have been overlooked in a sprawling code dump.
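The modular and incremental approaches can be supported by a small helper that groups a pull request’s changed files by top-level directory and flags changes too big to review in one sitting. This is a Python sketch under assumptions: modules are taken to be top-level directories, and the 400-line limit is a hypothetical threshold, not a figure from our process.

```python
from collections import defaultdict

MAX_CHANGED_LINES = 400  # hypothetical size limit for a single review


def split_by_module(changes: dict[str, int]) -> dict[str, list[str]]:
    """Group changed paths by top-level directory.

    `changes` maps each file path to the number of lines changed in it.
    Files at the repository root are grouped under "(root)".
    """
    modules: dict[str, list[str]] = defaultdict(list)
    for path in sorted(changes):
        module = path.split("/", 1)[0] if "/" in path else "(root)"
        modules[module].append(path)
    return dict(modules)


def too_large(changes: dict[str, int], limit: int = MAX_CHANGED_LINES) -> bool:
    """True if the total change size suggests splitting the pull request."""
    return sum(changes.values()) > limit


changes = {"api/routes.py": 120, "api/models.py": 80, "web/app.ts": 300, "README.md": 5}
for module, paths in split_by_module(changes).items():
    print(module, paths)
print("split suggested:", too_large(changes))  # split suggested: True
```

Each group can then be assigned to the reviewer who knows that module best, and reviewed in parallel.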

Deep Dive Sessions

For particularly challenging areas, we sometimes organize deep dive sessions. These are collaborative meetings where the author of the code walks the team through the changes. The session is interactive. Questions fly, ideas bounce around, and by the end, there’s a shared understanding of the code’s intent and functionality.

These sessions are especially useful for critical code or when a new technology or design pattern is introduced. They also serve as a valuable training opportunity. The transparency and collective problem-solving build trust and ensure that the review isn’t just about finding faults but about enhancing overall quality.

Complex reviews demand extra time and effort. But when handled correctly, they turn into opportunities to elevate the entire team’s skill set. It’s about shifting from a mindset of “fixing problems” to “building knowledge.”

The Role of Documentation

Documentation is often overlooked in the rush to ship code. Yet, it plays a crucial role in streamlining reviews. When code is well-documented, reviewers can understand the context and intention behind changes more quickly.

In-Line Documentation

We emphasize in-line documentation as part of our coding standards. Every function, class, or module should have clear comments explaining its purpose, expected inputs, and outputs. This is not about redundancy; it’s about clarity. When every function, class, and module comes with a clear explanation of its intent, the review process becomes less of a guessing game.
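Missing documentation can be caught before a reviewer ever asks about it. Assuming a Python codebase (for JavaScript, a linter rule would play the same role), a few lines built on the standard `ast` module can list functions and classes that lack a docstring. The `undocumented` helper and the sample source are hypothetical.

```python
import ast


def undocumented(source: str) -> list[str]:
    """Return the names of functions and classes in `source` that lack a docstring."""
    missing = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            if ast.get_docstring(node) is None:
                missing.append(node.name)
    return missing


SAMPLE = '''
def parse_rate(text):
    """Parse a rate such as "5/s" and return requests per second."""
    count, unit = text.split("/")
    return float(count) / {"s": 1, "m": 60}[unit]

class Cache:
    def get(self, key):
        """Return the cached value for `key`, or None if absent."""
        return None

def retry(fn):
    return fn()
'''

print(undocumented(SAMPLE))  # ['Cache', 'retry']
```

Run in CI, a check like this turns "please add a docstring" from a review comment into automated feedback the author sees first.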

External Documentation

In addition to in-line comments, we maintain robust external documentation. This includes:

- API Documentation: Detailed explanations of public endpoints, parameters, and usage examples.

- Architecture Overviews: Diagrams and written summaries of how different parts of the system interact.

- Change Logs: A record of major changes, including the rationale and impact.

Good documentation acts as a roadmap. When a reviewer understands the broader context, they can focus on the details that matter. It also speeds up onboarding and helps in knowledge transfer. When the documentation is up-to-date, it reduces the back-and-forth during reviews, as developers have a solid reference point.

In my experience, well-documented code can reduce review time by up to 30%. It cuts down on the need for clarifying questions and minimizes the chance of misunderstandings. The goal is not to document for documentation’s sake, but to create a living resource that evolves alongside the codebase.

Integrating Feedback Loops

No process is perfect. Continuous improvement requires a robust feedback loop that captures insights from every review session. At 1985, we’ve built a system to gather, analyze, and act on feedback.

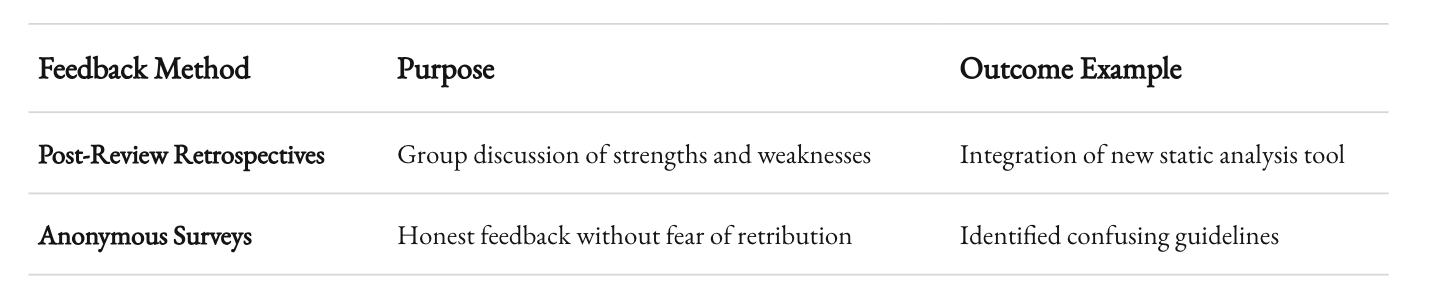

Post-Review Retrospectives

After major sprints, we hold retrospective meetings dedicated solely to our code review process. The agenda is simple:

- What worked well?

- What could have been better?

- What did we learn?

These discussions are candid. We share both successes and failures. For example, a retrospective might reveal that our automated tools missed a critical security check or that a particular guideline is causing confusion. Armed with this information, we iterate on our process.

One such session led us to integrate a new static analysis tool that reduced false positives by 25%. The key is to treat every review as a learning opportunity. This continuous improvement mindset is embedded in our culture.

Anonymous Surveys

Sometimes, people are more honest behind a veil of anonymity. We periodically distribute surveys asking for honest feedback on the review process. The results are eye-opening. Developers point out inefficiencies that might not surface in group discussions. It’s a safe space for constructive criticism, and the insights have been invaluable in refining our approach.

Feedback loops ensure that the process remains dynamic and adaptable. They create a mechanism for continuous, incremental improvement rather than a one-time overhaul. This commitment to learning keeps our code review process as agile as the software we develop.

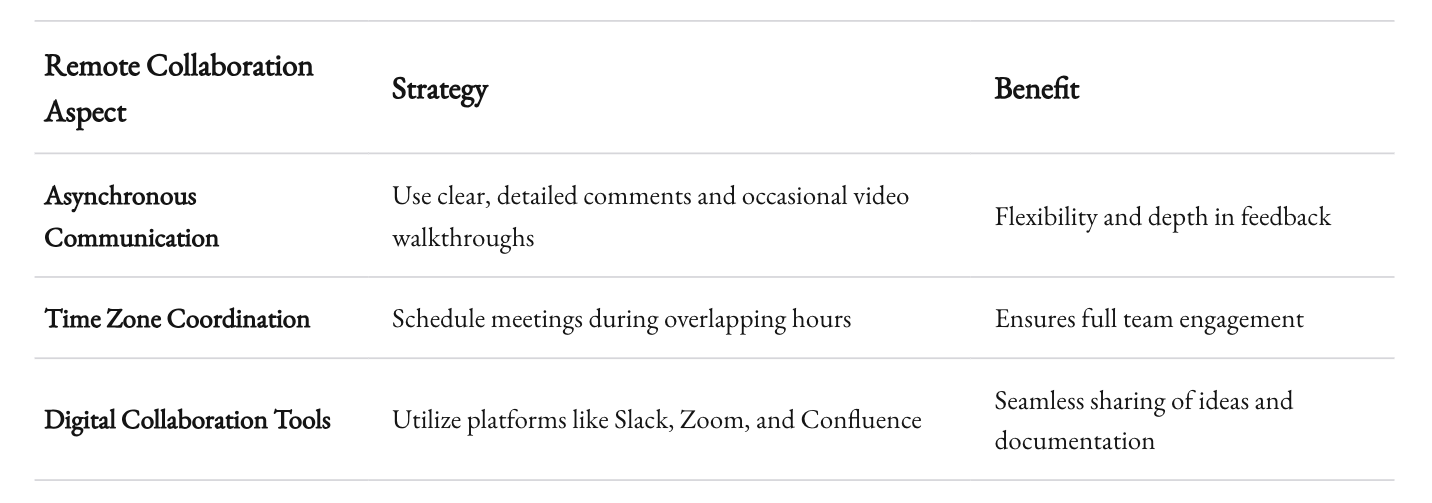

Embracing Remote Collaboration

In today’s globalized tech landscape, remote work is not a trend—it’s a reality. At 1985, where our teams are scattered across different time zones, streamlining the code review process requires special attention to remote collaboration.

Asynchronous Communication

Remote teams thrive on asynchronous communication. Reviews need not happen in real time. Instead, developers can review code on their own schedule. This flexibility is crucial. It allows for thoughtful, uninterrupted feedback and accommodates different work hours.

However, asynchronous reviews come with challenges. Without face-to-face interaction, misinterpretations can occur. To counteract this, we encourage clear, precise comments. We also use video walkthroughs for particularly complex changes. A short recorded explanation can save hours of back-and-forth text and ensure that everyone understands the context.

Time Zone Awareness

A simple yet powerful strategy is time zone awareness. When scheduling synchronous review sessions, we ensure they fall within overlapping hours for all team members. This small adjustment has a big impact on productivity. It ensures that everyone has a chance to participate and that no one is left out of crucial discussions.
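Finding the overlapping hours is simple arithmetic once everyone’s working day is converted to UTC. The Python sketch below assumes fixed UTC offsets (daylight saving time is ignored; the standard `zoneinfo` module would handle it in practice) and that everyone works on the same local calendar date, which stops holding for teams spread very far apart. The team in the example is hypothetical.

```python
from datetime import date, datetime, time, timedelta, timezone


def shared_window(day: date, members: list[tuple[float, time, time]]):
    """Return the (start, end) UTC window when every member is working, or None.

    Each member is (utc_offset_hours, local_start, local_end) for the given day.
    """
    starts, ends = [], []
    for offset_hours, start, end in members:
        tz = timezone(timedelta(hours=offset_hours))
        starts.append(datetime.combine(day, start, tzinfo=tz).astimezone(timezone.utc))
        ends.append(datetime.combine(day, end, tzinfo=tz).astimezone(timezone.utc))
    latest_start, earliest_end = max(starts), min(ends)
    return (latest_start, earliest_end) if latest_start < earliest_end else None


team = [
    (1, time(9), time(17)),    # UTC+1, 09:00-17:00 local
    (5.5, time(9), time(18)),  # UTC+5:30, 09:00-18:00 local
]
window = shared_window(date(2024, 6, 3), team)
if window:
    print(f"{window[0]:%H:%M}-{window[1]:%H:%M} UTC")  # 08:00-12:30 UTC
```

Adding a third member at UTC-5 working 09:00-17:00 local leaves no common window at all, which is exactly the situation where asynchronous reviews and recorded walkthroughs have to carry the load.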

Remote collaboration demands discipline and clear communication. It’s not enough to have the right tools; you must also cultivate habits that bridge the physical divide. At 1985, we view remote work as an opportunity to refine our process, making it robust enough to handle the challenges of distributed teams.

![Code Review: 12 Best Practices [2024] | DevCom](https://devcom.com/wp-content/uploads/2024/10/code-review-1.jpg.webp)

Balancing Rigor and Flexibility

The essence of streamlining code reviews is finding the sweet spot between rigor and flexibility. Too much rigidity stifles innovation and delays progress. Too much flexibility, on the other hand, can lead to chaos and inconsistency.

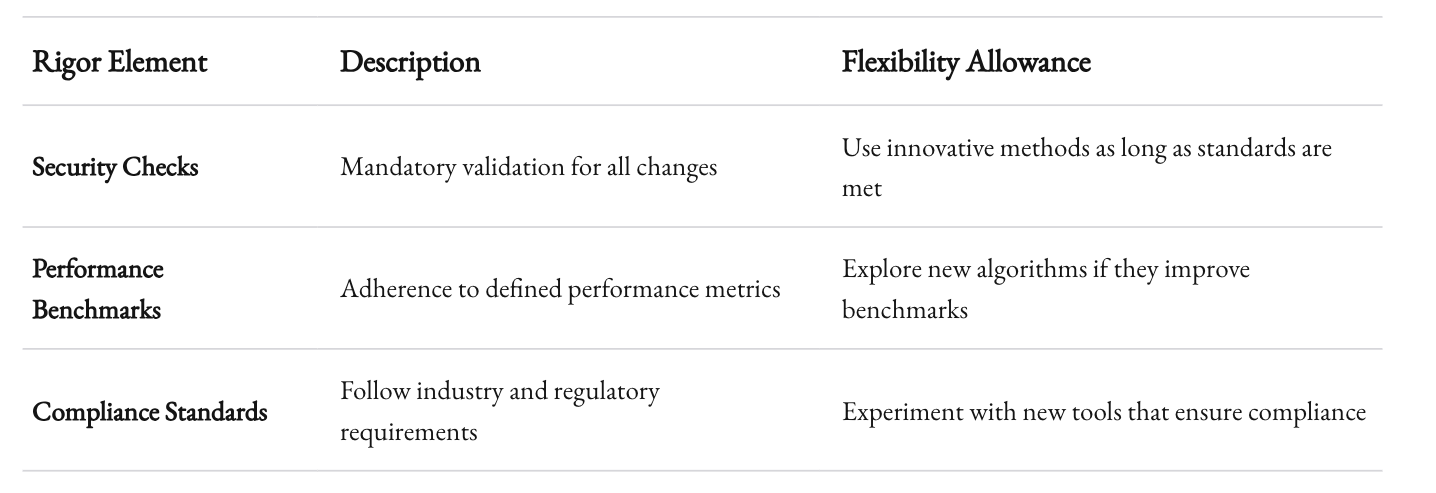

Establishing Non-Negotiables

There are a few non-negotiable elements in our process:

- Security Checks: Every change must pass rigorous security validation.

- Performance Benchmarks: Code that affects performance is scrutinized thoroughly.

- Compliance Standards: Adherence to industry standards and regulations is a must.

These are the bedrock of our review process. They can’t be compromised. But aside from these, there’s room for flexibility. We encourage creative solutions and innovative approaches. When a developer proposes an unconventional solution, we don’t dismiss it outright. Instead, we assess it against our non-negotiables and then allow room for exploration.
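The split between non-negotiables and everything else maps naturally onto a merge gate: failures in the non-negotiable checks block the merge, while other findings are reported as warnings for the reviewer to weigh. In this Python sketch the check names are hypothetical labels for whatever automated jobs back each non-negotiable, and a non-negotiable check that did not run is treated as failed.

```python
NON_NEGOTIABLE = ("security", "performance", "compliance")


def merge_decision(results: dict[str, bool]) -> tuple[bool, list[str]]:
    """Decide whether a change may merge, given a mapping of check name -> passed.

    Missing non-negotiable results count as failures, so a skipped
    security scan cannot slip through silently.
    """
    blocking = [name for name in NON_NEGOTIABLE if not results.get(name, False)]
    warnings = sorted(
        name for name, passed in results.items()
        if not passed and name not in NON_NEGOTIABLE
    )
    messages = [f"BLOCKING: {name}" for name in blocking]
    messages += [f"warning: {name}" for name in warnings]
    return (not blocking, messages)


print(merge_decision({"security": True, "performance": True,
                      "compliance": True, "naming-style": False}))
# (True, ['warning: naming-style'])
```

Under this design, a job that does not apply to a change (say, a performance benchmark for a documentation-only edit) should report a pass rather than be omitted, so that absence always signals a problem.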

Adaptability in Action

In one instance, a developer suggested a new asynchronous handling pattern that deviated from our established norms. Initially, there was resistance. But rather than shutting down the idea, we ran a controlled experiment. We set up a dedicated review and testing phase to evaluate its impact. The results were impressive—a 15% improvement in processing speed with no additional complexity. This flexibility, balanced with our core standards, led to an evolution in our coding practices.

The balance between rigor and flexibility is an ongoing experiment. It requires open-mindedness and a willingness to adapt. This approach not only streamlines our code reviews but also keeps us at the forefront of industry innovation.

The Future of Code Reviews

Looking ahead, I believe that code reviews will continue to evolve. New tools, methodologies, and cultural shifts are already on the horizon. At 1985, we are committed to staying ahead of the curve.

Emerging Trends

- AI-Assisted Reviews: Tools powered by machine learning are already making their way into the development process. These systems can identify patterns, suggest improvements, and even predict potential bugs before a human review. Early trials in our team have shown promising results, with AI catching up to 30% of issues that might have slipped through traditional reviews.

- Collaborative Platforms: As remote work solidifies its place, the platforms we use will become even more integral to our process. Expect better integration between code repositories, chat applications, and project management tools. These integrations will make the review process more seamless.

- Real-Time Analytics: Future systems will offer real-time insights into code quality, review efficiency, and team performance. These analytics will not only highlight areas for improvement but also celebrate successes, fostering a culture of continuous learning.

Preparing for Change

Embracing these trends means being proactive. At 1985, we are already experimenting with AI-driven code analysis tools. We’re integrating real-time dashboards that provide immediate feedback on review metrics. The goal is to create a system where every review is not just a checkpoint but a learning moment that drives future innovation.

We must remain agile. The tech industry is in constant flux, and our processes need to evolve to keep pace. Continuous experimentation, paired with a willingness to discard outdated practices, is essential. By staying adaptable, we can ensure that our code reviews not only remain efficient but also contribute to our overall competitive edge.

Recap

Streamlining the code review process is not about cutting corners. It’s about creating a system that respects time, fosters collaboration, and drives quality. At 1985, we’ve learned that the secret lies in balancing automation with human insight, consistency with flexibility, and rigor with a constructive culture.

Every code review is an opportunity—a chance to share knowledge, catch issues early, and build a stronger, more resilient codebase. It’s a process that requires clear objectives, well-defined metrics, and a commitment to continuous improvement. From setting clear goals to embracing the latest tools, each step is a building block towards a more efficient and effective review process.

Our journey has not been without challenges. There were times when reviews felt like endless debates, when metrics showed slow progress, and when miscommunication threatened to derail a project. But through it all, we persevered. We listened to our team, adapted our methods, and kept our focus on what truly matters: building software that stands the test of time.

If you’re struggling with code reviews, remember this: streamline what you can, invest in your people, and always keep the conversation open. The path to efficiency is not a one-size-fits-all solution—it’s a tailored process that evolves with your team and your technology. Our experience at 1985 is a testament to that philosophy.

Let this be a call to action. Reexamine your process. Challenge the status quo. Embrace new tools and techniques. And most importantly, value the human element behind every line of code. When reviews become a bridge rather than a barrier, your entire development process transforms.

Thank you for taking the time to read this. I hope these insights help you streamline your own code review process. Here’s to building better software—one review at a time.