Implementing ChatGPT in React Native Apps

Want to make your app smarter? Learn the step-by-step process of adding ChatGPT to your React Native app and see it come alive!

Implementing cutting-edge technology in mobile applications can feel like magic. One minute you have a fairly standard app, and the next, it’s delighting users with smart, human-like interactions. That’s the power of integrating something like ChatGPT. It’s like giving your app a personality and the ability to converse with its users. Today, we’ll take a deep dive into how you can integrate ChatGPT into your React Native apps. Let’s make your app a little smarter and, dare I say, a bit more exciting.

I run an outsourced software development company called 1985, and I can tell you—clients today want apps that do more. Not just buttons and pages, but applications that understand, respond, and evolve. This is where ChatGPT comes into play. It’s a nuanced tool, not just an “AI gimmick,” but a real, transformative experience. Buckle up, we’re going on a pretty detailed ride here. And no, we’re not going to spend paragraphs on the basics.

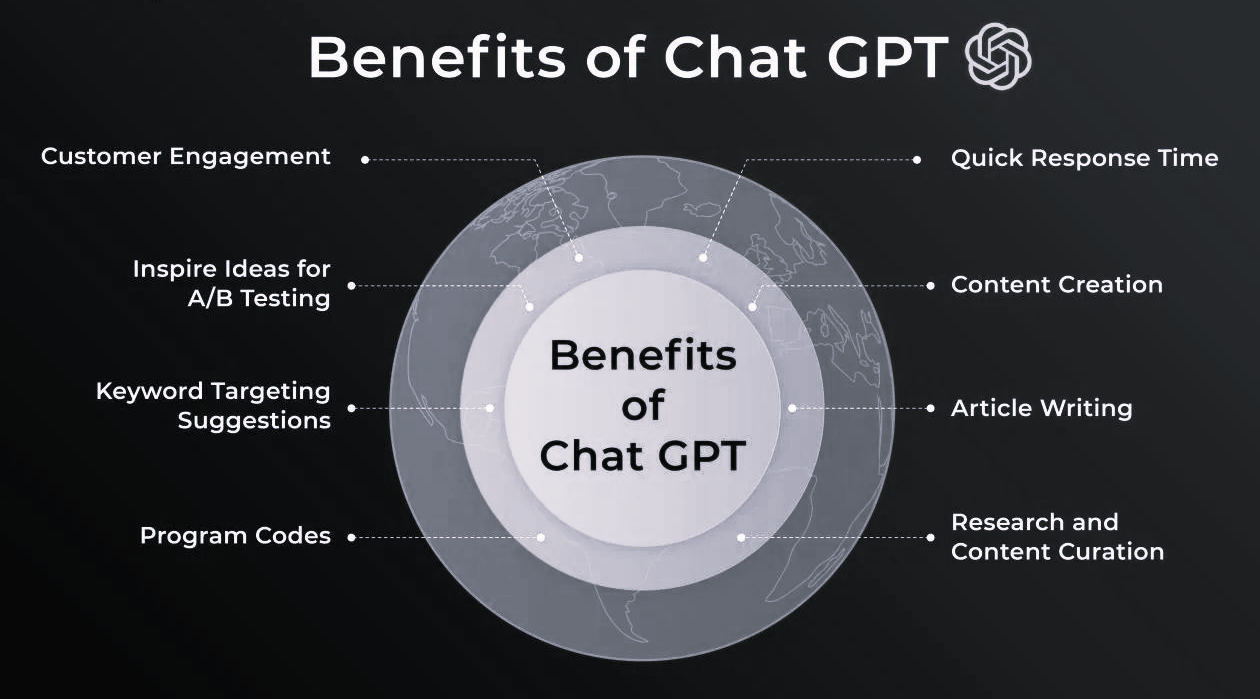

Why ChatGPT?

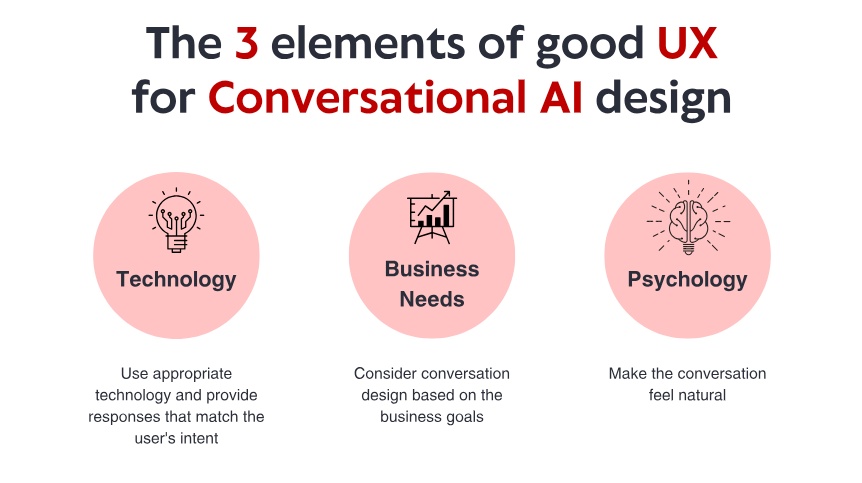

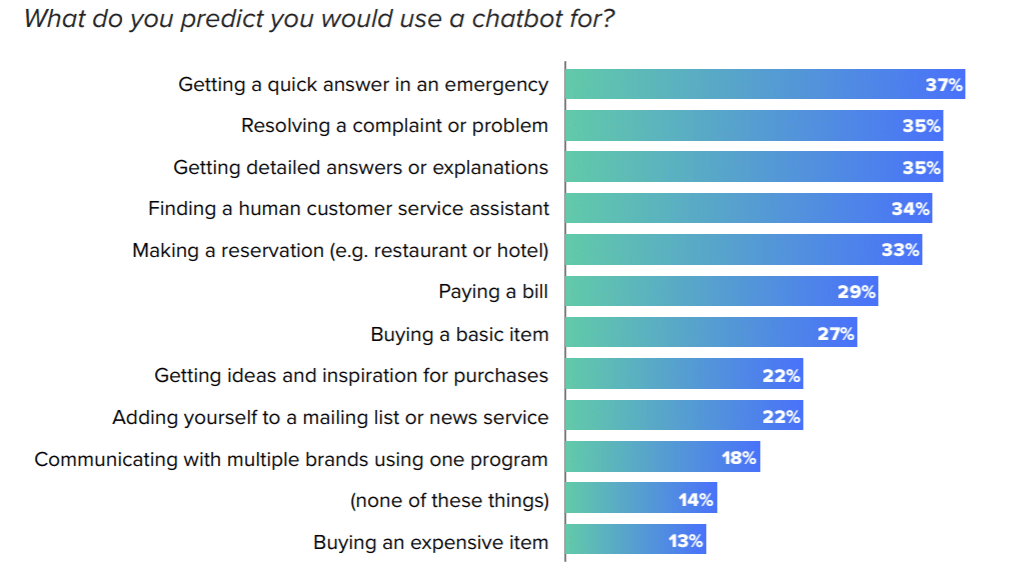

ChatGPT isn’t just another buzzword in the tech sphere; it’s a leap forward in natural language processing (NLP). Think about this: users have grown accustomed to digital assistants that "understand" them. From Siri to Alexa, from WhatsApp auto-replies to customer service bots, conversational AI is shaping user expectations. Adding a ChatGPT-driven feature to your React Native app helps you meet those expectations. It turns a static experience into something dynamic and, for some users, downright magical.

In the context of mobile apps, this could mean anything from answering user questions to providing instant customer support, personalizing user journeys, or even just offering some friendly, low-key banter. Now, the technical implementation does require a fair amount of attention to detail. It’s nuanced—but it’s nothing you can’t do with a solid plan in place.

According to a survey by Salesforce, 84% of customers say that being treated like a person, not a number, is very important to winning their business. An AI tool like ChatGPT can help users feel heard, not just acknowledged.

Architectural Considerations

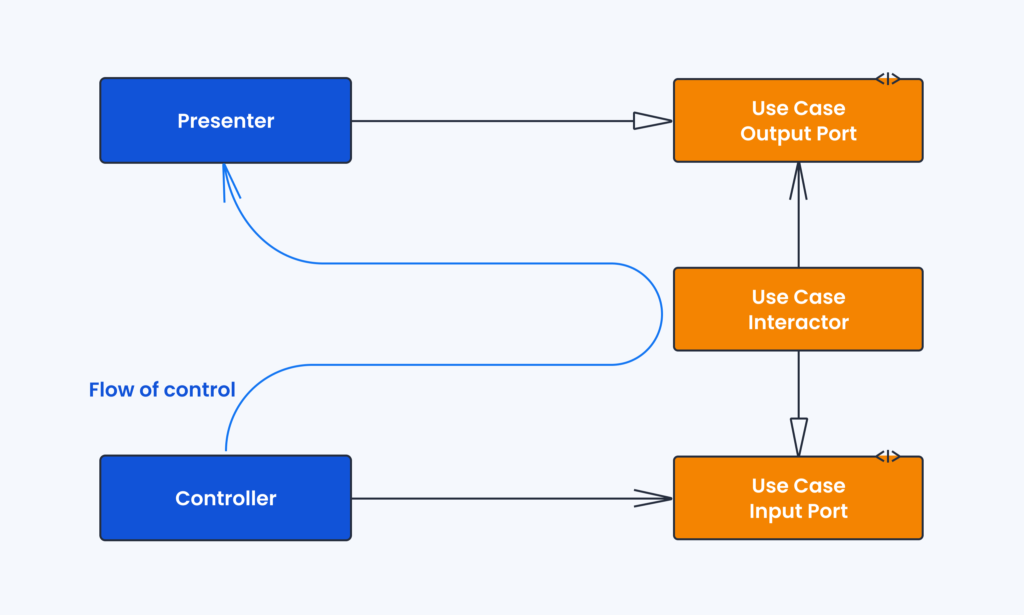

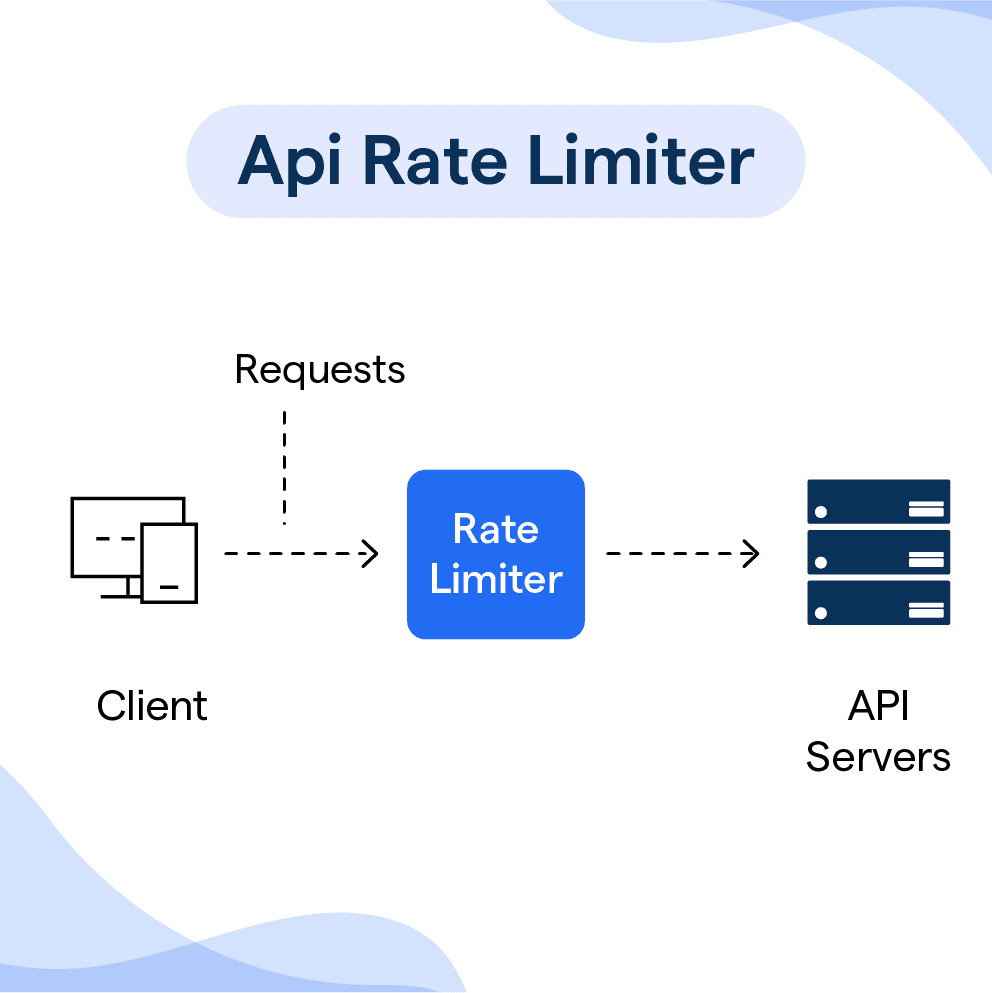

Before jumping into the code, let’s talk about the architecture. Adding ChatGPT to a React Native app isn’t just about calling an API. You’re effectively introducing an AI-driven dialogue system into a client-server architecture, which means considering both frontend integration and backend support.

Think of it like this: The frontend (your React Native app) acts as a microphone and a speaker. It listens to what the user says (via text input or even voice) and plays back the responses. OpenAI’s servers act as the brain, doing the heavy lifting: processing, interpreting, and generating the response. Your own backend, covered in the implementation steps, sits in between and relays messages.

Your task as a developer is to ensure the communication between these parts is seamless. You’ll also need to think about other aspects such as rate limiting, latency, authentication, and error handling. Depending on your user base, scalability could become a major challenge. So plan accordingly—implement some solid logging and monitoring, use a caching mechanism if it makes sense, and make sure your backend services can handle the chat volume.

Potential Challenges

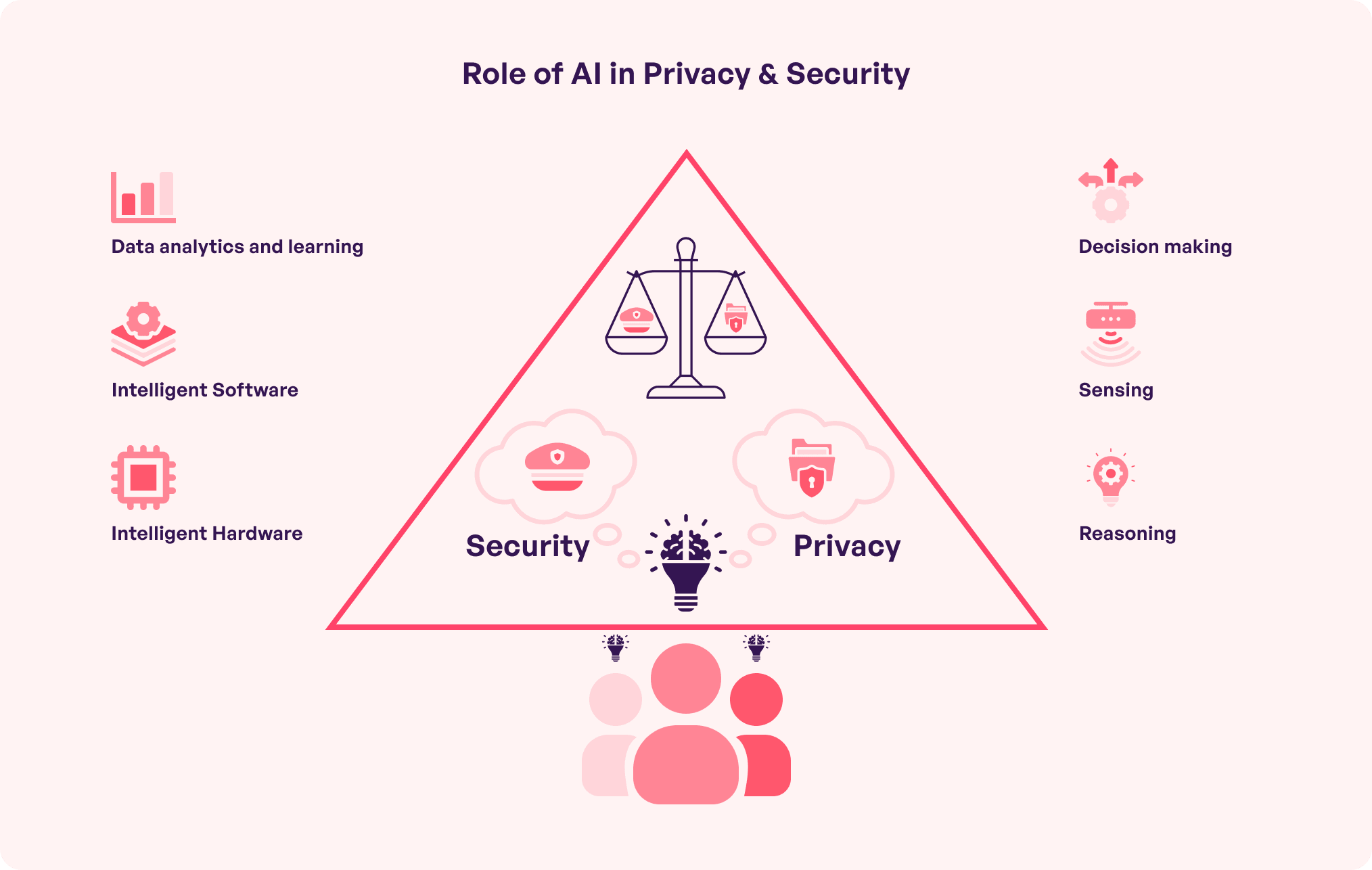

There are some challenges you’ll need to deal with, data privacy especially. In some industries, sharing chat data with an external API can be problematic. You may need to consider running a model in a more isolated environment, such as a self-hosted deployment, though this is often impractical for small teams or early-stage products.

Rate limiting is another major issue. OpenAI’s API has rate limits that could restrict how often users can interact with ChatGPT. If your app is wildly successful (fingers crossed), you’ll need to factor in the cost and usage limits.

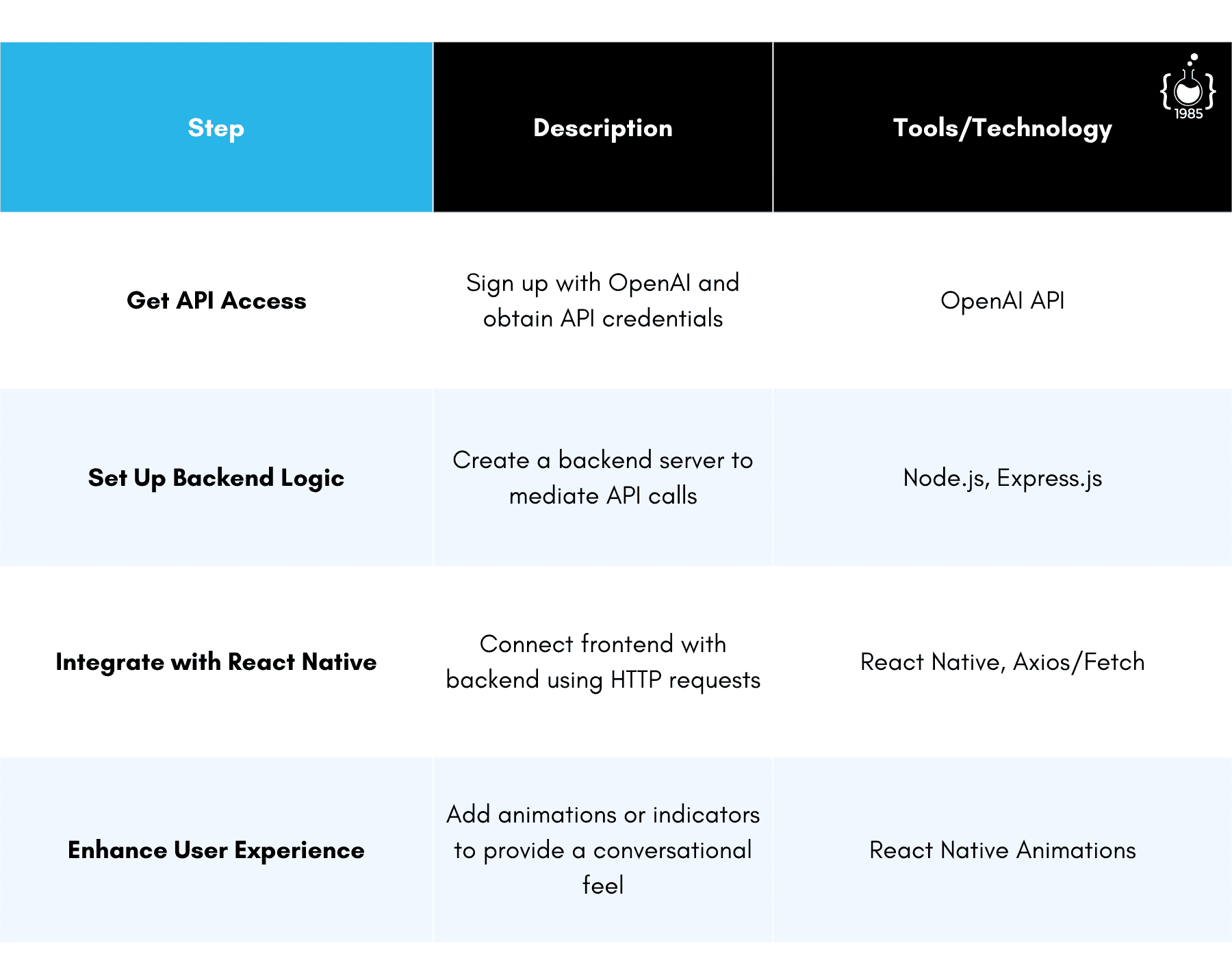

Step-by-Step Implementation

So, you’ve decided to do this. You’re ready to bring ChatGPT into your React Native app. Here’s how we’ll get it done:

Sign Up and Get API Access: First things first, you need an API key from OpenAI. Head over to their website, create an account, and get your API credentials. Simple enough. Treat this key like your most precious secret, though—you don’t want unauthorized people making expensive API calls on your behalf.

React Native Integration: With a backend proxy in place (its setup is covered in the next step), let’s wire your React Native app to it. You’ll want to use the fetch API or something like axios to send messages from your mobile app to your backend. Here’s a simple example:

```jsx
import React, { useState } from 'react';
import { View, TextInput, Button, Text, StyleSheet } from 'react-native';
import axios from 'axios';

// Placeholder URL: point this at your own backend proxy (use HTTPS in production).
const CHAT_URL = 'https://your-backend-url.com/chat';

const ChatScreen = () => {
  const [input, setInput] = useState('');
  const [response, setResponse] = useState('');

  const sendMessage = async () => {
    const message = input.trim();
    if (!message) return; // ignore empty submissions

    try {
      const res = await axios.post(CHAT_URL, { message });
      // The backend relays OpenAI's completion payload unchanged.
      setResponse(res.data.choices[0].text.trim());
    } catch (error) {
      console.error(error);
      setResponse('Something went wrong. Please try again.');
    }
  };

  return (
    <View style={styles.container}>
      <TextInput
        style={styles.input}
        value={input}
        onChangeText={setInput}
        placeholder="Type your message here"
      />
      <Button title="Send" onPress={sendMessage} />
      {response ? <Text style={styles.response}>{response}</Text> : null}
    </View>
  );
};

const styles = StyleSheet.create({
  container: { padding: 20 },
  input: { borderWidth: 1, padding: 10, marginBottom: 10 },
  response: { marginTop: 20, fontSize: 16 },
});

export default ChatScreen;
```

In this component, we have a basic UI: a text input, a button, and a space to display ChatGPT’s response. When the user sends a message, it gets sent to the backend, which in turn forwards it to OpenAI. The response is set in state and displayed to the user.

Set Up Backend Logic: Even though we’re building a mobile app, it’s wise to have a backend to mediate these API calls. The backend acts as a proxy between your React Native app and OpenAI’s API. This helps protect your API key and allows you to perform additional tasks like logging or modifying the user prompt to improve responses. You could set up a simple Express.js server to handle this. Here’s a quick example:

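```javascript
const express = require('express');
const axios = require('axios');

const app = express();
app.use(express.json());

app.post('/chat', async (req, res) => {
  const { message } = req.body;

  try {
    const response = await axios.post(
      'https://api.openai.com/v1/completions',
      {
        // text-davinci-003 has been retired; gpt-3.5-turbo-instruct is assumed
        // here as a replacement that works with this legacy endpoint.
        model: 'gpt-3.5-turbo-instruct',
        prompt: message,
        max_tokens: 150,
      },
      {
        headers: {
          // Read the key from the environment rather than hardcoding it.
          Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
        },
      }
    );

    // Relay OpenAI's payload; the app reads choices[0].text from it.
    res.send(response.data);
  } catch (error) {
    // Log the API's error body or message, not the request config (it holds the key).
    console.error(error.response?.data ?? error.message);
    res.status(500).send('Something went wrong!');
  }
});

app.listen(3000, () => console.log('Server running on port 3000'));
```

This small snippet is your middleman: it takes in a message, forwards it to OpenAI, and sends the response back to your app.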
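Because the proxy is the only place that touches the API key and sees raw user input, it is a natural spot for a couple of guards. The sketch below is one way to do that, not a prescribed setup: `loadApiKey`, `parseChatRequest`, and the 2000-character cap are hypothetical names and values chosen for illustration, and the `OPENAI_API_KEY` environment variable is an assumption about how you deploy.

```javascript
// Hypothetical helpers for the /chat proxy; names and limits are illustrative.
const MAX_MESSAGE_CHARS = 2000; // assumed cap, tune for your app

// Read the OpenAI key from the environment and fail fast if it is missing.
function loadApiKey(env = process.env) {
  const key = env.OPENAI_API_KEY;
  if (typeof key !== 'string' || key.trim() === '') {
    throw new Error('OPENAI_API_KEY is not set');
  }
  return key.trim();
}

// Validate the JSON body sent by the app before anything is forwarded to OpenAI.
// Returns { ok: true, message } or { ok: false, status, error }.
function parseChatRequest(body) {
  const message = body && body.message;
  if (typeof message !== 'string' || message.trim() === '') {
    return { ok: false, status: 400, error: 'message must be a non-empty string' };
  }
  if (message.length > MAX_MESSAGE_CHARS) {
    return {
      ok: false,
      status: 413,
      error: `message exceeds ${MAX_MESSAGE_CHARS} characters`,
    };
  }
  return { ok: true, message: message.trim() };
}
```

Inside the route handler, this could be used as `const parsed = parseChatRequest(req.body); if (!parsed.ok) return res.status(parsed.status).send(parsed.error);`, with `loadApiKey()` called once at startup so a missing key is caught before the first request.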

Important Factors to Consider

Rate Limiting and User Experience

Remember that OpenAI’s API has rate limits, which means you need to carefully handle user expectations. If you’re going to deploy ChatGPT in a commercial product, be mindful of how often you allow users to hit that button. A poor experience here can make your app seem unreliable.

You could implement a queue system—or perhaps just let users know when they’re sending messages too quickly. A small notification that says, "Hey, give us a moment to think" can go a long way in keeping users engaged without frustrating them.
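The "give us a moment" idea can be sketched as a small client-side cooldown. This is a minimal illustration under assumptions: `createSendGate` is a hypothetical helper, and the two-second interval in the usage note is an arbitrary value, not a figure taken from OpenAI's limits.

```javascript
// Hypothetical client-side cooldown: allows one send per `minIntervalMs`.
// `now` is injectable so the logic can be exercised without real timers.
function createSendGate(minIntervalMs, now = Date.now) {
  let lastSentAt = -Infinity;

  return function trySend() {
    const t = now();
    // Time still remaining until the next send is allowed.
    const waitMs = Math.max(0, lastSentAt + minIntervalMs - t);
    if (waitMs > 0) {
      return { allowed: false, waitMs };
    }
    lastSentAt = t;
    return { allowed: true, waitMs: 0 };
  };
}
```

Create the gate once, outside the component (for example `const trySend = createSendGate(2000);`), and call it at the top of `sendMessage`. When `allowed` is false, show the friendly notice instead of hitting the backend.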

Data Privacy & Token Management

From a security perspective, you want to ensure that user data—in this case, conversation data—is handled appropriately. Ideally, you’re not saving raw chat logs unless necessary, and you’re certainly not exposing any sensitive data to third parties. As mentioned, having your backend handle the API communication is a smart move. You don’t want API keys hardcoded into the mobile app, as they could be extracted by a malicious actor.

OpenAI charges based on the number of tokens processed, counting both the prompt and the generated response (think of tokens as pieces of a word). Keeping user prompts short and concise helps keep your costs down, as does capping the response length with max_tokens. Your backend might even preprocess user inputs, removing unnecessary fluff, before passing them along to OpenAI.
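As a rough sketch of that preprocessing step, the backend could collapse whitespace and cap the prompt length before forwarding it. Both function names are hypothetical, the 1000-character default is an arbitrary assumption, and the four-characters-per-token figure is only a common rule of thumb for English text; use a real tokenizer when you need exact counts.

```javascript
// Rough heuristic only: about 4 characters per token for English text.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

// Collapse runs of whitespace and cap the prompt length before it is sent on.
function preprocessPrompt(raw, maxChars = 1000) {
  const cleaned = raw.replace(/\s+/g, ' ').trim();
  return cleaned.length > maxChars ? cleaned.slice(0, maxChars) : cleaned;
}
```

Logging `estimateTokens(preprocessPrompt(message))` alongside each request is a cheap way to see where your token budget is going before you invest in anything more precise.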

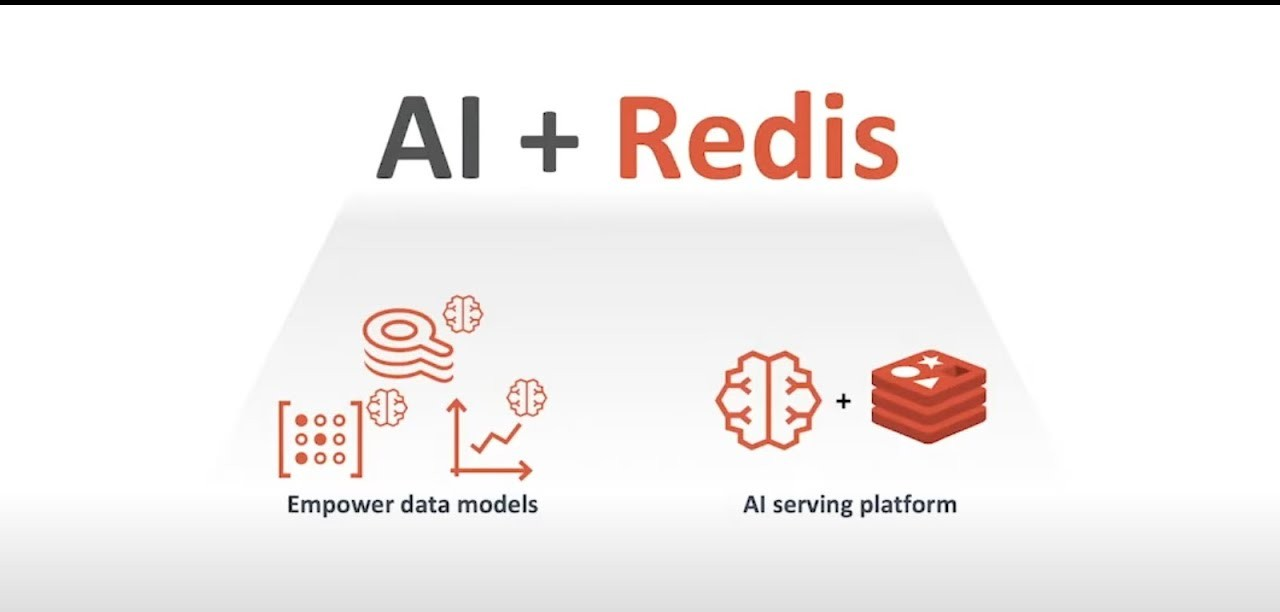

Scalability and Caching

A significant part of the allure of ChatGPT lies in its conversational capabilities. But that also means multiple users will potentially be sending repetitive prompts, especially for FAQs or simple queries. Implementing a caching mechanism can cut costs and reduce the need for identical queries to reach OpenAI’s API.

A tool like Redis could work well here, allowing you to store commonly asked questions and their respective responses. You could even go as far as implementing some fuzzy matching—when a user query closely resembles a stored query, serve up that cached response instead of generating a new one.
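To make the caching idea concrete, here is a minimal in-memory sketch. It is a stand-in for Redis, not a Redis client: `createResponseCache` and `normalizeQuery` are hypothetical names, and the normalization (lowercasing, stripping punctuation) is only a crude approximation of fuzzy matching.

```javascript
// Normalize a query so trivially different phrasings share one cache key.
function normalizeQuery(query) {
  return query
    .toLowerCase()
    .replace(/[^\p{L}\p{N}\s]/gu, '') // drop punctuation and symbols
    .replace(/\s+/g, ' ')
    .trim();
}

// In-memory cache with a time-to-live; `now` is injectable for testing.
function createResponseCache(ttlMs, now = Date.now) {
  const entries = new Map();

  return {
    get(query) {
      const key = normalizeQuery(query);
      const hit = entries.get(key);
      if (!hit) return undefined;
      if (hit.expiresAt <= now()) {
        entries.delete(key); // expired: evict and report a miss
        return undefined;
      }
      return hit.value;
    },
    set(query, value) {
      entries.set(normalizeQuery(query), { value, expiresAt: now() + ttlMs });
    },
  };
}
```

In the proxy, you would check `cache.get(message)` before calling OpenAI and call `cache.set(message, reply)` afterwards. Swapping the `Map` for Redis keeps the same shape while letting several server instances share the cache.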

Making the UX Shine

We’ve got the technical side covered, but the real magic of ChatGPT in a mobile app goes beyond functionality—it's in how the user perceives the interaction. Users expect conversations with AI to be smooth and fast, much like a natural chat with a friend.

This means avoiding jarring transitions. Adding animations—like a typing indicator or a slight delay before showing the response—gives users a sense of anticipation, much like waiting for a reply in a real conversation. Also, make sure the text input is easily accessible and doesn't get obstructed by the keyboard (especially on iOS devices where this can be an issue).
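One way to drive a typing indicator is to keep an explicit `isTyping` flag in the chat state. The reducer below is a sketch intended for React’s `useReducer` hook; the state shape and the action names (`send`, `receive`, `fail`) are assumptions made for illustration.

```javascript
// Hypothetical chat state for use with React's useReducer hook.
const initialChatState = { messages: [], isTyping: false, error: null };

function chatReducer(state, action) {
  switch (action.type) {
    case 'send': // user submitted a message: show the typing indicator
      return {
        messages: [...state.messages, { role: 'user', text: action.text }],
        isTyping: true,
        error: null,
      };
    case 'receive': // reply arrived: hide the indicator and append the reply
      return {
        messages: [...state.messages, { role: 'assistant', text: action.text }],
        isTyping: false,
        error: null,
      };
    case 'fail': // request failed: hide the indicator and keep the history
      return { ...state, isTyping: false, error: action.error };
    default:
      return state;
  }
}
```

In the component, `const [state, dispatch] = useReducer(chatReducer, initialChatState);` gives you `state.isTyping` to toggle an animated indicator. For the keyboard issue, wrapping the screen in React Native’s `KeyboardAvoidingView` is a common starting point.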

Handling Errors Gracefully

When things go wrong—and let’s face it, things do go wrong—your app should handle it gracefully. An error like "Unable to reach our servers" should be humanized. Something like, “Oops! Looks like our assistant stepped away for a coffee break. Please try again in a moment,” turns frustration into a light chuckle.

Always make sure there’s a way for users to retry actions. Whether it's retrying the entire conversation or re-sending just the last prompt, keep user frustrations to a minimum.
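The retry behavior and the humanized copy can be sketched together. `withRetry` and `friendlyErrorMessage` are hypothetical helpers, the retry count and delays are arbitrary defaults, and the `error.response.status` check assumes the error shape axios uses for HTTP failures.

```javascript
// Retry an async call with exponential backoff between attempts.
async function withRetry(fn, { retries = 2, baseDelayMs = 500 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt += 1) {
    try {
      return await fn(attempt);
    } catch (error) {
      lastError = error;
      if (attempt < retries) {
        const delay = baseDelayMs * 2 ** attempt; // 500ms, 1000ms, ...
        await new Promise((resolve) => setTimeout(resolve, delay));
      }
    }
  }
  throw lastError; // every attempt failed
}

// Map a failure to copy the user can act on.
function friendlyErrorMessage(error) {
  const status = error && error.response && error.response.status;
  if (status === 429) {
    return 'Hey, give us a moment to think. Please try again shortly.';
  }
  return 'Oops! Looks like our assistant stepped away for a coffee break. Please try again in a moment.';
}
```

A send handler could wrap its request as `withRetry(() => axios.post(url, { message }))` and, if that still throws, show `friendlyErrorMessage(error)` next to a button that re-sends the last prompt.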

Potential Use Cases in React Native Apps

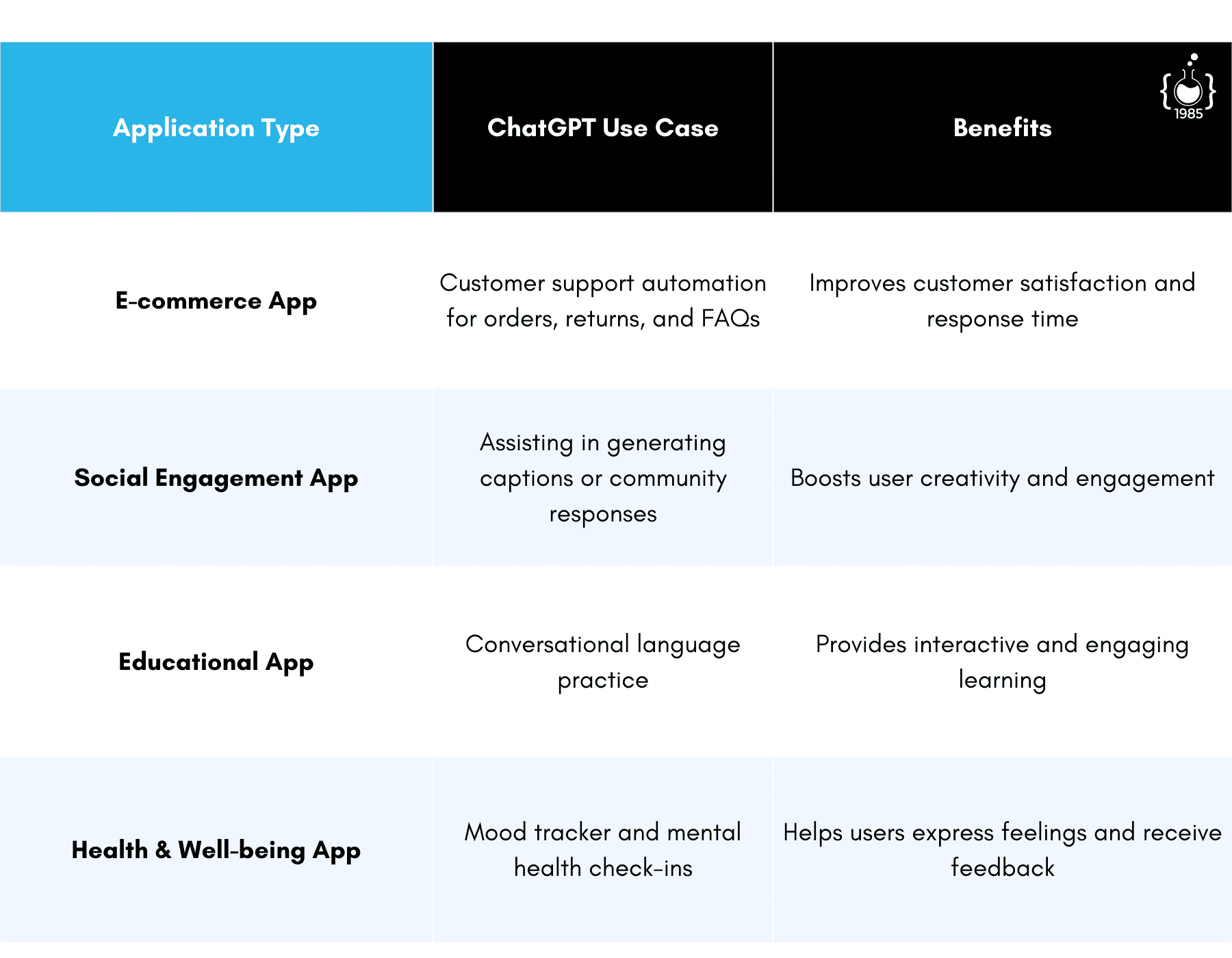

ChatGPT doesn’t have to be a one-size-fits-all solution. Depending on the context of your app, you could adapt its use case:

- Customer Support Automation: Imagine an e-commerce app where users can ask questions about orders, returns, or product details. ChatGPT can handle most simple customer queries, escalating to human support only for more complex issues.

- Content Generation: For social apps or apps centered around community engagement, ChatGPT can be used to help users craft content. Imagine a social media scheduling app suggesting catchy captions.

- Learning and Education: A language learning app can use ChatGPT to provide conversation practice. It’s a powerful way for learners to apply their skills in a simulated, responsive dialogue.

- Health and Well-being Apps: ChatGPT can answer frequently asked questions, or even support a mood tracker by letting users type out their feelings and offering compassionate, relevant feedback.

Wrapping Up

Integrating ChatGPT into a React Native app isn’t just adding a feature—it’s enhancing the user journey by offering a sense of connection and real-time interaction. Sure, it’s technically challenging, with concerns around rate limiting, latency, and data privacy, but the reward can be immense. You turn a one-dimensional app experience into something genuinely interactive and, frankly, quite engaging.

As someone running an outsourced software dev company, my advice is: Start simple. Don’t overthink the perfect implementation. Get a minimum viable product up and running. See how your users react, gather data, and iterate. AI is evolving, and your product should evolve with it.

The key takeaway? The future of mobile apps is conversational. Implementing something like ChatGPT today is not just a cool idea—it’s a step toward keeping pace with where users expect software to go. And let’s be honest, who doesn’t love the idea of giving their app a personality?