How Can We Design Our Architecture to Be Cloud-Native and Scalable?

Scalable cloud-native architecture isn't magic. It's smart design. Learn how to make it happen step by step.

You have a great product, people love it, and your customer base is growing faster than you ever imagined. That's the good news. The not-so-good news? Your architecture is starting to look like it’s been taped together with digital duct tape. It’s clunky, brittle, and every time you push a feature update, you break something. Sound familiar?

The truth is, designing an architecture that is cloud-native and scalable is an art. It’s about thinking ahead, planning for growth, and ensuring that every piece you build can flex and stretch as your business evolves. It’s also about embracing cloud-native paradigms right from the start, thinking beyond the obvious, and building something that can truly take advantage of all the scalability, resilience, and cost efficiency the cloud promises.

Let’s get started. This isn’t an overview of cloud computing fundamentals. Instead, we’ll get into the thick of it, with nuanced insights, specific patterns, and real lessons we’ve learned while building cloud-native solutions here at 1985.

It's All About the Cloud, Not Just In the Cloud

To design cloud-native architecture, you need to embrace the cloud-native mindset. This isn’t about taking an existing application and deploying it to AWS, Azure, or GCP. It’s about adopting design principles and technologies that let you make the most of what the cloud offers.

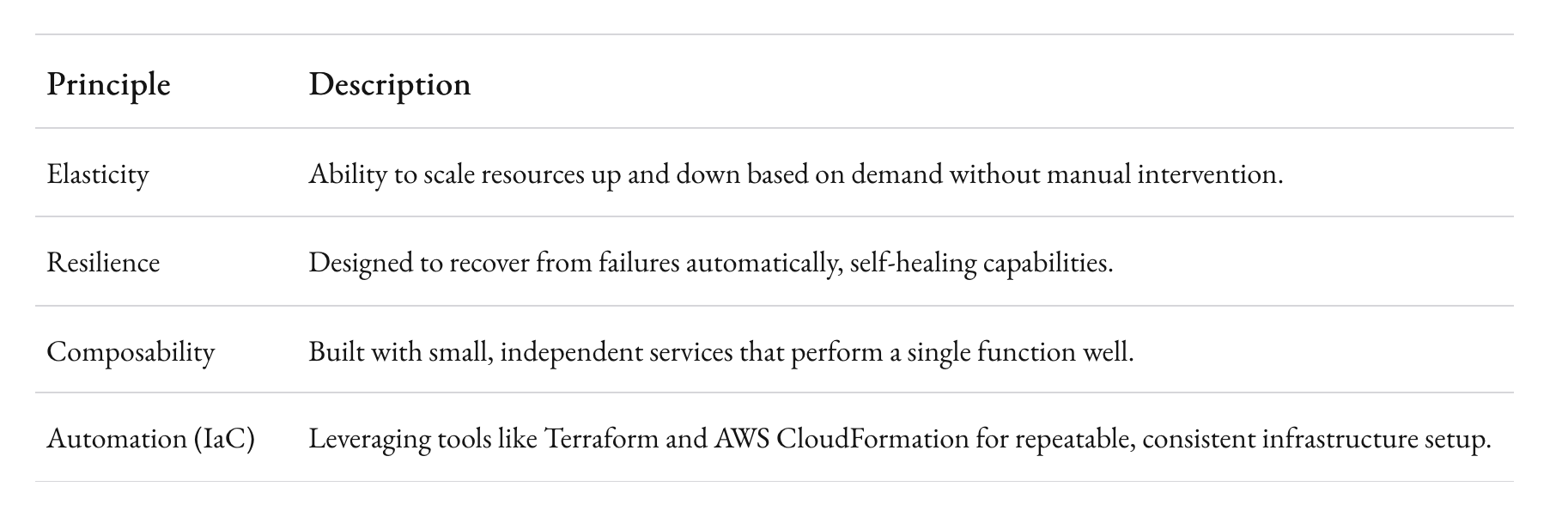

A cloud-native system is elastic. It scales up and down without human intervention. It’s resilient — designed to tolerate failures and heal itself. It’s composable, built with small services that do one thing well. Think about scalability from the very first architectural sketch, as something woven into the DNA of your system.

Cloud-native architecture also thrives on automation. Infrastructure as Code (IaC) is fundamental here, helping you version and manage infrastructure with the same precision as application code. Consider Terraform or AWS CloudFormation — if you’re not automating provisioning, you’re losing out on one of the greatest strengths of cloud-native.
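What makes IaC more than scripting is its declarative model: tools like Terraform compare the infrastructure you declare with what actually exists and compute a plan of changes before touching anything. The toy Python sketch below illustrates only that diffing step. It assumes resources can be keyed by name and compared as plain attribute dictionaries; the resource names are hypothetical, and real tools also track dependencies, state files, and provider APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    """Changes needed to move the actual infrastructure to the declared state."""
    create: list[str] = field(default_factory=list)
    update: list[str] = field(default_factory=list)
    delete: list[str] = field(default_factory=list)


def compute_plan(desired: dict, actual: dict) -> Plan:
    """Diff declared resources against existing ones, keyed by resource name."""
    plan = Plan()
    for name, attrs in desired.items():
        if name not in actual:
            plan.create.append(name)   # declared but missing
        elif actual[name] != attrs:
            plan.update.append(name)   # exists but has drifted from the declaration
    for name in actual:
        if name not in desired:
            plan.delete.append(name)   # exists but is no longer declared
    return plan


# Hypothetical resources; the declared state would live in version control.
desired = {
    "web-asg": {"min_size": 2, "max_size": 10},
    "orders-queue": {"visibility_timeout": 30},
}
actual = {
    "web-asg": {"min_size": 2, "max_size": 4},
    "legacy-bucket": {"versioning": False},
}

print(compute_plan(desired, actual))
# Plan(create=['orders-queue'], update=['web-asg'], delete=['legacy-bucket'])
```

Once the actual state matches the declaration, the plan comes back empty (`compute_plan(desired, desired) == Plan()`), which is what makes repeated IaC runs safe.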

Decompose into Microservices, But Make Them Granular Enough to Scale

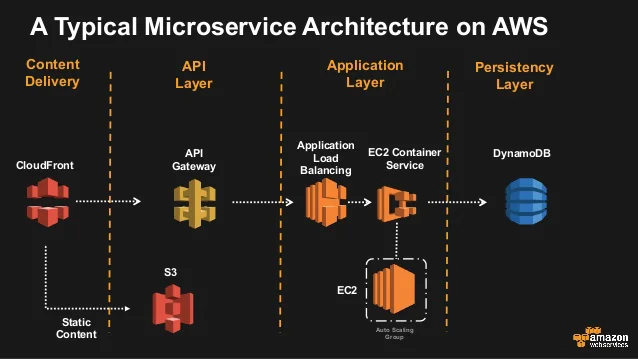

Microservices are one of the pillars of scalable cloud-native architecture. But designing a microservices architecture isn’t about dividing everything up into the smallest possible parts. There's a balance. You want granularity, but not at the expense of constant cross-service chatter that slows everything down.

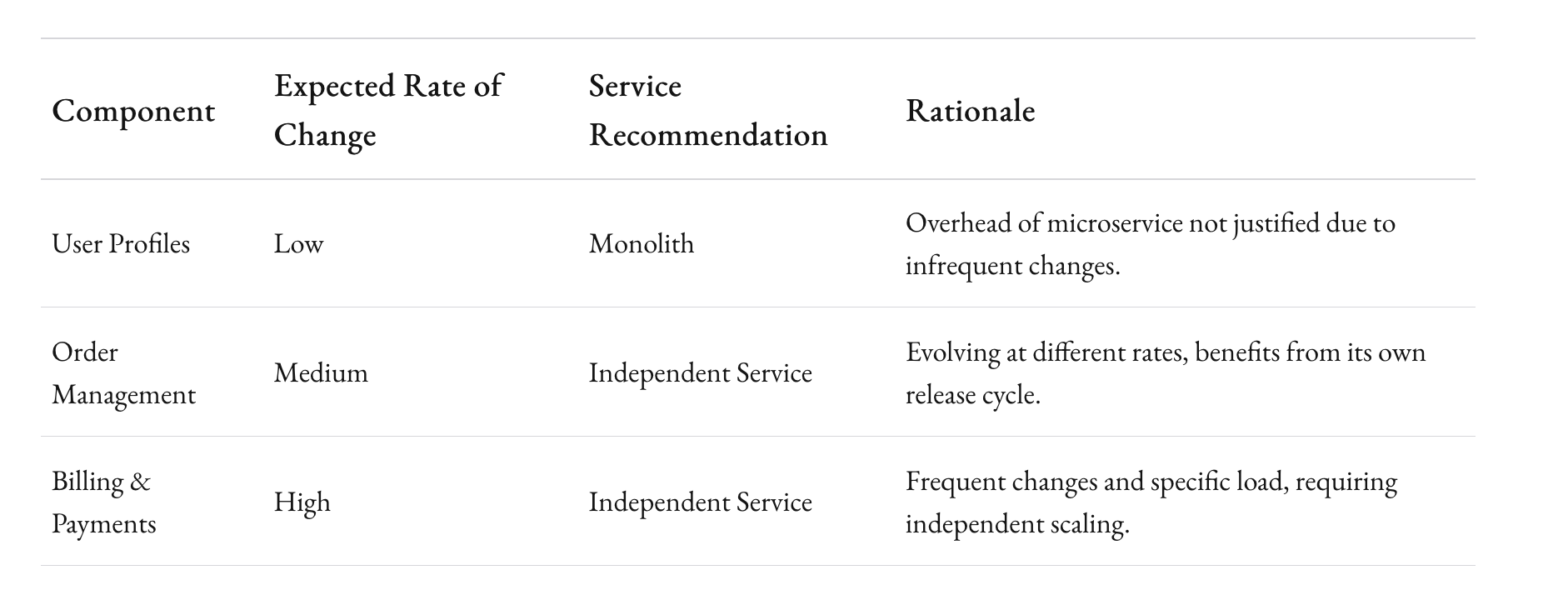

A great starting point is to identify components of your system that change at different paces. Split these out into distinct services. For example, in an e-commerce system, order management may evolve independently of inventory. Make those independent services. But don’t fall into the trap of splitting something like user profiles into multiple tiny services just because you can — the cost of communication overhead and orchestration complexity might kill your dreams of scalability.

Another key here is to design services that are independently deployable. Each service should have its own release cycle. When you push an update, it shouldn’t cause a ripple effect of dependencies across the architecture. Use CI/CD pipelines to manage these deployments independently — more on automation later.

Netflix is often used as the poster child for scalable microservices — their architecture involves over 700 services, all built to scale individually. But they didn’t start there. They found logical boundaries within their system and iterated until they had the ideal level of granularity. Designing for scalability means anticipating growth, but not over-engineering to the point of paralysis.

Make Data Decoupling a Priority

Data is often the biggest bottleneck when trying to scale. A common mistake is to put all your data in one monolithic database that every service reads from and writes to. This tightly couples services: a schema change or a load spike in one service affects all the others, and you can’t scale any one of them without also scaling (and risking) the shared database.

Instead, think in terms of data ownership. Each microservice should own its data — entirely. If you’re building a billing service, it should own its records without any dependencies on a central database used by other services. This lets you independently scale each service based on its specific load.

Of course, this means thinking about data consistency. In a cloud-native world, you’re usually trading the immediate, strong consistency we all love from monoliths for eventual consistency: a service’s view of data owned by another service may briefly lag behind the source of truth. It’s a trade-off, but one that’s worth it. Implement event-driven architectures to keep services updated. Kafka, RabbitMQ, or AWS SNS/SQS are all reliable choices here. They enable asynchronous communication between services, preserving decoupling while letting every service’s copy of the data converge over time.
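Here is a minimal sketch of that flow, assuming an in-memory queue stands in for Kafka, RabbitMQ, or SNS/SQS. The billing service owns its invoices and publishes an event; an order service keeps its own local copy and updates it only when it consumes the event. Between publish and consume, the two views disagree, and that window is the eventual-consistency trade-off. All class, event, and field names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class InvoicePaid:
    """Event published by the billing service (hypothetical schema)."""
    order_id: str
    amount_cents: int


class EventBus:
    """In-memory stand-in for a message broker such as Kafka or SNS/SQS."""

    def __init__(self) -> None:
        self._queue: deque = deque()
        self._subscribers = []

    def subscribe(self, handler) -> None:
        self._subscribers.append(handler)

    def publish(self, event) -> None:
        self._queue.append(event)  # delivery happens later, not during publish

    def drain(self) -> None:
        """Deliver all queued events; a real broker does this continuously."""
        while self._queue:
            event = self._queue.popleft()
            for handler in self._subscribers:
                handler(event)


class BillingService:
    def __init__(self, bus: EventBus) -> None:
        self.invoices: dict[str, int] = {}  # billing is the sole owner of this data
        self.bus = bus

    def record_payment(self, order_id: str, amount_cents: int) -> None:
        self.invoices[order_id] = amount_cents  # write to its own store
        self.bus.publish(InvoicePaid(order_id, amount_cents))


class OrderService:
    def __init__(self, bus: EventBus) -> None:
        self.paid_orders: set[str] = set()  # local copy, updated only via events
        bus.subscribe(self.on_event)

    def on_event(self, event) -> None:
        if isinstance(event, InvoicePaid):
            self.paid_orders.add(event.order_id)  # idempotent: redelivery is harmless


bus = EventBus()
billing = BillingService(bus)
orders = OrderService(bus)

billing.record_payment("order-42", 1999)
print("order-42" in orders.paid_orders)  # False: event not consumed yet
bus.drain()
print("order-42" in orders.paid_orders)  # True: the views have converged
```

A production version would also need the local write and the publish to succeed or fail together (for example via a transactional outbox) and would rely on idempotent handlers, like `on_event` here, to tolerate duplicate deliveries.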

There’s a reason Amazon broke its monolithic database into hundreds of micro-databases, each with a specific job: it makes scaling predictable rather than a chaotic headache.

Serverless Where It Makes Sense

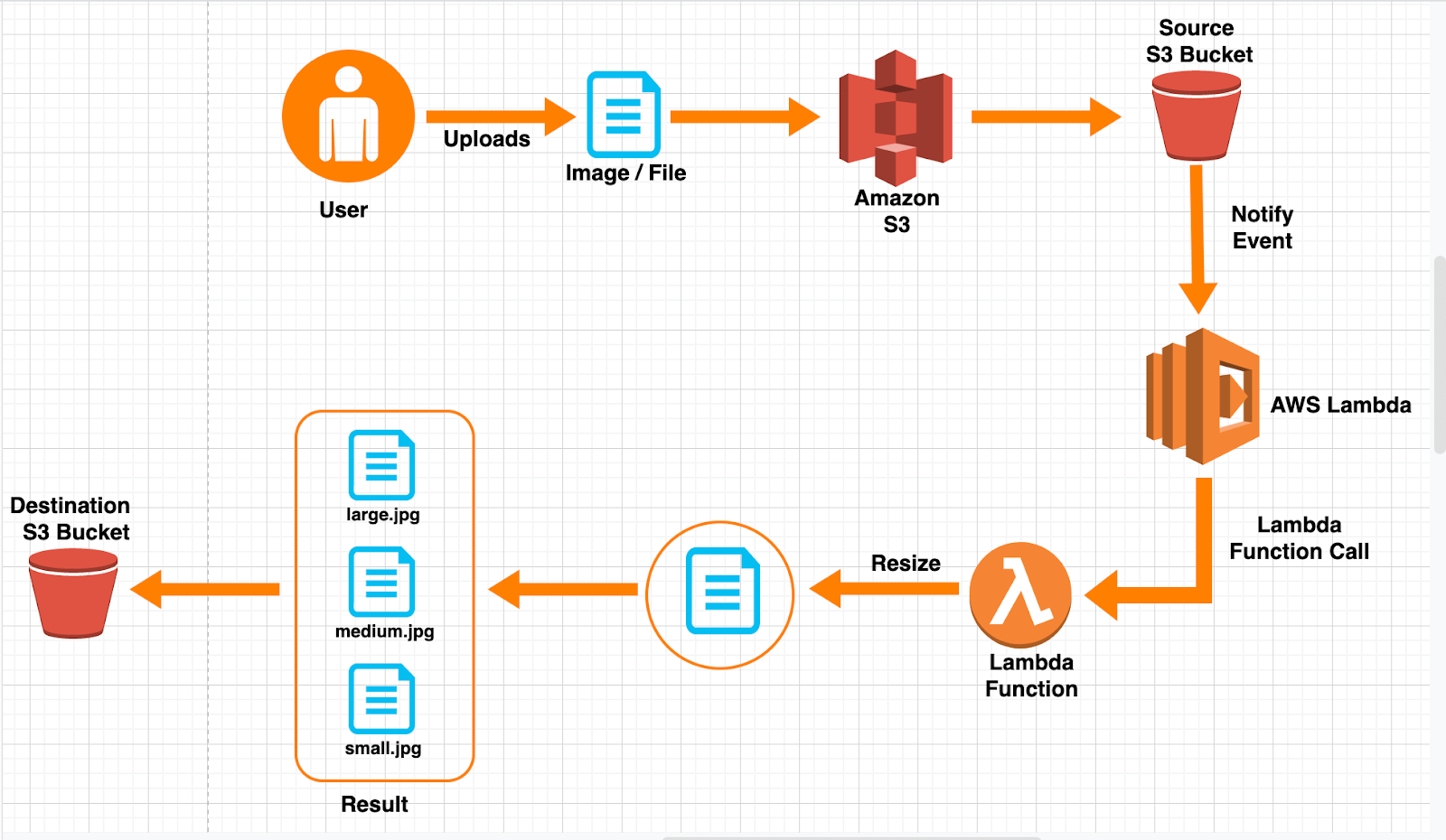

Serverless architectures are a game-changer when it comes to scaling. No servers to manage, and the promise of auto-scaling from zero to millions in no time. But — there’s a caveat. Serverless isn’t the magic bullet for everything.

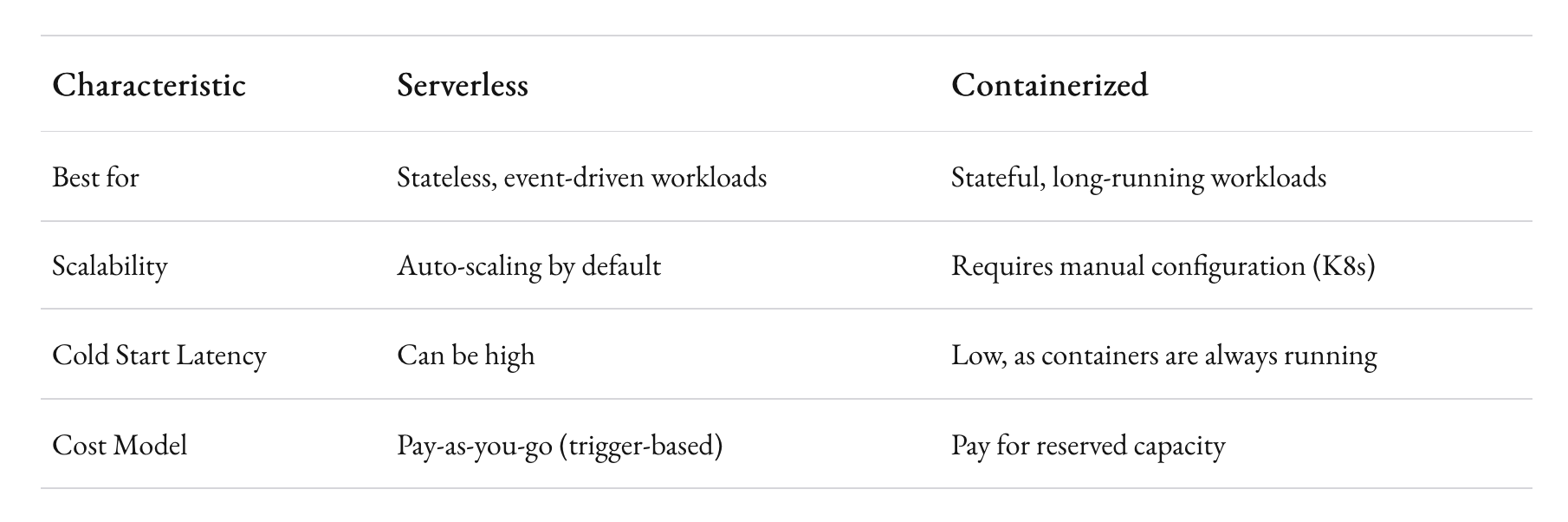

Lambda functions, or any equivalent FaaS (Function as a Service) technology, are great for stateless, event-driven, short-lived workloads. API backends, data transformations, image processing: these are perfect use cases. But if you have stateful, long-running processes, serverless might not be the best fit. Cold starts add latency whenever a new function instance has to initialize, and runtime limits (AWS Lambda caps a single invocation at 15 minutes) cut long jobs short, so misapplied serverless can hurt performance.
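To show what "stateless and event-driven" looks like in practice, here is a sketch of a Python function for AWS Lambda, which calls the configured handler as `handler(event, context)`. The event is assumed to follow the SQS trigger layout (a `Records` list whose items carry a string `body`); the order fields and the price-to-cents transformation are hypothetical. Everything the function needs arrives in the event, so the platform can run many copies in parallel.

```python
import json


def handler(event, context):
    """Transform each queued message; keeps no state between invocations."""
    items = []
    for record in event.get("Records", []):
        order = json.loads(record["body"])  # SQS delivers the message body as a string
        items.append({
            "order_id": order["order_id"],
            # Hypothetical transformation: decimal price string -> integer cents.
            "total_cents": round(float(order["total"]) * 100),
        })
    return {"processed": len(items), "items": items}


# Local usage with a hand-built, SQS-shaped event (context is unused here).
sample_event = {"Records": [{"body": json.dumps({"order_id": "A1", "total": "19.99"})}]}
print(handler(sample_event, None))
# {'processed': 1, 'items': [{'order_id': 'A1', 'total_cents': 1999}]}
```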

The trick is knowing when to use serverless and when to stick to more traditional containerized services. A hybrid architecture, where some parts are serverless and others are containerized, often works best. The key is aligning the architecture with workload patterns and using serverless where its strengths shine brightest.

Take iRobot’s cloud platform as an example. They use serverless Lambda functions to collect data from millions of devices in real time, while using containers to handle complex processing that requires state. Mixing and matching to play to each component’s strengths is the real art of cloud-native scalability.

Embrace Containers: Orchestration Is Your Secret Weapon

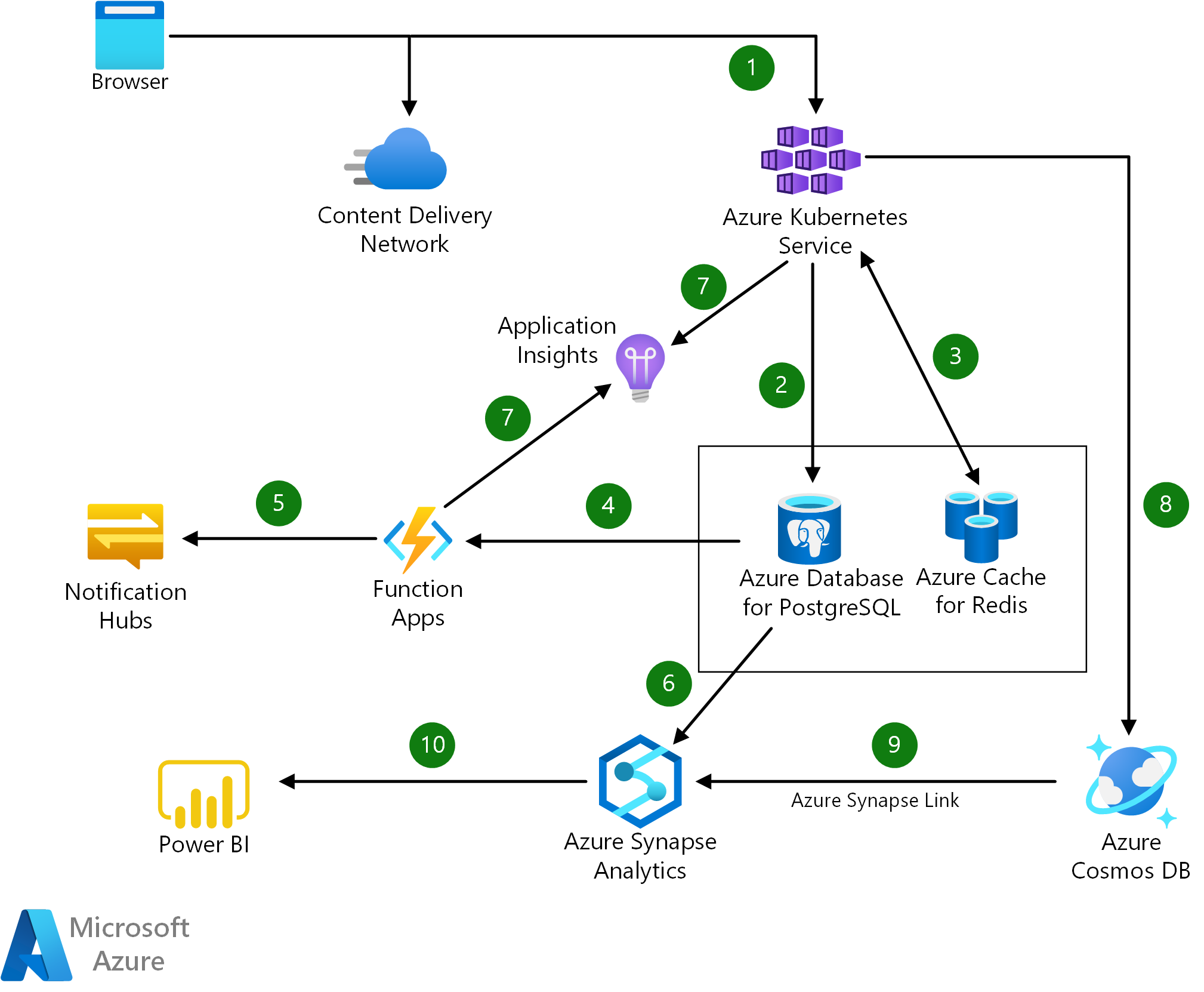

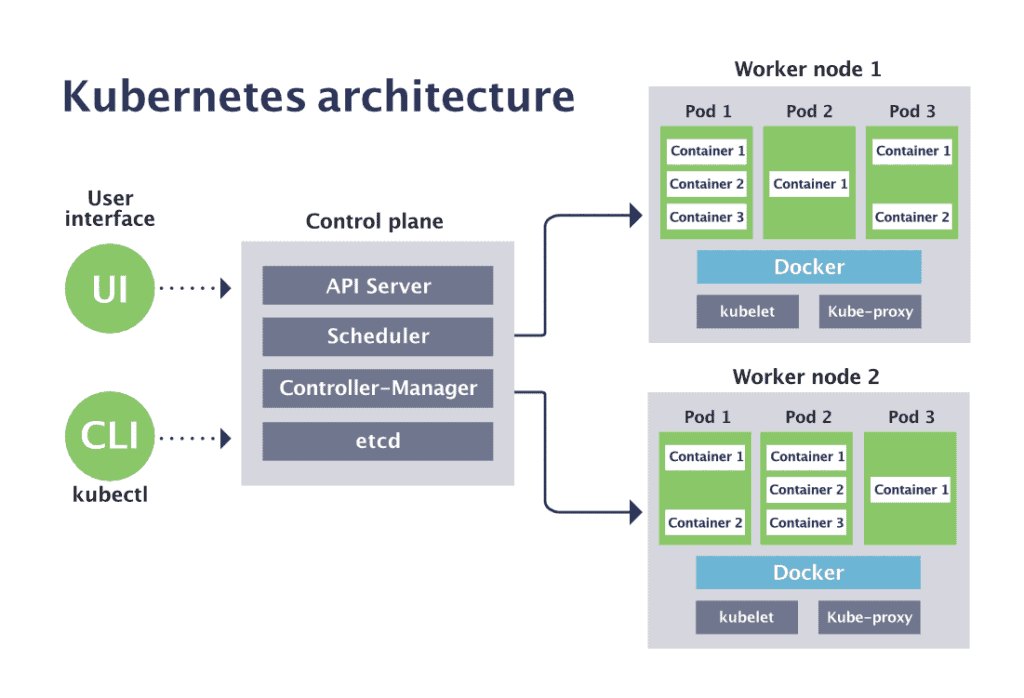

Containers are often at the heart of cloud-native architecture. Container images (typically built with Docker) bundle an application with its dependencies, giving you consistent, isolated deployments. Orchestrators such as Kubernetes, Docker Swarm, or AWS ECS then manage where and how those containers run. Containers without orchestration can leave you lost at sea.

Kubernetes has become the de facto orchestration tool for a reason. It can scale your containers automatically, roll out updates without downtime, and restart or reschedule failed containers on its own. However, Kubernetes isn’t something you adopt lightly: it comes with complexity, and managing a cluster demands careful consideration of networking, load balancing, and monitoring.

A key part of using Kubernetes effectively for scalability is defining the right resource requests and limits. Not too generous, because that’s wasteful. Not too restrictive, because that stifles performance. Use the Horizontal Pod Autoscaler (HPA) to adjust the number of pods automatically in response to demand, so your architecture can absorb fluctuations in load without manual intervention.
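The Kubernetes documentation describes the HPA's core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change made when that ratio is within a tolerance (0.1 by default) of 1.0. For CPU utilization targets, the metric is measured relative to each pod's CPU request, which is one reason accurate requests matter. The Python sketch below reproduces only that formula plus the `minReplicas`/`maxReplicas` clamp; it deliberately ignores stabilization windows, scaling policies, and missing-metric handling that the real controller applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA scaling rule (no stabilization window or scaling policies)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid churn
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
# 63% is within 10% of the target -> leave as is.
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4
# Load has dropped to 20% -> scale in.
print(desired_replicas(4, current_metric=20, target_metric=60))   # 2
# A spike would ask for 20 pods, but max_replicas caps it.
print(desired_replicas(8, current_metric=150, target_metric=60))  # 10
```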

Airbnb moved to Kubernetes specifically to handle spikes in demand, such as during high-traffic booking events. By breaking their application into smaller containerized pieces, orchestrated by Kubernetes, they can scale parts of their architecture based on where the actual demand is, reducing costs and increasing resilience.

Resilience, Observability, and Scaling Beyond Failures

Designing for scalability isn’t just about handling increased load — it’s about doing so gracefully, even in the face of inevitable failures. This is where resilience comes into play.

Implement patterns like Circuit Breaker to protect your architecture from cascading failures. The idea is simple: when a service sees repeated failures from a downstream dependency, the breaker "opens" and further calls fail fast instead of piling up on the struggling dependency. After a cool-down period, trial requests are let through, and the breaker closes again once they succeed. This prevents the entire architecture from being dragged down by a single failure.

Netflix has again set the standard with its open-source library Hystrix, which implements the Circuit Breaker pattern. (Hystrix is now in maintenance mode, and its maintainers point new projects to alternatives such as Resilience4j.) It’s an example of proactive resilience in action: an architecture that is ready for things to go wrong, because in cloud-native systems, they inevitably will.
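Hystrix itself is a Java library, so as a language-neutral illustration, here is a minimal Python sketch of the Circuit Breaker pattern with its usual closed, open, and half-open states. The threshold, timeout, and injectable clock are assumptions chosen for readability and testing; production libraries add sliding failure windows, metrics, and thread safety.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected without reaching the dependency."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds to wait before allowing a trial call
        self.clock = clock                          # injectable for tests
        self.failures = 0
        self.opened_at = None                       # None means the circuit is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def call(self, fn, *args, **kwargs):
        if self.state == "open":
            raise CircuitOpenError("dependency unavailable; failing fast")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many consecutive failures, (re)opens the circuit.
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Callers wrap each outbound request, for example `breaker.call(fetch_inventory, sku)` where `fetch_inventory` is a hypothetical client function, and treat `CircuitOpenError` as the cue to serve a fallback such as cached data.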

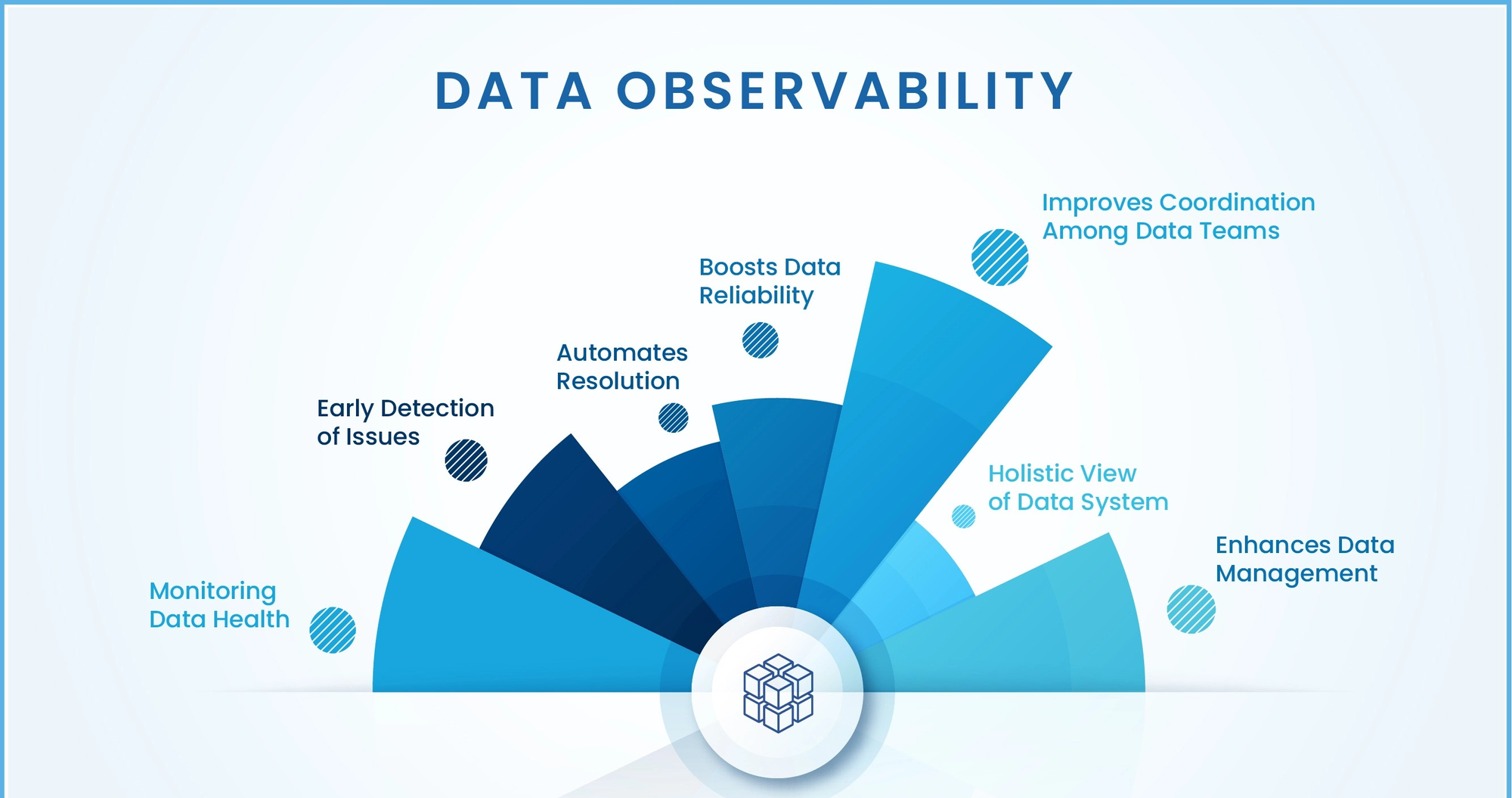

Observability is another cornerstone of a scalable architecture. You need insights into what’s happening across your services at any given moment. Logs, metrics, and traces should all work together to give you a 360-degree view of your system. Tools like Prometheus, Grafana, and Jaeger provide the observability stack you need. They’re essential not just for monitoring health but for identifying bottlenecks and understanding how your services behave under load.
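As a small illustration of how metrics expose bottlenecks, the sketch below computes nearest-rank p50 and p99 latencies per service from raw samples and reports which service has the worst tail. The service names and sample values are made up. In a Prometheus setup you would normally record latencies in a histogram and query percentiles with PromQL's `histogram_quantile` rather than sorting raw samples.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]


def slowest_tail(latencies_ms: dict[str, list[float]], p: float = 99) -> str:
    """Name of the service whose p-th percentile latency is highest."""
    return max(latencies_ms, key=lambda svc: percentile(latencies_ms[svc], p))


# Hypothetical latency samples in milliseconds.
latencies_ms = {
    "checkout": [40, 42, 45, 47, 50, 52, 55, 60, 65, 900],
    "inventory": [80, 82, 85, 88, 90, 92, 95, 97, 99, 120],
}
for service, samples in latencies_ms.items():
    print(service, "p50:", percentile(samples, 50), "p99:", percentile(samples, 99))
print("worst tail:", slowest_tail(latencies_ms))
# checkout p50: 50 p99: 900
# inventory p50: 90 p99: 120
# worst tail: checkout
```

Looking only at the median would point at inventory, yet checkout's tail is far worse. Tail percentiles are usually where scaling bottlenecks show up first.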

Facebook’s deployment of Grafana and Prometheus across their systems allows for real-time monitoring, which is crucial for spotting scaling issues before users feel them. Scalability is not a one-time design decision; it’s an ongoing process of measuring, tuning, and adapting.

Automation Is Everything

If you want to scale, automation cannot be an afterthought. It needs to be embedded in every stage of your development and deployment pipeline. Infrastructure as Code (IaC) keeps your environments consistent and version-controlled, and lets you provision them with a single command. Terraform and AWS CloudFormation help you do this at scale.

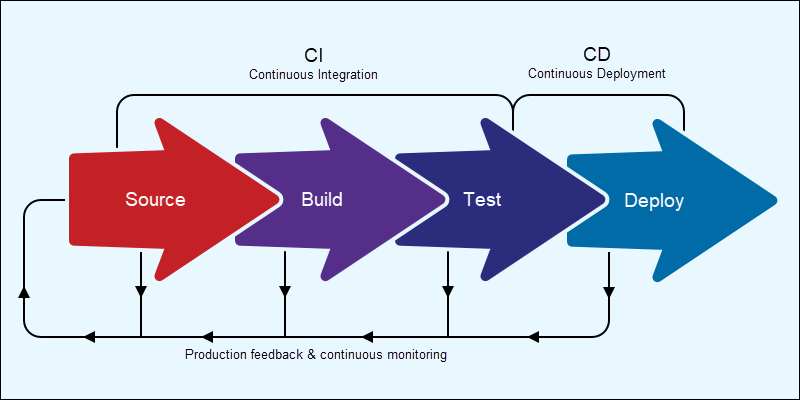

But automation doesn’t end with infrastructure. Continuous Integration/Continuous Deployment (CI/CD) pipelines let you push updates to production rapidly and safely. Automated test stages, including load and failure tests, check that your services hold up under scale and failure before they go live.

A strong CI/CD setup helps you deal with rolling updates, A/B testing, canary deployments, and more — all of which are critical in ensuring your architecture remains scalable even as you make changes. Spinnaker, the open-source multi-cloud continuous delivery tool developed by Netflix, has been instrumental in allowing teams to deploy code hundreds of times a day. With this level of automation, they can scale features in production without impacting the user experience.
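To make the canary idea concrete: a small slice of traffic goes to the new version, and the pipeline compares its health with the current (baseline) version before widening the rollout. Spinnaker's automated canary analysis (Kayenta) compares many metrics statistically; the Python sketch below is a deliberately simplified gate that looks at error rate only. The tolerance, error-rate floor, and minimum sample size are hypothetical values.

```python
from dataclasses import dataclass


@dataclass
class Stats:
    """Request and error counts for one version over an analysis interval."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: Stats, canary: Stats,
                    max_relative_increase: float = 0.5,
                    error_rate_floor: float = 0.001,
                    min_requests: int = 500) -> str:
    """Return 'wait', 'promote', or 'rollback' (all thresholds are hypothetical)."""
    if canary.requests < min_requests:
        return "wait"  # too little canary traffic to judge yet
    # Tolerate a modest relative increase, with a floor so that a zero-error
    # baseline doesn't make a single canary error fatal.
    allowed = max(baseline.error_rate * (1 + max_relative_increase), error_rate_floor)
    return "promote" if canary.error_rate <= allowed else "rollback"


baseline = Stats(requests=10_000, errors=50)                        # 0.5% errors
print(canary_decision(baseline, Stats(requests=1_000, errors=6)))   # promote (0.6% <= 0.75%)
print(canary_decision(baseline, Stats(requests=1_000, errors=20)))  # rollback (2% > 0.75%)
print(canary_decision(baseline, Stats(requests=100, errors=0)))     # wait
```

In a pipeline, "promote" would move the canary to its next traffic step and repeat the check, while "rollback" would shift all traffic back to the baseline.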

Secure, Cost-Effective Scaling

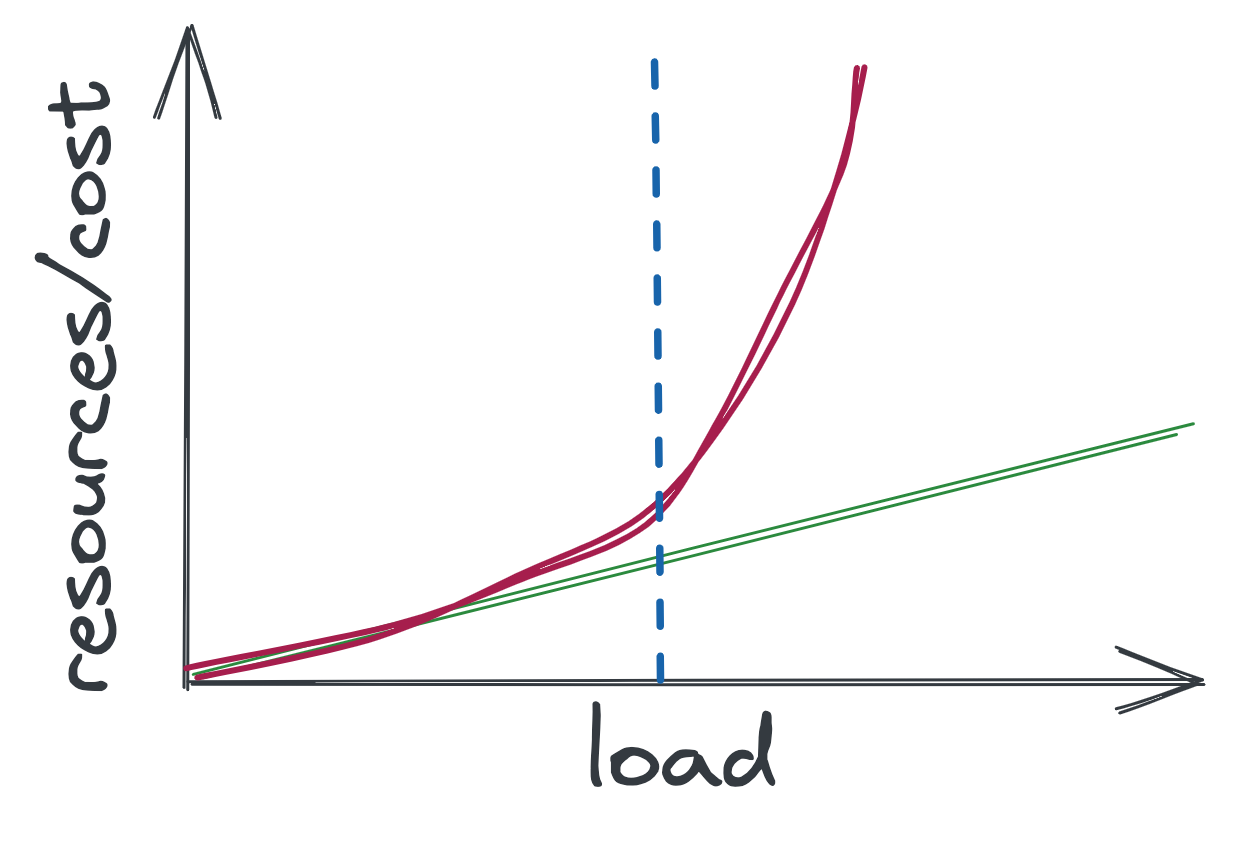

Scaling isn’t just about making things bigger. It’s about doing it cost-effectively and securely. One trap teams often fall into is equating scalability with spending more money. Sure, it’s easy to throw resources at the problem, but the real art lies in scaling while keeping costs under control.

Use auto-scaling groups, right-size your instances, and keep a close eye on your billing metrics. AWS offers tools like Cost Explorer to help monitor expenses — use these to ensure that as your architecture scales, you aren’t accidentally burning through budgets.
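Right-sizing is mostly arithmetic. As a rough sketch: given an expected request rate, the throughput one instance can sustain (which you would measure by load testing), and a target utilization that leaves headroom for traffic to grow while new instances boot, you can estimate how many instances an auto-scaling group needs and roughly what that costs. Every number below, including the hourly price, is a hypothetical placeholder, not real AWS pricing.

```python
import math


def instances_needed(peak_rps: float, rps_per_instance: float,
                     target_utilization: float = 0.7, minimum: int = 2) -> int:
    """Instances required to serve peak_rps while each runs at target_utilization."""
    effective_capacity = rps_per_instance * target_utilization
    return max(minimum, math.ceil(peak_rps / effective_capacity))


def monthly_cost(instances: int, hourly_price: float, hours: float = 730) -> float:
    """Rough monthly cost; 730 is approximately the hours in an average month."""
    return round(instances * hourly_price * hours, 2)


# Hypothetical workload: 1,200 req/s at peak, 300 req/s off-peak,
# and each instance sustains 250 req/s in load tests.
peak = instances_needed(peak_rps=1200, rps_per_instance=250)
off_peak = instances_needed(peak_rps=300, rps_per_instance=250)
print(peak, monthly_cost(peak, hourly_price=0.10))          # 7 511.0
print(off_peak, monthly_cost(off_peak, hourly_price=0.10))  # 2 146.0
```

The gap between the two lines is the money an auto-scaling group saves by not running peak capacity around the clock.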

Security must be a core tenet of your scalable architecture. Every microservice adds attack surface. Use AWS IAM policies to control which services can access which resources, and use a service mesh such as Istio to encrypt and authenticate service-to-service traffic with mutual TLS. Manage secrets through services like AWS Secrets Manager or HashiCorp Vault rather than hardcoding them.
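Rather than baking credentials into images or config files, services can fetch them at runtime. The sketch below assumes a client object exposing `get_secret_value(SecretId=...)` that returns a dict with a `SecretString` key, which is the shape of the boto3 Secrets Manager client. It caches each secret for a short, hypothetical TTL so that scaling out to many instances doesn't multiply API calls. AWS also publishes official caching clients for Secrets Manager, which are worth preferring in production.

```python
import time


class SecretCache:
    """Caches secrets from a Secrets Manager-style client for ttl_seconds."""

    def __init__(self, client, ttl_seconds: float = 300.0, clock=time.monotonic):
        self.client = client        # anything with get_secret_value(SecretId=...)
        self.ttl = ttl_seconds      # hypothetical default; tune to your rotation policy
        self.clock = clock          # injectable for tests
        self._cache: dict[str, tuple[str, float]] = {}

    def get(self, secret_id: str) -> str:
        cached = self._cache.get(secret_id)
        if cached is not None and self.clock() - cached[1] < self.ttl:
            return cached[0]  # still fresh: skip the network call
        response = self.client.get_secret_value(SecretId=secret_id)
        value = response["SecretString"]  # binary secrets arrive as "SecretBinary" instead
        self._cache[secret_id] = (value, self.clock())
        return value


# Usage with boto3 (not executed here; the secret name is hypothetical):
# import boto3
# secrets = SecretCache(boto3.client("secretsmanager"))
# db_password = secrets.get("prod/orders/db-password")
```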

Uber, which handles millions of ride requests per day, scaled its architecture cost-effectively by combining on-premises and cloud-based infrastructure, which let it control costs while keeping the flexibility to scale up when needed. It also secured communication across its hundreds of microservices with a service mesh to reduce vulnerability as it scaled.

Keep Evolving

Designing cloud-native, scalable architecture isn’t a destination; it’s a journey. It’s about laying the foundation with the right principles: embracing microservices, decoupling data, using containers, and going serverless where appropriate. It’s about constantly measuring, automating, and iterating. But more importantly, it’s about a mindset shift: treating your architecture as something alive, growing, and capable of evolving with your needs.

Here at 1985, we’ve learned that the best architectures are those that grow organically, evolving step by step. We don’t try to get everything right the first time, but we do build systems with the flexibility to change. With cloud-native paradigms, we’re not just keeping the lights on — we’re ensuring that as the demands on our systems increase, we can confidently meet them. Every day brings a new lesson in scalability, and that’s what makes this journey so rewarding.

Are you building your architecture to truly scale? It might be time to think about what’s coming next, not just what's working now. Let’s keep the conversation going.