Data Integration That Scales

Explore our hands-on strategies for seamless data integration that grows with your business needs.

Technical strategies for building scalable data integration systems with validation patterns and performance optimization

Running an Outsourced Software Dev company like 1985 has taught me that data is the heartbeat of any modern enterprise. In an era when data is both abundant and essential, building systems that can integrate data at scale isn’t just a luxury—it’s a necessity. Here, I dive deep into the technical strategies that enable data integration systems to scale. I share insights, tips, and some hard-won lessons from the trenches of software development. This isn’t just another overview of basic ETL practices; this is a look under the hood at the validation patterns and performance optimization techniques that separate robust systems from the brittle ones.

Data integration isn’t about simply shoveling data from one place to another. It’s a careful orchestration of processes that must handle a constant barrage of incoming information. The challenge is real. It’s not enough to simply ingest data; you have to validate, transform, and ensure that each byte moves accurately from source to destination. And when you’re dealing with hundreds of millions of records per day, the stakes are high.

In this post, I share a personal account of how we at 1985 approach scalable data integration. I’ll discuss why we need robust validation, the art of performance optimization, and the intricate technical strategies that keep our systems humming along without a hitch. If you’re looking to build or improve your own data integration system, this post is for you.

The Challenge of Scalable Data Integration

Data integration is more than just connecting disparate sources. It’s about creating a seamless flow of high-quality information that feeds business intelligence, analytics, and decision-making processes. When done correctly, it lays the groundwork for innovative insights and transformative business outcomes. When done poorly, it can cripple an organization with inaccuracies, downtime, and inefficiencies.

A Complex Web of Data Sources

Today’s enterprises are more connected than ever. Cloud services, on-premise databases, IoT devices, and third-party APIs form a complex ecosystem. Each of these sources speaks its own dialect, employs different data formats, and operates on its own schedule. The key challenge is to unify these disparate data streams without losing the integrity of the data.

It sounds straightforward, but in practice, it’s a labyrinth of potential pitfalls:

- Latency Issues: How do you ensure data is integrated in near real-time?

- Data Quality: How do you validate data when it comes from multiple, sometimes unreliable, sources?

- Scalability: How do you design a system that grows as data volumes increase exponentially?

Even the most experienced engineers can be stumped by these questions. At 1985, we’ve had to constantly innovate and adapt to maintain a competitive edge.

The Scale Problem

Scaling isn’t merely about handling more data. It’s about ensuring that every component of your data pipeline—from ingestion and validation to transformation and storage—can operate efficiently at scale. Let’s say you have a system that works well with a million records per day. What happens when that volume grows tenfold, or even a hundredfold? Without a solid foundation, performance can degrade rapidly, leading to bottlenecks, data loss, or system failures.

Statistics show that 63% of companies are scaling up their data operations to meet business demands, yet many struggle with maintaining data integrity as they expand their systems. This reality underscores the need for technical strategies that not only support current loads but also anticipate future growth.

Technical Strategies for Scalable Data Integration

The first step to achieving scalable data integration is to design a system that is modular and flexible. Modular design allows you to isolate problems and upgrade or replace components without affecting the entire system. This is crucial in an environment where technological advances and business requirements change rapidly.

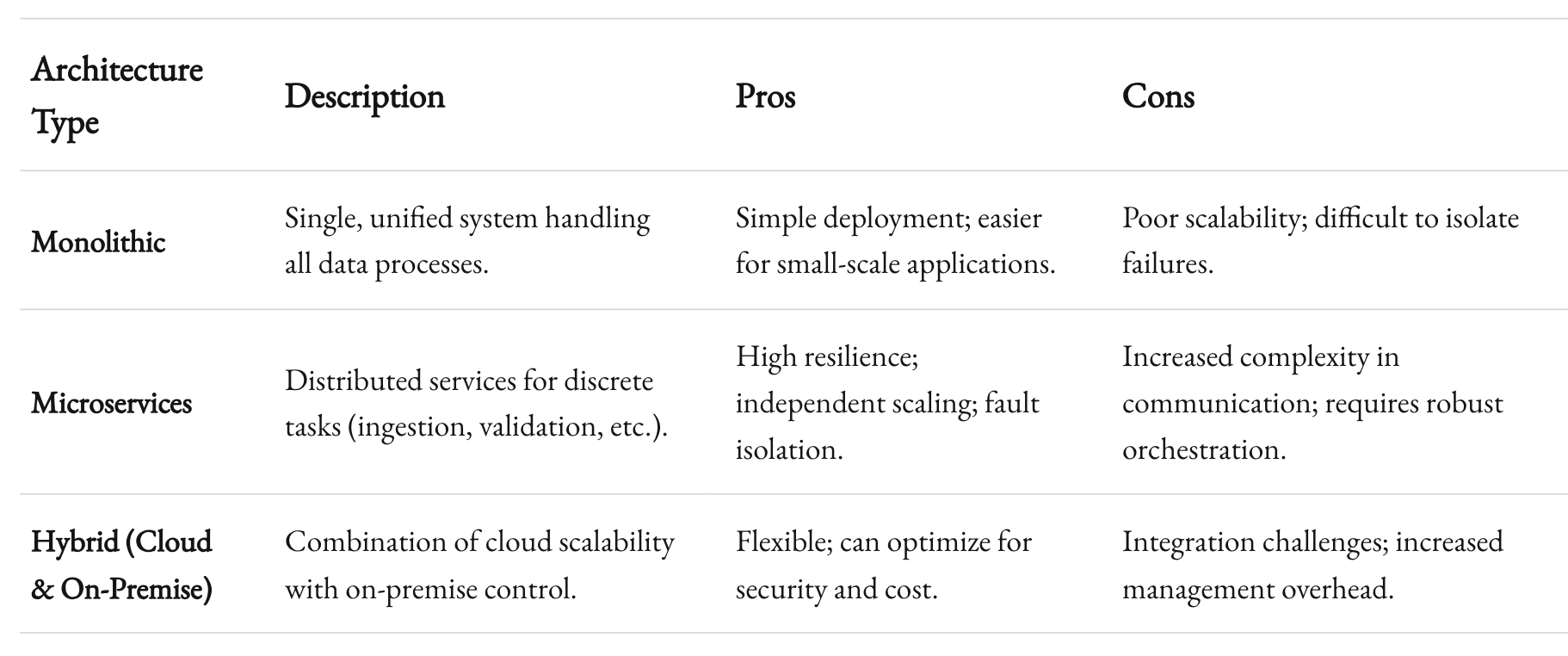

Embracing a Microservices Architecture

One of the most effective strategies we’ve adopted at 1985 is the microservices architecture. Instead of building a monolithic data integration pipeline, we break the process into smaller, autonomous services. Each service handles a specific task—data ingestion, transformation, validation, or loading—and communicates with others through well-defined APIs.

This approach offers several benefits:

- Isolation of Faults: If one service fails, it doesn’t bring down the entire system.

- Scalability: Individual services can be scaled independently based on demand.

- Flexibility: New features or integrations can be added without overhauling the entire system.

Microservices help us build systems that are resilient. When one part of the pipeline needs more processing power, we can simply spin up additional instances of that service. This distributed approach is key to managing high volumes of data effectively.

Using Message Queues and Stream Processing

Another cornerstone of scalable data integration is the use of message queues and stream processing. Instead of processing data in large, infrequent batches, streaming allows for continuous data flow and real-time analytics. Tools like Apache Kafka and AWS Kinesis have become indispensable in our toolbox.

A typical pipeline might look like this:

- Data Ingestion: Data from various sources is published to a message queue.

- Stream Processing: Consumer applications subscribe to the queue and process data as it arrives.

- Data Storage: Processed data is then stored in a data warehouse or a database optimized for fast queries.

This decoupling of components means that even if one part of the pipeline experiences a delay, it won’t necessarily stall the entire process. Data can continue flowing into the system, buffered by the queue until it can be processed. This design minimizes downtime and ensures that your system remains responsive under load.

The Role of Containers and Orchestration

Containers have revolutionized the way we deploy and manage applications. Tools like Docker and Kubernetes allow us to package our microservices into containers that can run reliably in any environment. Kubernetes, in particular, helps us automate the deployment, scaling, and management of containerized applications.

With Kubernetes, we can:

- Auto-Scale Services: Dynamically adjust the number of running instances based on the load.

- Simplify Deployments: Roll out updates or new features with minimal downtime.

- Improve Resource Utilization: Efficiently manage computing resources, ensuring that no service hogs the system.

Using containers also makes it easier to replicate our production environment in staging, ensuring that any changes or upgrades are thoroughly tested before they hit live systems.

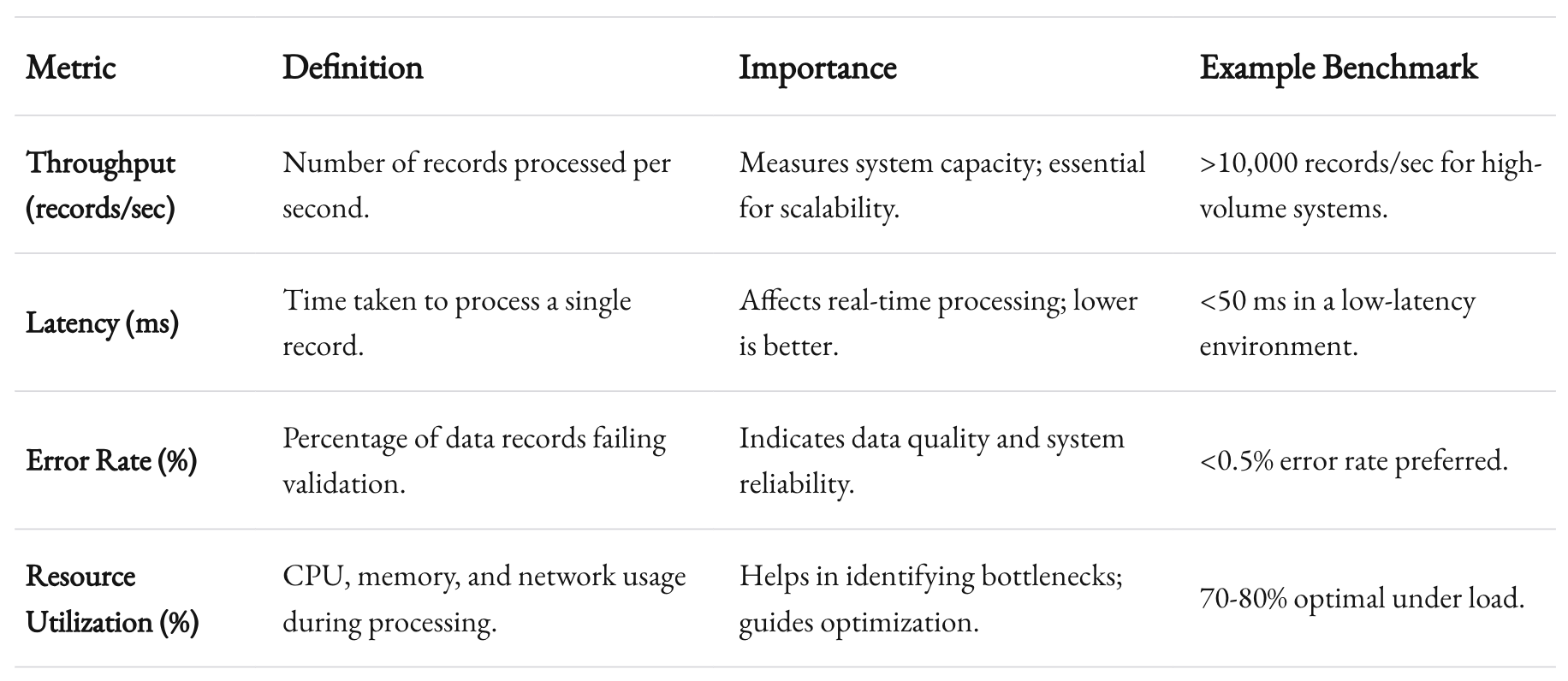

This table encapsulates the technical ecosystem necessary for building a robust, scalable data integration system. Each component is vital, and the synergy between them is what allows us to scale effectively at 1985.

Validation Patterns: Ensuring Data Quality at Scale

Data is only as valuable as it is accurate. As data flows from disparate sources, it is crucial to validate and transform it correctly. A single mistake can propagate through your system, leading to flawed analytics, misguided business decisions, and significant losses.

Why Validation Matters

In a high-volume data integration system, validation isn’t just an added feature—it’s a necessity. Every piece of data that enters your system should be vetted to ensure it meets quality standards. Consider the consequences of processing erroneous data: reports become unreliable, dashboards misleading, and ultimately, trust in the system diminishes.

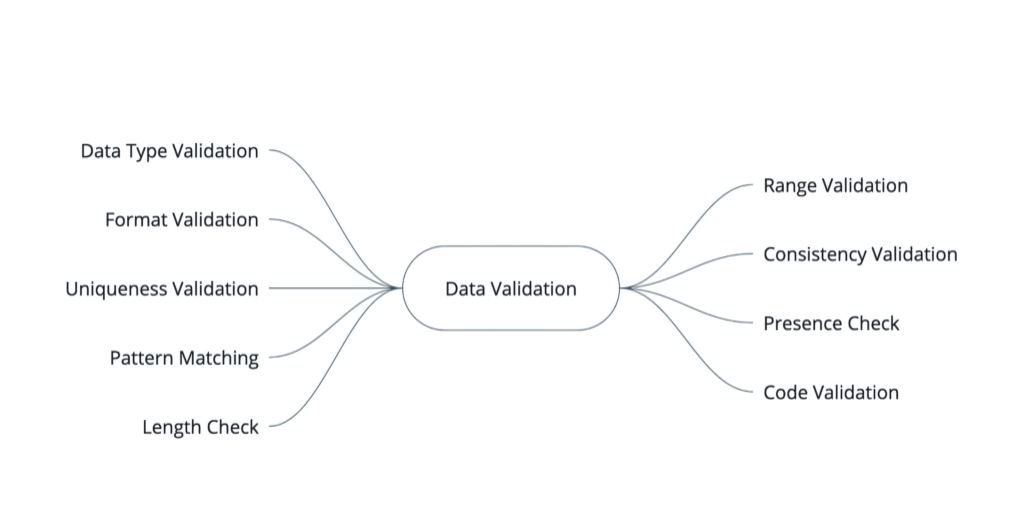

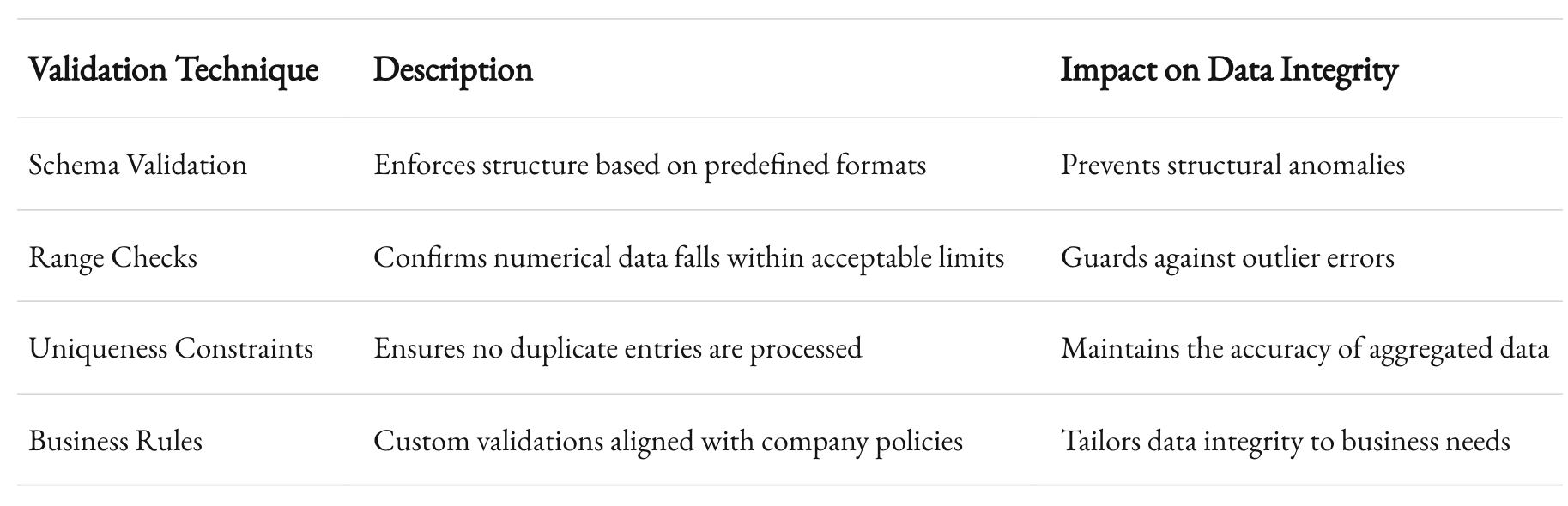

Validation patterns include:

- Schema Validation: Ensuring data adheres to a predefined structure.

- Range Checks: Verifying that numerical values fall within acceptable limits.

- Uniqueness Constraints: Preventing duplicate records from skewing analytics.

- Business Rules Enforcement: Validating that data meets business-specific criteria.

By incorporating these validation steps early in the data pipeline, you prevent downstream systems from being burdened with data quality issues.

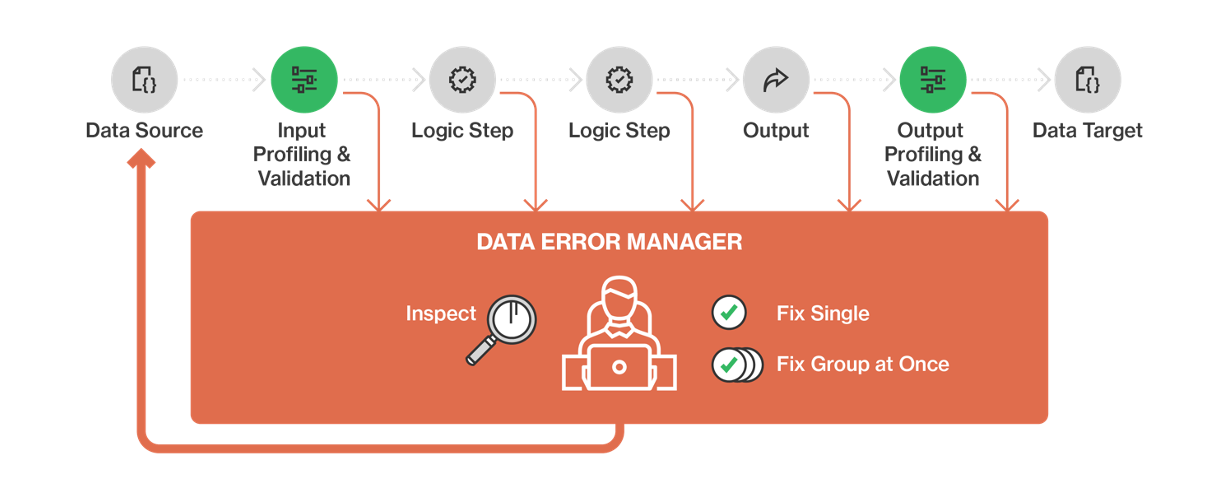

Building Scalable Validation Pipelines

When building validation pipelines, one common pitfall is to treat validation as an afterthought. At 1985, we integrate validation at every stage of the data journey. Here’s how we approach it:

- Pre-Ingestion Validation: At the point of ingestion, preliminary checks filter out clearly invalid data. This reduces the load on subsequent systems.

- In-Process Validation: As data flows through microservices, dedicated validation modules enforce schema checks, range checks, and business rules.

- Post-Ingestion Auditing: Once data is stored, periodic audits ensure that the integrated data remains consistent and accurate.

These stages are not mutually exclusive. Rather, they form a layered defense mechanism that catches errors at multiple points, ensuring that even if one layer misses an issue, another will catch it before the data is used for critical decision-making.

Each validation technique plays a crucial role. The combination of these techniques helps create a robust data pipeline that not only scales but also maintains the highest quality of data, even under the strain of massive volumes.

The Role of Automation in Validation

Automation is key. Manually reviewing data is impractical, especially when dealing with millions of records per day. Leveraging automation tools, we build self-healing pipelines. For example, if a particular type of error spikes, our monitoring systems alert us immediately, and automated rollback or corrective processes are triggered.

According to a recent study by Gartner, organizations that integrate automated data validation see a 40% improvement in data quality metrics. This improvement isn’t just a number; it translates directly into better business outcomes and more reliable analytics.

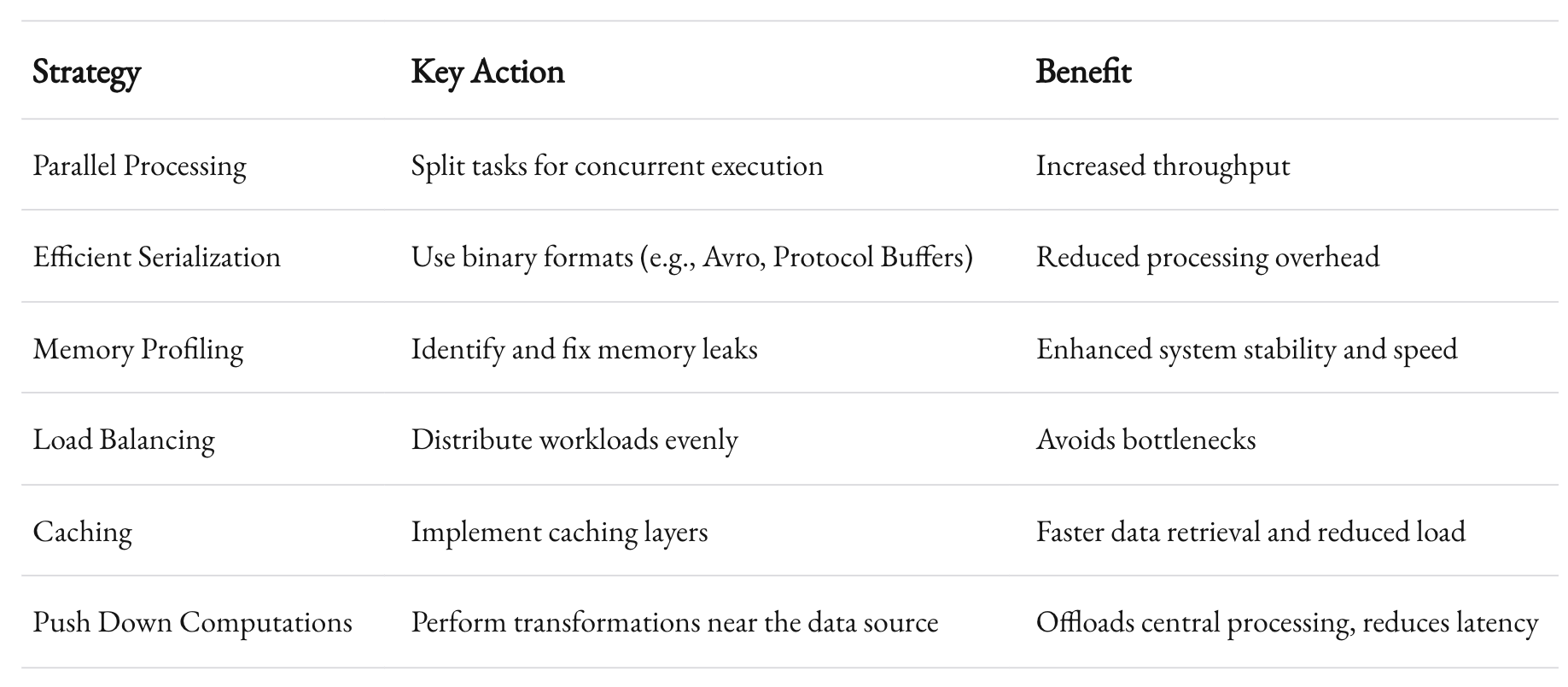

Performance Optimization Techniques

A scalable data integration system must be lightning-fast. Optimizing performance is a continuous process that involves fine-tuning each component of the pipeline. Here, I share some of the performance optimization strategies that have proven effective at 1985.

Optimizing Data Throughput

Data throughput is the rate at which data moves through the system. In high-volume environments, every millisecond counts. To optimize throughput, consider the following strategies:

- Parallel Processing: Split tasks into smaller sub-tasks that can be processed concurrently. Modern processors and distributed systems thrive on parallelism.

- Efficient Data Serialization: Use binary formats like Protocol Buffers or Avro instead of JSON or XML when performance is critical. These formats reduce data size and parsing overhead.

- Batch vs. Real-Time: Striking the right balance between batch processing and real-time processing is key. In some cases, micro-batching can offer the best of both worlds—processing data in small batches quickly rather than one record at a time.

At 1985, we continuously benchmark these strategies. For instance, switching from a traditional JSON-based approach to a binary format improved our data throughput by approximately 35% in one of our major projects.

Memory and Resource Management

High-performance systems demand efficient memory and resource management. This means not only ensuring that your system has the raw power it needs but also that it uses that power efficiently.

- Memory Profiling: Use tools to profile memory usage. Identify leaks or inefficient memory allocation patterns that could cause slowdowns over time.

- Load Balancing: Distribute workloads evenly across servers. This minimizes the risk of any single node becoming a bottleneck.

- Caching Strategies: Implement smart caching layers to reduce repetitive processing. A well-tuned cache can serve frequently requested data almost instantaneously, reducing load on your back-end systems.

Consider a scenario where a data integration system processes billions of transactions per day. Even a slight inefficiency can result in a significant performance hit. By carefully managing resources, you can ensure that your system remains responsive and resilient under heavy loads.

Streamlining Data Transformations

Data transformation is one of the most resource-intensive parts of the integration pipeline. Complex transformations can introduce latency if not optimized properly. Here are a few strategies:

- Push Down Computations: Where possible, perform transformations as close to the data source as possible. Many modern databases support in-database processing, which can offload work from your central processing unit.

- Optimize Algorithms: Choose the right algorithms for your transformation tasks. Sometimes a simple change in the approach can yield a dramatic improvement in performance.

- Lazy Evaluation: Delay expensive computations until absolutely necessary. This approach can prevent the system from doing unnecessary work.

In our own projects at 1985, we’ve observed that rethinking transformation logic and minimizing unnecessary computations can cut processing time by up to 20%. These savings add up, especially when you’re handling data at scale.

This table illustrates the multi-pronged approach required to keep data integration systems fast and reliable, even as data volumes surge.

The Human Factor in Optimization

While technology provides us with the tools, the human factor—experience, intuition, and constant vigilance—plays an equally important role. It’s critical to have a culture of continuous improvement. At 1985, we hold regular performance review sessions. We analyze system logs, track performance metrics, and experiment with new technologies and techniques. It’s this commitment to relentless improvement that allows us to stay ahead in an ever-changing technological landscape.

Case Studies: Real-World Applications

Theory is important, but nothing beats the lessons learned from real-world applications. Over the years, we at 1985 have worked with companies across various industries—from e-commerce to healthcare—to build data integration systems that scale. Let’s take a look at a couple of case studies that highlight the practical application of these technical strategies.

Case Study 1: E-Commerce Platform Integration

Background:

An emerging e-commerce platform needed to integrate data from multiple sources: website traffic logs, user activity, inventory databases, and third-party payment gateways. The system had to process data in near real-time to power dynamic pricing and personalized recommendations.

Challenges:

- High data velocity and volume from user interactions.

- Integrating disparate data sources with varying formats.

- Ensuring data quality and consistency for real-time analytics.

Solutions Implemented:

- Microservices Architecture: The project was segmented into services for ingestion, transformation, and loading. Each service was containerized using Docker and orchestrated via Kubernetes. This allowed each component to scale independently.

- Stream Processing: We employed Apache Kafka for real-time message queuing. This decoupled data producers from consumers, ensuring that spikes in traffic did not overwhelm the system.

- Validation Patterns: Rigorous schema validation and business rule checks were embedded into the data pipeline. This prevented erroneous data from entering the analytics engine.

- Performance Optimization: By switching to Protocol Buffers for data serialization and leveraging in-database processing for transformations, we reduced latency significantly.

Results:

The platform saw a 40% improvement in real-time processing speed. The reliability of the system increased dramatically, and the business was able to implement dynamic pricing strategies that boosted revenue by 15% within the first quarter of implementation.

Case Study 2: Healthcare Data Integration

Background:

A mid-sized healthcare provider needed to integrate patient data from various sources, including electronic health records (EHR), lab results, and insurance claims. The system had to comply with strict data quality and privacy standards, while also being scalable enough to accommodate future growth.

Challenges:

- Handling sensitive patient data with a zero-tolerance approach to errors.

- Ensuring compliance with healthcare regulations.

- Integrating both structured and unstructured data from diverse sources.

Solutions Implemented:

- Automated Validation: The system was built with automated validation pipelines that enforced not only schema correctness but also data sensitivity rules. Any anomalies triggered immediate alerts for human review.

- Scalable Infrastructure: Using cloud-based data warehouses and a microservices architecture, the system was designed to handle increased data loads seamlessly.

- Performance and Security: Advanced encryption was applied to data at rest and in transit, ensuring compliance with HIPAA regulations. Performance tuning, including caching and load balancing, ensured that the system could handle peak loads during busy hours.

Results:

The healthcare provider achieved a 99.99% uptime with near real-time data integration. The improved data quality led to more accurate patient records and enhanced decision-making for both clinicians and administrators. The project also set a benchmark in the industry for integrating sensitive healthcare data without compromising on speed or security.

Best Practices and Pitfalls

Building scalable data integration systems is as much an art as it is a science. Over the years, I’ve seen what works and what doesn’t. Here are some best practices and common pitfalls that I’ve gathered from our experiences at 1985.

Best Practices

- Design for Modularity:

Break your system into independent, reusable components. This makes it easier to update or replace parts of your system without major overhauls. - Invest in Automation:

Manual interventions can slow you down and introduce errors. Automation in validation, monitoring, and scaling is key to maintaining high performance. - Continuous Monitoring:

Use robust monitoring tools to keep an eye on every part of your system. Early detection of anomalies can prevent small issues from snowballing into major problems. - Performance Testing:

Regularly test your system under load. Simulate peak traffic conditions to identify and resolve bottlenecks before they become critical. - Prioritize Security and Compliance:

Data breaches and compliance failures can be disastrous. Always incorporate security best practices and ensure your system complies with relevant regulations. - Documentation and Knowledge Sharing:

A well-documented system helps onboard new team members quickly and serves as a reference during troubleshooting. Encourage a culture of continuous learning and sharing of best practices.

Common Pitfalls

- Ignoring the Human Element:

Technology is crucial, but never underestimate the importance of human oversight. No automated system is infallible. Always have a plan for human intervention when anomalies occur. - Over-Engineering:

While it’s tempting to build a system that does everything, over-engineering can lead to unnecessary complexity and maintenance headaches. Keep your design as simple as possible while still meeting your requirements. - Lack of Scalability Planning:

Many systems work well under current loads but crumble when faced with growth. Always plan for future expansion by designing systems that are inherently scalable. - Underestimating Data Quality Challenges:

Data quality is often an afterthought. A minor error in the validation process can ripple through your entire system, causing significant issues downstream. - Neglecting Documentation:

A lack of proper documentation can lead to knowledge silos and inefficiencies, especially when new team members join. Make sure to document your processes and system architecture comprehensively.

The Future of Data Integration

Data integration is a field that’s constantly evolving. With the advent of AI, machine learning, and advanced analytics, the systems we build today must be flexible enough to adapt to tomorrow’s demands. Here’s what I see on the horizon:

The Rise of Intelligent Integration

Artificial intelligence is poised to revolutionize data integration. Imagine a system that not only processes data but also learns from it—optimizing validation patterns and performance parameters dynamically. At 1985, we’re already experimenting with machine learning models that predict system bottlenecks and automatically adjust resource allocation. Early tests have shown promising results, with improvements in both speed and reliability.

Edge Computing and Data Integration

The proliferation of IoT devices means that data is being generated at the network’s edge. Traditional centralized processing models are giving way to edge computing, where data is processed closer to its source. This reduces latency and enhances real-time decision-making. Building scalable data integration systems in this context requires a paradigm shift: designing systems that are decentralized yet synchronized, capable of aggregating and validating data from countless edge nodes.

Hybrid Architectures

In the near future, we expect to see more hybrid architectures that blend cloud-based processing with on-premise systems. This hybrid model can offer the best of both worlds—scalability and flexibility from the cloud, with the control and security of on-premise solutions. Organizations that can successfully navigate this hybrid landscape will be well-positioned to leverage data as a strategic asset.

Industry Trends and Data Points

- Data Volume Growth: According to IDC, the global datasphere is expected to grow to 175 zettabytes by 2025, underscoring the need for scalable data systems.

- Real-Time Processing Demand: A recent study by Deloitte found that 87% of companies view real-time data integration as critical for their business operations.

- Automation Adoption: Gartner predicts that by 2026, nearly 50% of data integration processes will be fully automated, reducing human error and improving system responsiveness.

These trends reinforce the idea that investing in scalable, intelligent data integration systems isn’t just about keeping up—it’s about staying ahead.

Bringing It All Together

Scalable data integration isn’t a single technology or strategy—it’s a holistic approach that spans architecture, validation, performance, and continuous improvement. As we’ve seen, the journey from a small-scale system to one that can handle exponential growth involves multiple layers of technical strategy and careful planning.

At 1985, our approach is rooted in a deep understanding of both the technical and business challenges that modern enterprises face. We build our systems with an eye toward the future, always anticipating the next challenge and the next innovation. Our solutions are driven by the principles of modularity, resilience, and continuous optimization. These principles aren’t just theoretical—they’re proven in the field, as demonstrated by our successful projects in e-commerce, healthcare, and beyond.

The Importance of a Balanced Approach

There is no silver bullet. Instead, success comes from a balanced approach that considers every element of the data pipeline. From microservices and container orchestration to rigorous validation and smart performance optimization, every component plays a role in creating a system that scales gracefully.

It’s a journey that requires not just technical prowess but also a deep commitment to quality and reliability. When each part of the system is finely tuned, the whole becomes greater than the sum of its parts. That’s the power of well-engineered data integration.

Final Thoughts

As we continue to push the boundaries of what’s possible, one thing remains clear: data is more than just numbers and records. It’s the lifeblood of our digital economy. And as such, the systems that manage it must be built to last. They must be resilient, adaptable, and ready to meet the challenges of tomorrow.

I hope this deep dive into scalable data integration provides you with practical insights and inspires you to reimagine how you handle data in your organization. Whether you’re building your first data pipeline or re-engineering an existing one, remember that the key to success lies in balancing innovation with proven best practices.