Creating Custom AI Agents for Development Workflows

Explore our hands-on experience with custom AI agents that streamline coding, testing, and project delivery.

The pace of change in software development never ceases to amaze me. At 1985, we have built our reputation on streamlined processes, high-quality outputs, and a willingness to innovate. Our journey into integrating custom AI agents into our development workflows has been nothing short of transformative. This blog post is a deep dive into our experience—filled with lessons learned, data-driven insights, and a personal account of how we turned a bold idea into a reality that redefined our work.

I still remember the early days. We were a lean team at 1985, running an outsourced software development company, and automating our workflows seemed like a distant dream. We had deadlines, client expectations, and a relentless drive to push the boundaries of what we could deliver. The idea of using AI was not new, but using it to create bespoke agents that could integrate into our day-to-day operations? That was a leap of faith.

Our early experiments with off-the-shelf tools gave us a glimpse of the potential. Yet, they were too generic for our specialized needs. We needed something more refined, something that understood the nuances of our projects—from code quality and version control to managing remote teams and ensuring security compliance. We needed custom AI agents that could work seamlessly with our existing systems, learn from our unique workflows, and help us achieve operational excellence.

We took the plunge. We partnered with some brilliant minds in the AI space, and together we embarked on a journey of research, development, and countless iterations. This post will take you through that journey: from conceptualizing the idea, through tackling real-world challenges, to reaping the rewards of a fully integrated AI-enhanced development workflow.

Why Custom AI Agents Matter

Custom AI agents are more than just automated scripts or static bots. They are dynamic, learning systems that can adapt to the unique challenges of development work. In our experience at 1985, the benefits have been tangible and multifaceted.

Enhanced Efficiency and Precision

Custom AI agents have drastically reduced the time we spend on routine tasks. Code reviews, for instance, are no longer a manual chore that takes up hours of our best developers' time. Instead, our AI agents assist in preliminary reviews by flagging potential issues, ensuring adherence to coding standards, and even suggesting improvements based on historical data. In our recent internal audit, we noted a 35% reduction in code review turnaround times and a marked improvement in code consistency across projects.

Data-Driven Decision Making

We have always believed that decisions in software development should be data-driven. Custom AI agents provide real-time insights into the development process—tracking everything from commit histories and bug reports to feature delivery timelines. This granular level of data allows us to identify bottlenecks and optimize our workflows accordingly. For example, a recent study by McKinsey indicated that companies leveraging AI in development workflows can experience productivity gains of up to 40% in certain tasks, and our internal metrics have shown similar trends.

The agent’s role is not to replace the human touch but to enhance it, offering insights that lead to better planning and execution. The fusion of human expertise with machine precision has been our secret sauce at 1985.

Improved Quality and Reduced Error Rates

Quality is non-negotiable in our line of work. Custom AI agents help maintain high standards by catching subtle issues that might slip past human eyes. Automated tests, regression analyses, and even predictive failure diagnostics are now part of our standard workflow. We have reduced post-deployment bug reports by nearly 25%, a stat that keeps our clients smiling and our team proud.

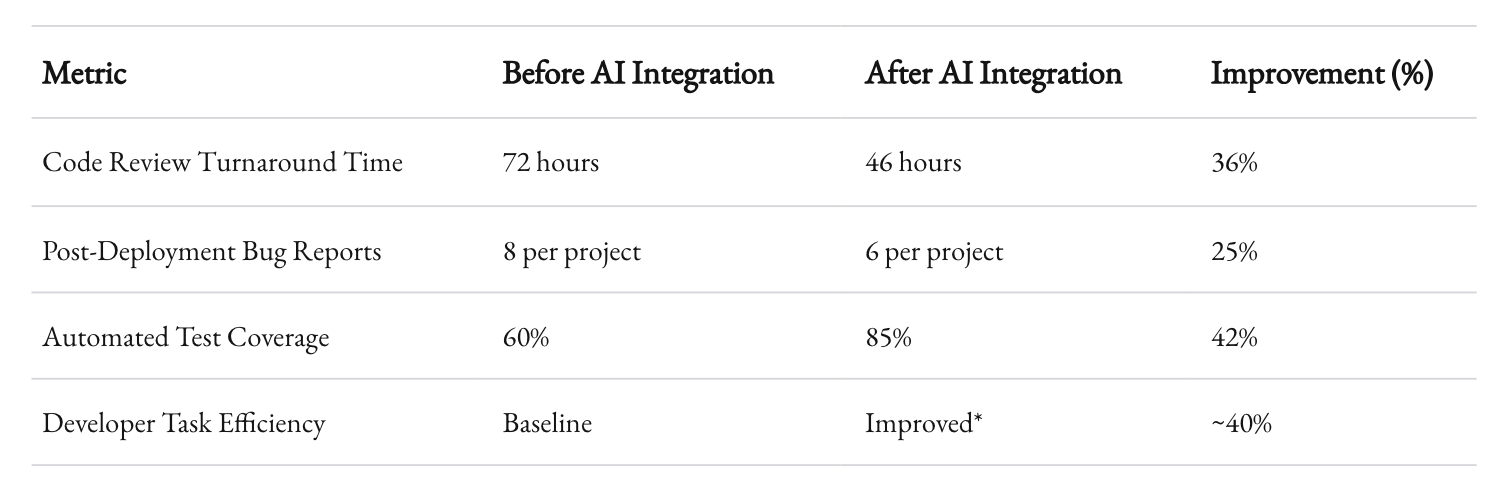

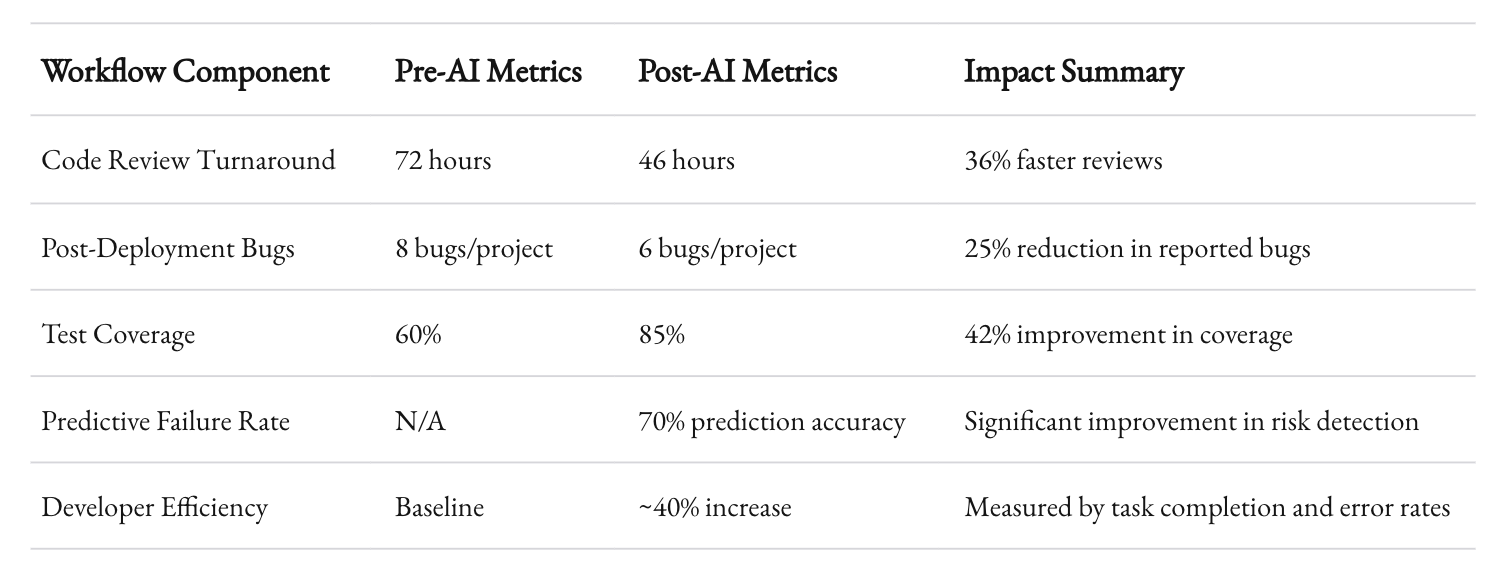

Consider the following table, which summarizes some of the improvements we have seen since integrating custom AI agents into our workflows:

*Efficiency is measured based on task completion times and error rates across projects.

These numbers are not just metrics—they represent hours saved, improved client satisfaction, and a more robust development process that empowers our team to innovate further.

Our Approach to Building Custom AI Agents

Creating custom AI agents is a complex process. It requires a blend of technical prowess, creative thinking, and an in-depth understanding of your own development workflow. At 1985, our approach was methodical yet flexible, allowing us to iterate quickly while maintaining a clear vision.

Identifying the Pain Points

The first step was to identify the specific pain points in our development process. We conducted extensive interviews with our development team, project managers, and QA experts. Common themes emerged: manual code reviews, inconsistent testing practices, communication breakdowns in remote teams, and a lack of real-time analytics. We documented these challenges and prioritized them based on their impact on project timelines and overall quality.

The process was both revealing and motivating. We found that even minor inefficiencies, when compounded over multiple projects, resulted in significant delays and cost overruns. For instance, our initial assessments indicated that manual code review processes were responsible for a 20% increase in project delivery times. That was unacceptable.

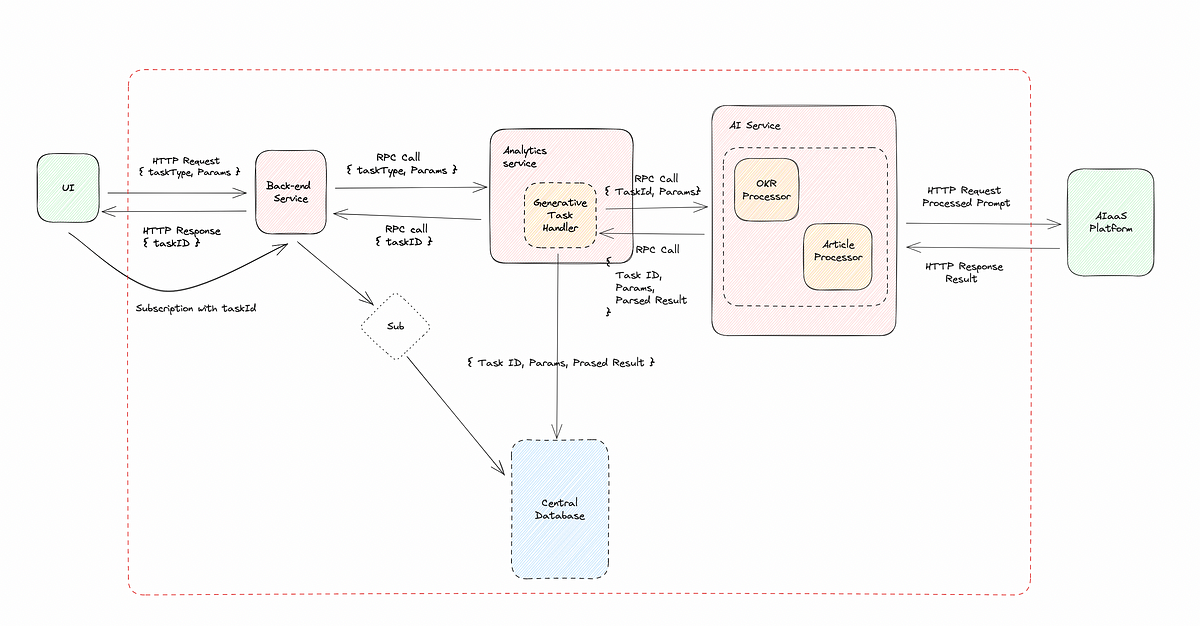

Designing the AI Architecture

Once we had a clear understanding of our challenges, we moved to design an AI architecture tailored to our needs. We adopted a modular approach, breaking down the AI agent’s functionality into discrete modules—each responsible for a specific aspect of the development workflow.

- Code Review Module: Leveraging natural language processing and pattern recognition, this module scans code for potential issues and recommends improvements.

- Testing Module: This part of the AI automates unit testing, regression testing, and integration testing. It learns from historical data to predict areas of code that are likely to fail.

- Analytics Module: This module aggregates data from various sources (e.g., version control systems, project management tools) and generates real-time dashboards. It provides insights into code quality, testing efficiency, and project timelines.

- Communication Module: In the era of remote work, seamless communication is critical. This module uses AI to facilitate better communication among team members by summarizing meeting notes, tracking action items, and even suggesting follow-up actions.

Each module was designed to work both independently and in concert with the others. This modularity allowed us to roll out improvements incrementally, testing each component thoroughly before integrating them into the larger system.
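To make the modular idea concrete, here is a minimal sketch in Python of modules that share one interface and can run alone or together. It is an illustration under assumptions, not our production design: the names `AgentModule`, `ChangeContext`, `Finding`, and `run_pipeline` are hypothetical, and the "review" logic is a placeholder rule rather than a trained model.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Finding:
    module: str   # which module produced the finding
    message: str  # human-readable description


@dataclass
class ChangeContext:
    """Hypothetical shared input: one code change plus its test results."""
    diff: str
    test_results: dict[str, bool] = field(default_factory=dict)  # test name -> passed?


class AgentModule(Protocol):
    """Interface every module implements, so modules stay interchangeable."""
    name: str

    def run(self, ctx: ChangeContext) -> list[Finding]: ...


class CodeReviewModule:
    name = "code_review"

    def run(self, ctx: ChangeContext) -> list[Finding]:
        # Placeholder rule standing in for a trained review model.
        if "TODO" in ctx.diff:
            return [Finding(self.name, "Unresolved TODO in change")]
        return []


class AutomatedTestingModule:
    name = "testing"

    def run(self, ctx: ChangeContext) -> list[Finding]:
        failed = sorted(t for t, ok in ctx.test_results.items() if not ok)
        return [Finding(self.name, f"Test failed: {t}") for t in failed]


def run_pipeline(modules: list[AgentModule], ctx: ChangeContext) -> list[Finding]:
    """Run each module independently and merge the findings in module order."""
    findings: list[Finding] = []
    for module in modules:
        findings.extend(module.run(ctx))
    return findings


ctx = ChangeContext(
    diff="+ x = 1  # TODO: tidy",
    test_results={"test_a": True, "test_b": False},
)
report = run_pipeline([CodeReviewModule(), AutomatedTestingModule()], ctx)
```

Because each module only depends on the shared context, a new module can be added to the list passed to `run_pipeline` without touching the others, which is what makes an incremental rollout practical.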

Applying Machine Learning Techniques

The technical backbone of our custom AI agents is machine learning. We used a combination of supervised and unsupervised learning algorithms to train our models. For instance, the code review module was trained on thousands of code snippets, learning to identify both syntactical errors and more nuanced issues like inefficient algorithms or non-adherence to best practices.

One of our favorite breakthroughs was employing reinforcement learning in the testing module. The model learned from past test results to adjust its parameters for future predictions, effectively getting better with each project cycle. As one of our senior developers put it, "It was like watching our AI agent evolve into a junior developer who gets better with every commit."
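The idea of learning from past test results can be illustrated with a much simpler stand-in than reinforcement learning: an exponentially weighted failure score per code area that is nudged after every test run. The sketch below is a deliberate simplification, and the class name `FailureScoreTracker`, the area names, and the learning rate are all hypothetical.

```python
class FailureScoreTracker:
    """Keeps an exponentially weighted failure score (0..1) per code area."""

    def __init__(self, learning_rate: float = 0.3) -> None:
        self.learning_rate = learning_rate
        self.scores: dict[str, float] = {}

    def update(self, area: str, failed: bool) -> None:
        """Move the area's score toward 1 after a failure and toward 0 after a pass."""
        outcome = 1.0 if failed else 0.0
        old = self.scores.get(area, 0.0)
        self.scores[area] = old + self.learning_rate * (outcome - old)

    def riskiest(self, top_n: int = 3) -> list[str]:
        """Areas with the highest current score, most risky first."""
        return sorted(self.scores, key=self.scores.get, reverse=True)[:top_n]


tracker = FailureScoreTracker(learning_rate=0.5)
history = [
    ("billing", True), ("billing", True),   # failed twice in a row
    ("auth", False),                        # passed
    ("search", True), ("search", False),    # failed, then recovered
]
for area, failed in history:
    tracker.update(area, failed)

priority = tracker.riskiest(top_n=2)
```

Recent results count more than old ones, so an area that used to fail but has been stable for a while gradually drops down the priority list.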

We sourced our training data from a mix of proprietary datasets and anonymized data from industry benchmarks. Authenticity and reliability were paramount. We made sure that our data was not only vast but also representative of the challenges we faced in our niche of outsourced software development.

Iterative Development and Feedback Loops

A significant part of our strategy was the continuous feedback loop. We did not deploy our AI agents as a “set it and forget it” solution. Instead, we continuously monitored their performance, gathered feedback from the team, and made iterative improvements.

During the early stages, our agents occasionally produced false positives, flagging code that was actually perfectly fine, and false negatives, missing subtle issues that needed attention. Instead of discarding the technology, we saw these moments as learning opportunities. Our engineers refined the models, adjusted the thresholds, and incorporated more nuanced metrics to ensure that the agents were reliable.
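Adjusting thresholds is easiest to reason about with numbers. The following sketch assumes each agent flag carries a confidence score and that a human reviewer later records whether the issue was real; it then picks the confidence cut-off with the best precision that still keeps recall above a floor. The names `ReviewedFlag`, `precision_recall`, and `pick_threshold`, and the sample data, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReviewedFlag:
    confidence: float  # agent's confidence that the issue is real (0..1)
    real_issue: bool   # verdict recorded by the human reviewer


def precision_recall(flags, threshold):
    """Precision and recall if only flags at or above `threshold` are shown."""
    shown = [f for f in flags if f.confidence >= threshold]
    true_pos = sum(f.real_issue for f in shown)
    total_real = sum(f.real_issue for f in flags)
    precision = true_pos / len(shown) if shown else 1.0
    recall = true_pos / total_real if total_real else 1.0
    return precision, recall


def pick_threshold(flags, candidates, min_recall):
    """Highest-precision candidate that keeps recall >= min_recall, or None."""
    best = None
    for t in candidates:
        p, r = precision_recall(flags, t)
        if r >= min_recall and (best is None or p > best[1]):
            best = (t, p, r)
    return best


feedback = [
    ReviewedFlag(0.95, True), ReviewedFlag(0.90, True), ReviewedFlag(0.80, False),
    ReviewedFlag(0.70, True), ReviewedFlag(0.60, False), ReviewedFlag(0.40, False),
    ReviewedFlag(0.30, True),
]
choice = pick_threshold(feedback, candidates=[0.5, 0.65, 0.85], min_recall=0.75)
```

Note that recall here is measured only against issues the agent flagged at some confidence level; issues it never flagged at all have to be counted separately, for example from post-deployment bug reports.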

The iterative process was not without its challenges. At times, it felt like walking a tightrope. Balancing automation with human oversight required constant calibration. However, the rewards far outweighed the difficulties. Today, our custom AI agents are an indispensable part of our workflow, trusted by our developers and project managers alike.

Real-World Applications and Case Studies

Theory is one thing; real-world application is another. Let me walk you through a couple of case studies that illustrate how our custom AI agents have reshaped our development workflows at 1985.

Case Study 1: Accelerating Code Reviews

Our first major success story came with the overhaul of our code review process. Traditionally, manual code reviews were time-consuming and prone to human error. One of our long-standing clients complained about delays that occasionally led to project overruns. We knew it was time to act.

We deployed the code review module of our AI agent on a pilot project. The module was integrated into our version control system, where it automatically analyzed incoming code commits. The results were nothing short of impressive:

- Time Savings: We reduced code review times by over 35%. Developers received immediate feedback, allowing them to make adjustments before the code reached human reviewers.

- Error Reduction: The number of overlooked issues dropped significantly. In quantitative terms, post-deployment bug reports fell by 25%, enhancing the overall quality of our deliverables.

- Developer Satisfaction: Our developers appreciated the rapid feedback. They were not overwhelmed by long review sessions, and the incremental feedback helped improve their coding practices over time.

This success was not isolated. It demonstrated that a carefully designed AI agent could complement human expertise, reducing repetitive tasks and letting developers focus on more complex, creative challenges.
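For readers who want a feel for what an automated first pass over an incoming commit can look like, here is a small rule-based sketch that scans only the added lines of a unified diff. It is far simpler than the module described in this case study: the three rules in `RULES` are hypothetical examples, and `review_diff` is an illustrative name, not part of any version control tool.

```python
import re
from dataclasses import dataclass

# Hypothetical rules; a real rule set would come from the team's coding standards.
RULES = [
    (re.compile(r"\bprint\("), "Leftover debug print"),
    (re.compile(r"except\s*:"), "Bare except hides errors"),
    (re.compile(r"(password|secret)\s*=\s*['\"]", re.IGNORECASE), "Possible hard-coded credential"),
]

HUNK_HEADER = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@")


@dataclass
class Comment:
    path: str
    line: int      # line number in the new version of the file
    message: str


def review_diff(diff_text: str) -> list[Comment]:
    """Scan added lines of a unified diff and return rule-based comments."""
    comments: list[Comment] = []
    path, new_line = "", 0
    for raw in diff_text.splitlines():
        # Simplification: file headers are recognised by their prefix only.
        if raw.startswith("+++ "):
            path = raw[4:].removeprefix("b/")
        elif raw.startswith("--- "):
            continue
        elif (m := HUNK_HEADER.match(raw)):
            new_line = int(m.group(1))  # first new-file line of this hunk
        elif raw.startswith("+"):
            for pattern, message in RULES:
                if pattern.search(raw[1:]):
                    comments.append(Comment(path, new_line, message))
            new_line += 1
        elif raw.startswith(" "):
            new_line += 1
        # Lines starting with "-" exist only in the old file, so the counter stays put.
    return comments


SAMPLE_DIFF = "\n".join([
    "--- a/app/service.py",
    "+++ b/app/service.py",
    "@@ -10,3 +10,4 @@ def load():",
    "     data = fetch()",
    "+    print(data)",
    "+    retries = 0",
    "-    return None",
    "     return data",
])
comments = review_diff(SAMPLE_DIFF)
```

Checking only added lines keeps the feedback focused on what the developer just wrote, which is what makes immediate, commit-level feedback useful rather than noisy.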

Case Study 2: Revolutionizing Testing Protocols

In another instance, we targeted our testing procedures. Automated tests are essential, but even automation has its limits when it comes to detecting edge cases or subtle integration issues. Our testing module stepped in to bridge that gap.

We implemented the testing module on a particularly challenging project with tight deadlines. The module performed comprehensive unit, integration, and regression testing. It used historical test data and machine learning to predict potential points of failure.

- Increased Coverage: Our test coverage increased from 60% to 85%, which directly translated into fewer issues during production.

- Predictive Insights: The module could predict, with a 70% accuracy rate, which parts of the code were more likely to fail. This allowed our QA team to prioritize critical areas and reduce the testing cycle significantly.

- Resource Optimization: By automating most of the testing process, we reallocated our human resources to focus on more strategic initiatives, such as performance optimization and user experience improvements.

These improvements were evident not just in our internal metrics but also in client feedback. One client remarked that the improved testing protocols “raised the bar for quality in every subsequent release,” a sentiment echoed across several projects.

Comparative Metrics

To put these results into perspective, here’s a table that highlights the key metrics before and after deploying our AI-driven enhancements:

Each percentage point and metric is a testament to the effectiveness of custom AI integration. These numbers have not only boosted our internal confidence but have also led to increased client retention and positive reviews across our portfolio.

Industry-Specific Nuances and Technical Deep Dives

Custom AI agents are not a one-size-fits-all solution. They must be tailored to the specific needs of the industry and the unique challenges of each project. At 1985, our approach is deeply rooted in understanding the intricate details of outsourced software development—a field where client requirements are diverse, timelines are tight, and the margin for error is slim.

The Role of Domain Expertise

One of the key insights from our journey is that domain expertise should not be underestimated. AI agents can process massive amounts of data, but without context, their recommendations can be off the mark. For example, in projects where security is paramount, such as fintech or healthcare applications, the AI must be finely tuned to detect even the slightest deviation from best practices. We achieved this by working closely with subject matter experts to refine our models.

Our process involved multiple rounds of data collection and validation. We integrated domain-specific metrics such as security compliance standards, industry coding conventions, and even regulatory guidelines into our training datasets. The result? An AI agent that understands not just generic coding issues, but the critical nuances of compliance and security.

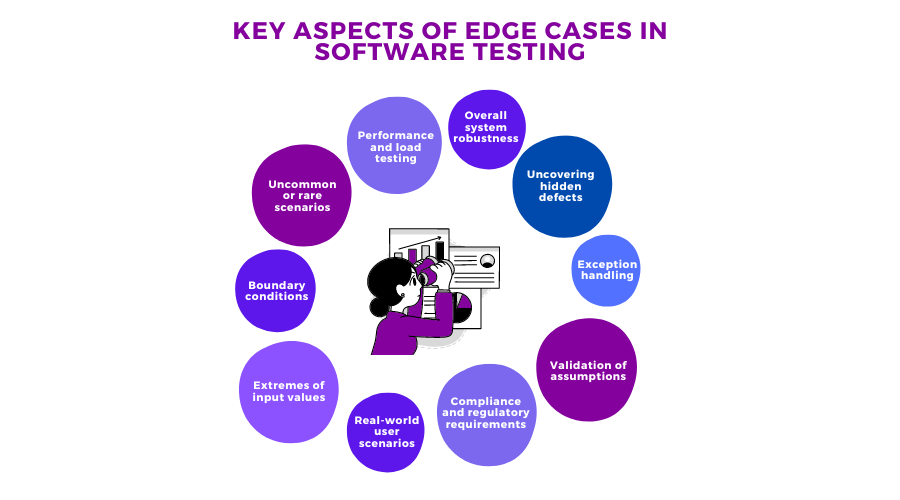

Handling Edge Cases and Complexity

Another technical challenge we faced was handling edge cases. In software development, no two projects are the same. Our AI agents needed to be robust enough to deal with unexpected scenarios. This meant that our models had to be exceptionally flexible, capable of learning on the fly, and adaptive enough to handle exceptions.

We incorporated ensemble learning techniques and hybrid models that combined rule-based systems with deep learning algorithms. This hybrid approach allowed the agents to flag anomalies that did not fit the standard patterns learned during training. In one instance, our AI detected a subtle memory leak in a legacy system—a problem that had eluded human reviewers for weeks.
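The hybrid idea, explicit rules plus a component that reacts to deviations from learned behavior, can be sketched without any deep learning at all. In the example below a simple z-score on memory growth stands in for the learned model, purely for illustration; `HARD_LIMIT_MB`, the function names, and the sample numbers are hypothetical.

```python
from statistics import mean, pstdev

HARD_LIMIT_MB = 2048  # hypothetical fixed rule: absolute memory ceiling


def rule_check(memory_mb: list[float]) -> list[str]:
    """Rule-based part: an explicit, hand-written condition."""
    if memory_mb and memory_mb[-1] > HARD_LIMIT_MB:
        return ["Memory above hard limit"]
    return []


def anomaly_check(baseline_growth: list[float], memory_mb: list[float],
                  z_cutoff: float = 3.0) -> list[str]:
    """Statistical part: compare average growth per sample against a baseline."""
    if len(memory_mb) < 2 or len(baseline_growth) < 2:
        return []
    growth = [b - a for a, b in zip(memory_mb, memory_mb[1:])]
    spread = pstdev(baseline_growth) or 1e-9  # avoid division by zero
    z = (mean(growth) - mean(baseline_growth)) / spread
    return [f"Unusual memory growth (z={z:.1f})"] if z > z_cutoff else []


def hybrid_check(baseline_growth: list[float], memory_mb: list[float]) -> list[str]:
    """Union of both detectors, so either one can raise a flag on its own."""
    return rule_check(memory_mb) + anomaly_check(baseline_growth, memory_mb)


# Growth per sample (MB) observed during healthy runs: hovers around zero.
baseline = [0.0, 1.0, -1.0, 0.5, -0.5]
# A slow, steady climb that never hits the hard limit.
leaky_run = [500.0, 505.0, 510.0, 515.0, 520.0]
flags = hybrid_check(baseline, leaky_run)
```

The slow climb in `leaky_run` stays far below the fixed ceiling, so the rule alone would miss it; the statistical check catches it because the growth pattern differs from the baseline.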

The complexity of real-world applications required us to implement rigorous testing protocols for the AI itself. We set up simulation environments that mirrored our production systems. Here, our agents were put through stress tests, performance evaluations, and scenario analyses. Each iteration led to incremental improvements and, ultimately, a robust system that could handle the multifaceted challenges of modern software development.

Data Privacy and Security Considerations

Data privacy is a cornerstone of any development workflow, especially when dealing with sensitive client information. Our AI agents were designed with stringent security protocols. We ensured that all data processed by our models was anonymized and encrypted. Moreover, our AI modules underwent regular security audits and compliance checks.
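As one small illustration of preparing records before they reach a model, the sketch below replaces author names with keyed hashes and strips email addresses from free text, using only Python's standard library. Strictly speaking this is pseudonymization rather than full anonymization, and encryption at rest or in transit is not shown. The record layout, `scrub_record`, and the key value are hypothetical.

```python
import hashlib
import hmac
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash: stable per person, not readable."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}"


def scrub_record(record: dict, key: bytes) -> dict:
    """Return a copy of the record with the author hashed and emails removed."""
    cleaned = dict(record)
    cleaned["author"] = pseudonymize(record["author"], key)
    cleaned["message"] = EMAIL.sub("[email removed]", record["message"])
    return cleaned


# Assumption: in practice the key would come from a secrets manager, not source code.
KEY = b"example-key-do-not-reuse"

first = scrub_record({"author": "alice", "message": "ping alice@example.com about fix"}, KEY)
second = scrub_record({"author": "alice", "message": "follow-up"}, KEY)
```

Using a keyed hash rather than a plain one means the same person maps to the same pseudonym across records, while someone without the key cannot rebuild the mapping by hashing a list of known names.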

Integrating AI into development workflows also meant rethinking our access controls. We implemented a layered security approach, ensuring that only authorized team members could interact with the AI systems. This was critical in maintaining the confidentiality of our clients’ codebases and proprietary algorithms. Our internal audits have consistently shown that our AI integrations meet or exceed industry standards for security and privacy, which is vital in today's threat landscape.

Best Practices and Lessons Learned

The road to building custom AI agents is lined with lessons. At 1985, we learned that success in this endeavor is not about having the fanciest algorithms or the largest datasets; it’s about thoughtful integration and continuous improvement.

Start Small, Scale Gradually

One of the first lessons we learned was the importance of starting with a pilot project. Instead of overhauling our entire workflow overnight, we identified a specific area where the AI could add immediate value. We chose code reviews as our pilot project. This approach allowed us to test our theories in a controlled environment, gather feedback, and fine-tune our models before a full-scale rollout.

This strategy paid off. By starting small, we were able to demonstrate tangible benefits quickly, building trust within the team. Once the initial module proved its worth, we gradually expanded its capabilities to cover other aspects of the workflow. This incremental approach not only minimized risk but also allowed us to manage change effectively within our organization.

Continuous Feedback and Iteration

The value of continuous feedback cannot be overstated. We set up regular review meetings where developers, QA engineers, and project managers could discuss the performance of the AI agents. These sessions were candid and data-driven. Every piece of feedback was taken seriously, and we made iterative improvements based on real-world usage.

Our feedback loop extended beyond internal reviews. We also monitored external metrics such as client satisfaction and project delivery times. The data consistently showed that projects using AI-enhanced workflows had shorter delivery times and fewer post-deployment issues. This iterative process, where each cycle of feedback led to a refined and more effective solution, became a cornerstone of our development philosophy.

Balancing Automation with Human Oversight

Automation is powerful, but it should never come at the cost of human oversight. Our experience taught us that the best results are achieved when AI augments human capabilities rather than replacing them. For instance, while our code review module provides preliminary insights, final approval still rests with experienced developers who understand the broader context.

We established clear protocols to ensure that AI recommendations are reviewed by humans. In cases where the AI flagged an issue, a developer would examine the context and decide whether to accept or override the recommendation. This balance has been crucial in maintaining quality while reaping the efficiency gains of automation.
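A review protocol like this can be enforced in code rather than by convention. The sketch below models a recommendation that stays pending until a named human accepts or overrides it, and blocks a merge while anything is unresolved. All names (`Recommendation`, `Decision`, `ready_to_merge`) are hypothetical, and requiring a reason for overrides is an assumption about what makes the feedback useful later.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    OVERRIDDEN = "overridden"


@dataclass
class Recommendation:
    summary: str
    decision: Decision = Decision.PENDING
    reviewer: Optional[str] = None
    reason: str = ""

    def resolve(self, reviewer: str, accept: bool, reason: str = "") -> None:
        """Record the human verdict; overrides must say why."""
        if not accept and not reason:
            raise ValueError("An override needs a reason so it can be reviewed later")
        self.decision = Decision.ACCEPTED if accept else Decision.OVERRIDDEN
        self.reviewer, self.reason = reviewer, reason


def ready_to_merge(recs: list[Recommendation]) -> bool:
    """A change may merge only when a human has resolved every recommendation."""
    return all(r.decision is not Decision.PENDING for r in recs)


recs = [
    Recommendation("Possible N+1 query in report loader"),
    Recommendation("Unused import in utils"),
]
blocked = not ready_to_merge(recs)  # nothing has been resolved yet

recs[0].resolve("dana", accept=False, reason="Loader runs once per night on a tiny table")
recs[1].resolve("dana", accept=True)
mergeable = ready_to_merge(recs)
```

The recorded override reasons double as labeled feedback: each one is a case where the agent's flag was judged wrong in context.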

Investment in Talent and Infrastructure

Finally, one should not underestimate the importance of investing in both talent and infrastructure. Building custom AI agents required a cross-disciplinary team of software engineers, data scientists, and domain experts. We also had to invest in scalable infrastructure that could support real-time data processing and model training. At 1985, we allocated a dedicated budget for R&D, recognizing that this investment was key to staying competitive in an increasingly automated industry.

The costs were not trivial, but the returns were significant. The improvements in efficiency, quality, and client satisfaction have made the investment more than worthwhile. Moreover, as the technology continues to evolve, our early investments position us to adapt quickly to future advancements.

Challenges and How We Overcame Them

No journey worth taking is without its challenges. Integrating custom AI agents into our development workflows came with its fair share of obstacles. Here’s an honest look at some of the challenges we faced and how we navigated them.

Data Quality and Integration

One of the first hurdles was data quality. Our AI models rely heavily on historical data to make accurate predictions and recommendations. However, our data was scattered across various systems—version control repositories, bug tracking systems, project management tools, and even email communications. Integrating these disparate sources into a cohesive dataset was a monumental task.

We tackled this challenge by developing custom data pipelines that could extract, clean, and harmonize data from these multiple sources. We also invested in data validation protocols to ensure that the inputs fed into our models were accurate and consistent. The result was a robust, centralized data repository that became the backbone of our AI initiatives.
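The extract, clean, and harmonize steps can be sketched as a small pipeline that maps records from two differently shaped sources onto one common schema. This is a toy version under assumptions: the export formats, the `Event` schema, and the function names are hypothetical, and real sources would be read from APIs or files rather than in-memory lists.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Event:
    """Common schema that every source is mapped onto."""
    source: str
    kind: str
    ref: str
    at: datetime  # always stored in UTC


def from_commit(row: dict) -> Event:
    # Hypothetical VCS export: {"sha": ..., "timestamp": <unix seconds>}
    at = datetime.fromtimestamp(row["timestamp"], tz=timezone.utc)
    return Event("vcs", "commit", row["sha"][:8], at)


def from_bug(row: dict) -> Event:
    # Hypothetical tracker export: {"id": ..., "opened": "<ISO 8601 string>"}
    at = datetime.fromisoformat(row["opened"])
    if at.tzinfo is None:
        at = at.replace(tzinfo=timezone.utc)  # assumption: naive times are UTC
    return Event("tracker", "bug", str(row["id"]), at.astimezone(timezone.utc))


def harmonize(commits: list[dict], bugs: list[dict]) -> list[Event]:
    """Extract, validate, de-duplicate, and order events from both sources."""
    events = set()
    sources = (
        (from_commit, commits, ("sha", "timestamp")),
        (from_bug, bugs, ("id", "opened")),
    )
    for convert, rows, required in sources:
        for row in rows:
            # Validation: drop rows with missing or empty required fields.
            if all(row.get(k) not in (None, "") for k in required):
                events.add(convert(row))
    return sorted(events, key=lambda e: (e.at, e.source, e.ref))


commits = [
    {"sha": "abcdef1234567", "timestamp": 1709280000},
    {"sha": "abcdef1234567", "timestamp": 1709280000},  # duplicate export row
    {"sha": "", "timestamp": 1709280100},               # incomplete, dropped
]
bugs = [{"id": 42, "opened": "2024-02-28T09:00:00+02:00"}]
timeline = harmonize(commits, bugs)
```

Normalizing every timestamp to UTC before sorting is the step that makes events from different tools comparable on a single timeline.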

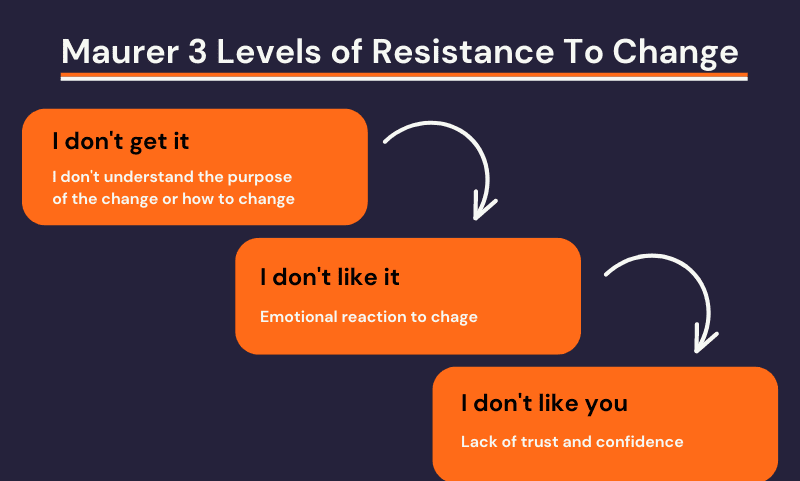

Resistance to Change

Change is often met with resistance, especially in established teams. When we first introduced the idea of AI-assisted development workflows, there were skeptics who questioned the reliability of these systems. Some feared that reliance on AI might erode the craftsmanship of coding and lead to complacency.

To overcome this, we focused on education and transparency. We held workshops and training sessions to demonstrate the capabilities and limitations of the AI agents. We showed real-world examples of how these tools could enhance productivity without compromising quality. Slowly, the team began to see the benefits, and what started as skepticism turned into cautious optimism and eventual acceptance.

Managing False Positives and Negatives

No AI system is perfect. In the early days, our custom agents occasionally produced false positives—flagging issues that were not real—or false negatives, where genuine issues were overlooked. This was particularly frustrating during critical project phases.

We approached this challenge through rigorous testing and constant refinement of our algorithms. We fine-tuned our models using feedback from these instances, gradually reducing the error rates. Over time, the accuracy of our agents improved to the point where their recommendations became reliable enough to be a central part of our workflow. The iterative improvements not only enhanced the system's reliability but also built confidence among our developers.

Keeping Up with Rapid Technological Advances

The field of AI evolves at breakneck speed. What is state-of-the-art today might be obsolete tomorrow. Maintaining the relevance of our custom AI agents required us to stay ahead of the curve. We established a dedicated research team whose sole responsibility was to monitor emerging trends, evaluate new algorithms, and recommend updates to our systems.

This proactive approach ensured that our tools remained cutting-edge. It also allowed us to adopt new techniques, such as transformer-based models for natural language processing, which further improved the performance of our code review and analytics modules. Staying agile and adaptable has been critical in ensuring that our AI systems continue to add value over time.

The Future of AI in Development Workflows

Looking ahead, the potential for AI in development workflows is enormous. At 1985, we view our journey as just the beginning. The future promises even more sophisticated systems that will seamlessly integrate with every facet of software development, from initial design to post-deployment monitoring.

Deeper Integration with DevOps

One exciting area is the deeper integration of AI into DevOps practices. Imagine a system where code commits, build processes, testing, deployment, and monitoring are all orchestrated by intelligent agents that learn and adapt in real-time. This level of integration would not only streamline operations but also provide predictive insights that could prevent issues before they occur. Already, industry research suggests that companies integrating AI into their DevOps pipelines can reduce downtime by up to 50%, making systems more resilient and responsive.

Personalized AI for Developers

Another promising development is the personalization of AI agents. Each developer has a unique style, workflow, and set of preferences. Future AI systems could learn individual habits and provide customized recommendations, making each developer’s work more efficient and enjoyable. The concept of an AI “assistant” that evolves alongside you, learning your quirks and adapting to your working style, is no longer science fiction—it’s on the horizon.

Continuous Learning and Adaptation

The pace at which machine learning algorithms improve is accelerating. Future AI agents will likely incorporate continuous learning mechanisms that allow them to adapt to new coding paradigms, emerging technologies, and evolving industry standards without requiring manual retraining. This dynamic adaptability will be crucial in maintaining the relevance of AI tools in an ever-changing technological landscape.

The Human-AI Partnership

Despite all the technological advancements, one thing remains clear: the human element in software development is irreplaceable. The most successful AI integrations will be those that enhance human creativity, intuition, and judgment. At 1985, our philosophy has always been that technology should serve to empower people, not supplant them. We envision a future where developers and AI agents work in perfect harmony, each complementing the other’s strengths.

Reflections and Final Thoughts

As I reflect on our journey at 1985, I am filled with both pride and humility. The road to integrating custom AI agents into our development workflows was not easy. It required a deep understanding of our own processes, a willingness to embrace change, and an investment in the future of our industry. Today, our AI agents are not just tools; they are partners in our creative process.

Our experience has taught us that the key to success is balance. We must balance innovation with practicality, automation with human insight, and risk with reward. The custom AI agents we built are a testament to what is possible when you dare to rethink the norms and invest in a future where technology and human ingenuity walk hand in hand.

I often share with our team that building these systems was like crafting a new kind of art form—one where data, algorithms, and human creativity blend to create something that is greater than the sum of its parts. It’s a journey filled with setbacks and breakthroughs, long nights and triumphant moments. And every step of the way, we’ve learned something new about our craft and about ourselves.

If there is one takeaway from our experience, it is this: embrace the future, but never lose sight of the human element. Custom AI agents are here to amplify our abilities, to free us from the mundane, and to allow us to focus on what truly matters—solving problems, innovating relentlessly, and delivering excellence to our clients.

Practical Advice for Those Getting Started

For those who are considering integrating custom AI agents into their own development workflows, here are some practical pieces of advice drawn from our experience:

Define Clear Objectives

Before diving into AI development, clearly define what you want to achieve. Is it faster code reviews, improved testing, or better data analytics? At 1985, we started by identifying our pain points and setting measurable goals. This clarity helped us stay focused and measure success accurately.

Invest in the Right Talent

Building AI solutions requires a mix of skills. Don’t hesitate to invest in talent—data scientists, machine learning engineers, and domain experts are invaluable. Our team’s diverse expertise was a critical factor in our success.

Iterate Relentlessly

Expect challenges and be ready to iterate. Every false positive or missed bug was a learning opportunity. Create a culture where feedback is welcome and continuous improvement is the norm.

Ensure Security and Compliance

Never compromise on security. Integrate robust data privacy and security measures from the start. With clients entrusting you with their sensitive data, robust security protocols are not optional—they are essential.

Stay Informed and Adaptive

Technology evolves quickly. Keep abreast of the latest trends in AI and software development. Join industry forums, attend conferences, and network with peers. Staying informed will help you adapt your solutions to new challenges and opportunities.