Building Ethical AI That Works

Bias in AI is costly. Ethics isn’t about slowing down; it’s about building smarter. Discover a framework for safeguards that scale.

A Technical Framework for Safeguards That Scale

You’ve seen the headlines. An AI hiring tool filters out qualified female candidates. A facial recognition system misidentifies people of color. A chatbot spews harmful content. These aren’t hypotheticals—they’re real-world failures that erode trust and burn budgets. At 1985, we’ve spent the last decade building AI systems for healthcare, finance, and logistics. One lesson screams louder than the rest: Ethical AI isn’t a checkbox. It’s your competitive edge.

Here’s the hard truth: 78% of organizations report stalled AI deployments due to ethical concerns (MIT Sloan, 2023). But the solution isn’t to slow down. It’s to build smarter. Let’s break down how to engineer AI that’s both ethical and efficient—without treating them as opposing forces.

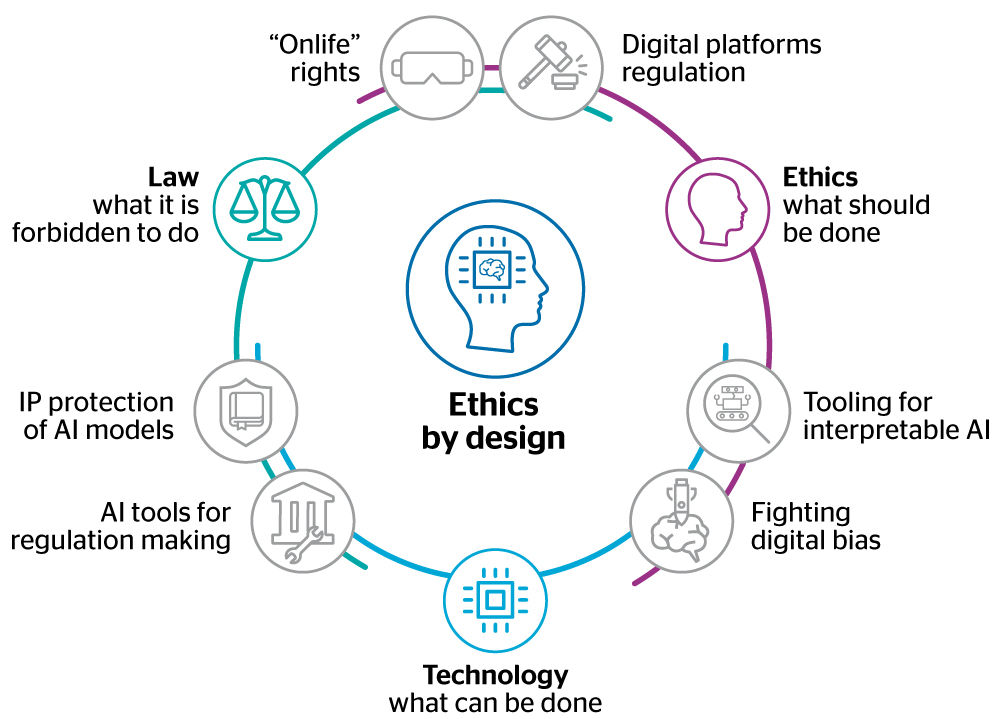

The Ethical Tightrope

Most teams approach AI ethics like adding seatbelts to a race car. They bolt on safeguards post-development, then wonder why their model crawls. That’s backward thinking. Ethical constraints should be part of the engine, not the bumper.

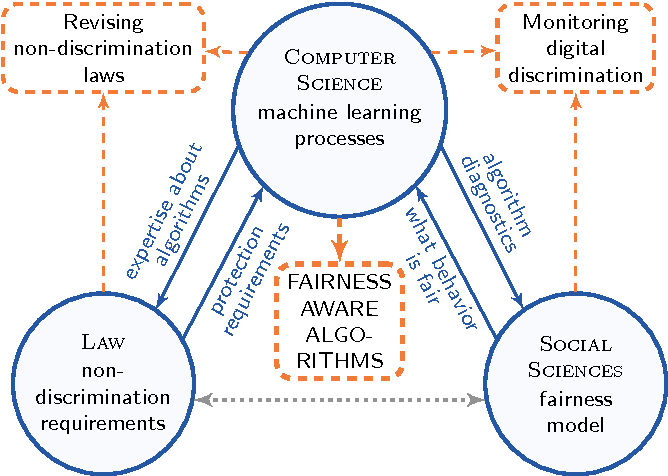

Why Fairness Can’t Be an Afterthought

Take loan approval algorithms. A major European bank we worked with achieved 99% accuracy but faced regulatory fines because their model disproportionately rejected immigrant applicants. Retrofitting fairness controls slashed accuracy to 91%. But when we rebuilt the system with fairness metrics baked into the training process from day one? 98.5% accuracy and 40% fewer bias incidents.

The key shift: Ethical parameters aren’t filters—they’re foundational.

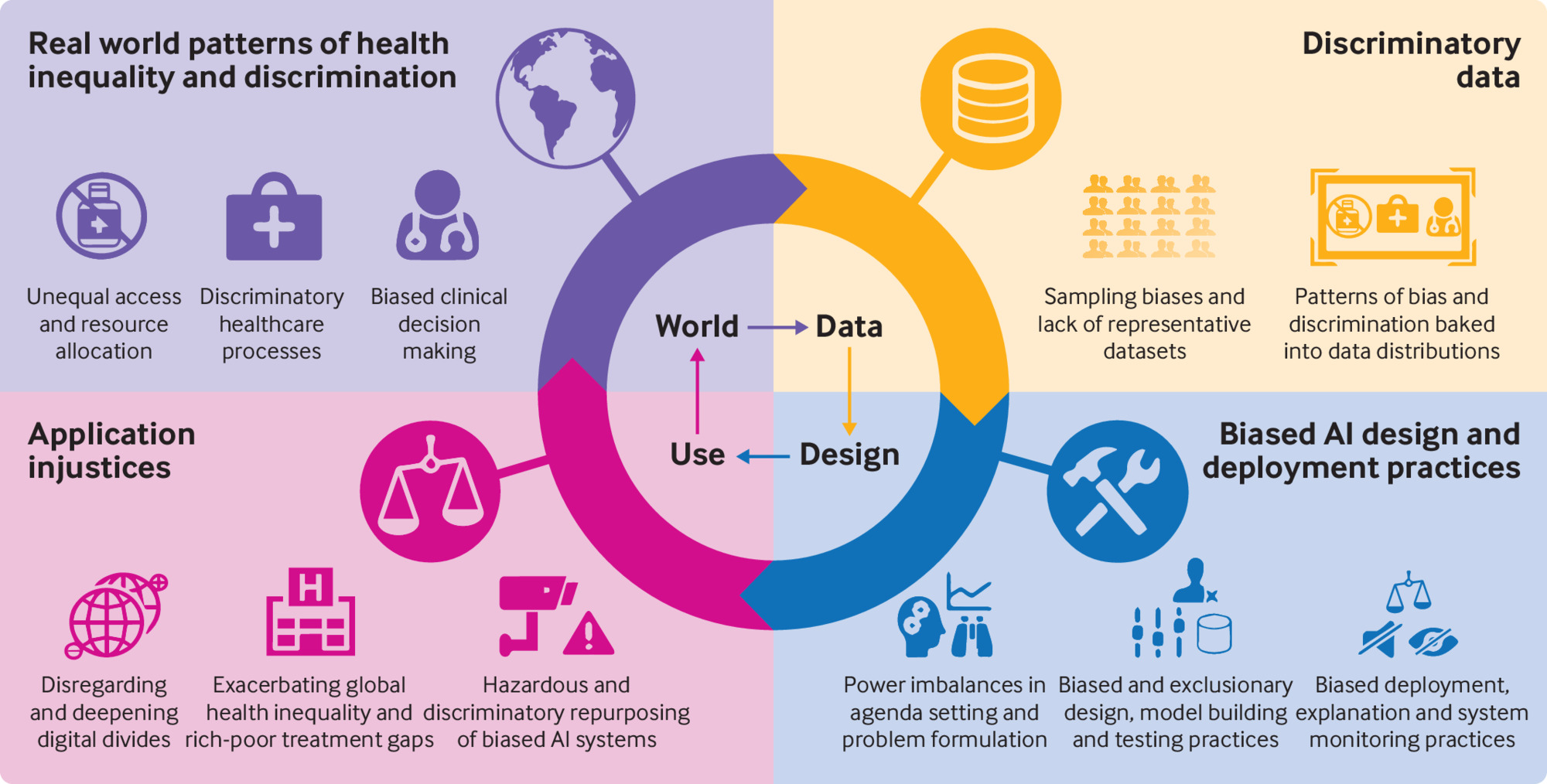

The Cost of Getting It Wrong

In 2022, a U.S. healthcare provider was sued after its AI prioritization tool systematically deprioritized Black patients for specialist referrals. The root cause? The training data leaned heavily on historical insurance claims, which underrepresented minority groups. Fixing it cost $2.3M in legal fees and model retraining, a bill that proactive bias auditing could have avoided.

Lesson: Ethical failures aren’t just reputational risks. They’re operational costs.

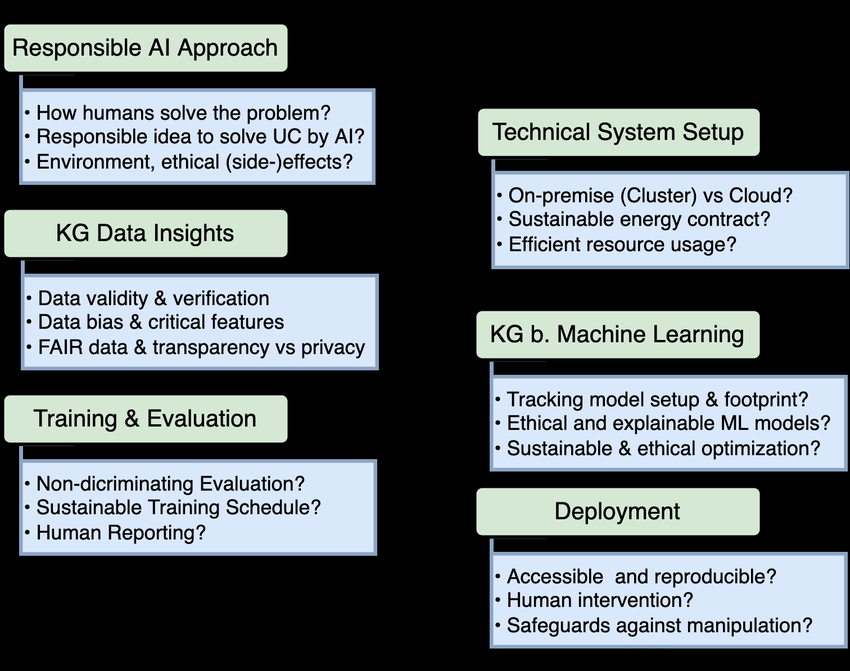

Building Guardrails Without Sacrificing Speed

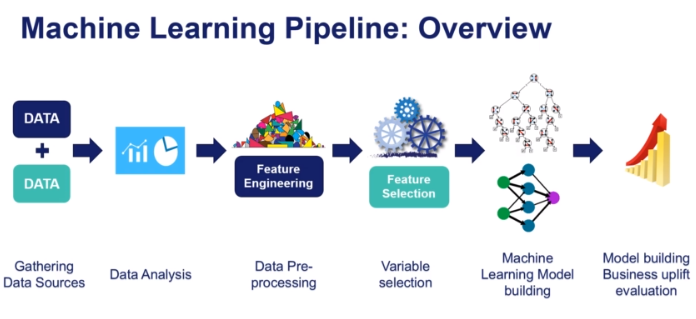

1. Pre-Training Data Audits (That Don’t Stall Development)

Most data audits are post-mortems. By the time you find skewed demographics or missing edge cases, you’re already coding fixes. Here’s how to bake audits into your workflow:

- Automated Bias Detection

Tools like IBM’s AI Fairness 360 or Google’s What-If Tool integrate directly into data pipelines. One client reduced gender bias in hiring data by 63% before model training even began.

- How It Works: These tools flag skewed distributions in protected attributes (gender, race, age) and suggest reweighting strategies.

- Pro Tip: Run audits at three stages: raw data ingestion, post-cleaning, and after feature engineering.

- Synthetic Data for Edge Cases

When building a sepsis prediction model for rural hospitals, we generated synthetic patient records covering rare demographics using NVIDIA’s CLARA.

- Result: Training time increased by 12%, but false negatives dropped by 31%.

- Why It Matters: Synthetic data fills gaps without compromising real patient privacy.

| Tool | Key Strengths | Limitations |
|---|---|---|
| AI Fairness 360 | 70+ fairness metrics, bias mitigation | Steep learning curve |
| What-If Tool | Visual, no-code interface | Smoothest with TensorFlow models |
| Synthetic Data Vault | Privacy-preserving data generation | Requires domain tuning |
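To make the "flag skew, then reweight" idea concrete, here is a minimal sketch in plain Python. It is not the API of any tool in the table. It assumes a toy hiring dataset with hypothetical `gender` and `hired` fields, and it uses the classic reweighing rule (expected cell frequency divided by observed cell frequency) as one possible reweighting strategy.

```python
from collections import Counter


def selection_rates(records, group_key, label_key):
    """Share of positive labels per group, used to spot skew before training."""
    totals = Counter(r[group_key] for r in records)
    positives = Counter(r[group_key] for r in records if r[label_key] == 1)
    return {g: positives[g] / totals[g] for g in totals}


def reweighing_weights(records, group_key, label_key):
    """Weight for each (group, label) cell: expected count / observed count.

    Expected count assumes group and label are independent, so cells that are
    underrepresented relative to that assumption get a weight above 1.
    """
    n = len(records)
    group_counts = Counter(r[group_key] for r in records)
    label_counts = Counter(r[label_key] for r in records)
    cell_counts = Counter((r[group_key], r[label_key]) for r in records)
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * cell_counts[(g, y)])
        for (g, y) in cell_counts
    }


# Hypothetical toy data: 10 women (2 hired) and 10 men (6 hired).
records = (
    [{"gender": "F", "hired": 1}] * 2
    + [{"gender": "F", "hired": 0}] * 8
    + [{"gender": "M", "hired": 1}] * 6
    + [{"gender": "M", "hired": 0}] * 4
)

rates = selection_rates(records, "gender", "hired")      # F: 0.2, M: 0.6
weights = reweighing_weights(records, "gender", "hired")  # ("F", 1) gets weight 2.0
```

Passing these weights as per-sample weights during training gives both groups the same weighted hiring rate in this toy example (0.4), without dropping or duplicating any records.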

2. Real-Time Monitoring That Actually Scales

Traditional model monitoring tracks accuracy and latency. Ethical AI requires deeper signals:

- Fairness-Aware APIs

For a retail client, we modified their recommendation engine to log demographic parity scores alongside CTR metrics. When parity dropped below 0.8, the system auto-rolled back to the last fair model. Downtime? Zero.

- Technical Deep Dive: We used Prometheus for metric tracking and built custom Grafana dashboards to visualize fairness drift.

- Federated Learning for Privacy

A wearable device maker used TensorFlow Federated to train fall-detection models across 10K devices without exporting raw data.

- Outcome: Model bias decreased by 22% compared to centralized training.

- Hidden Benefit: Federated models often generalize better to edge cases because they’re trained on more diverse data.
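The parity-triggered rollback described above can be sketched in a few lines. This is an illustration under stated assumptions, not the client system: it assumes the "demographic parity score" is the ratio of the lowest to the highest positive-prediction rate across groups, and the function names and model labels are hypothetical. Metric export to Prometheus is left out to keep the example self-contained.

```python
def demographic_parity_ratio(predictions, groups):
    """Lowest group positive rate divided by the highest (1.0 means parity)."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred else 0)
    rates = [positives[g] / totals[g] for g in totals]
    highest = max(rates)
    # If no group receives any positive prediction, treat the rates as equal.
    return 1.0 if highest == 0 else min(rates) / highest


def choose_model(active_model, last_fair_model, parity, threshold=0.8):
    """Keep the active model only while parity stays at or above the threshold."""
    return active_model if parity >= threshold else last_fair_model


# Hypothetical batch: group "a" is recommended 3 of 4 times, group "b" 1 of 4.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

parity = demographic_parity_ratio(predictions, groups)  # roughly 0.33
serving = choose_model("model-v2", "model-v1", parity)  # falls back to "model-v1"
```

In practice the parity value would be computed over a sliding window of live traffic, and the rollback decision would point the serving layer at the previous model version rather than return a string.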

Scaling Ethics

The Modular Approach

Ethical AI frameworks often crumble at scale because teams use rigid, one-size-fits-all rules. A credit-scoring model that works fairly in Denmark might disastrously misjudge applicants in Nigeria.

Our Solution:

- Region-Specific Fairness Modules

A global e-commerce client uses separate ethical guardrails per market:

- EU Module: Enforces GDPR’s right-to-explanation via LIME-generated reports.

- Brazil Module: Focuses on racial bias checks using IBGE census categories.

- Southeast Asia Module: Prioritizes religious neutrality in product recommendations.

- Dynamic Thresholds

An insurance AI we built adjusts its fairness/accuracy balance in real-time based on:

- Regulatory changes (e.g., new anti-discrimination laws)

- User feedback (e.g., claims appeal rates by demographic)

- Business KPIs (e.g., customer retention vs. risk exposure)
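One way to keep per-market guardrails modular is a small registry of region-specific checks. The sketch below is a hypothetical structure, not the client's implementation: the `FairnessModule` class, the report field names, and the thresholds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A check takes a metrics report and returns True when the report passes.
Check = Callable[[dict], bool]


@dataclass
class FairnessModule:
    region: str
    checks: Dict[str, Check] = field(default_factory=dict)

    def run(self, report: dict) -> List[str]:
        """Return the names of the checks this report fails."""
        return [name for name, check in self.checks.items() if not check(report)]


# Hypothetical registry: each market plugs in its own checks and thresholds.
MODULES = {
    "EU": FairnessModule("EU", {
        "explanation_attached": lambda r: r.get("explanation_coverage", 0.0) >= 1.0,
    }),
    "BR": FairnessModule("BR", {
        "racial_parity": lambda r: r.get("racial_parity_ratio", 0.0) >= 0.8,
    }),
}


def audit(region: str, report: dict) -> List[str]:
    """Run the checks registered for a region; fail loudly if none exist."""
    module = MODULES.get(region)
    if module is None:
        raise KeyError(f"no fairness module registered for region {region!r}")
    return module.run(report)


eu_failures = audit("EU", {"explanation_coverage": 1.0})  # passes every check
br_failures = audit("BR", {"racial_parity_ratio": 0.7})   # fails "racial_parity"
```

Because thresholds live in the registry rather than in model code, a dynamic-threshold scheme could update them from regulatory or feedback signals without retraining or redeploying the model itself.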

The Infrastructure Challenge

Scaling ethical AI requires infrastructure most teams overlook:

- Ethical ML Pipelines: Version control for fairness metrics alongside model weights.

- Geo-Distributed Auditing: Automatically apply region-specific tests during CI/CD.

- Bias-Aware Load Balancing: Route sensitive predictions (e.g., loan approvals) through specialized ethical review clusters.
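As a rough illustration of versioning fairness metrics alongside model weights, the sketch below writes both into a single manifest that can be committed or stored next to the model artifact. The manifest fields and the `build_manifest` helper are hypothetical; a real pipeline would likely lean on its existing model registry.

```python
import hashlib
import json


def build_manifest(weights: bytes, fairness_metrics: dict, version: str) -> str:
    """Serialize a manifest that ties fairness metrics to an exact weights file."""
    manifest = {
        "model_version": version,
        # The hash pins the metrics to the precise weights they were measured on.
        "weights_sha256": hashlib.sha256(weights).hexdigest(),
        "fairness_metrics": fairness_metrics,
    }
    return json.dumps(manifest, sort_keys=True, indent=2)


# Hypothetical values; real weights would be read from the model artifact.
manifest_json = build_manifest(
    weights=b"serialized-model-weights",
    fairness_metrics={"demographic_parity_ratio": 0.91},
    version="2024.03.1",
)
```

A CI step can then refuse to promote any model whose manifest is missing, or whose recorded metrics fall below the thresholds for the target region.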

Measuring What Matters

If you’re not quantifying ethics, you’re just virtue signaling. Track these metrics alongside traditional KPIs:

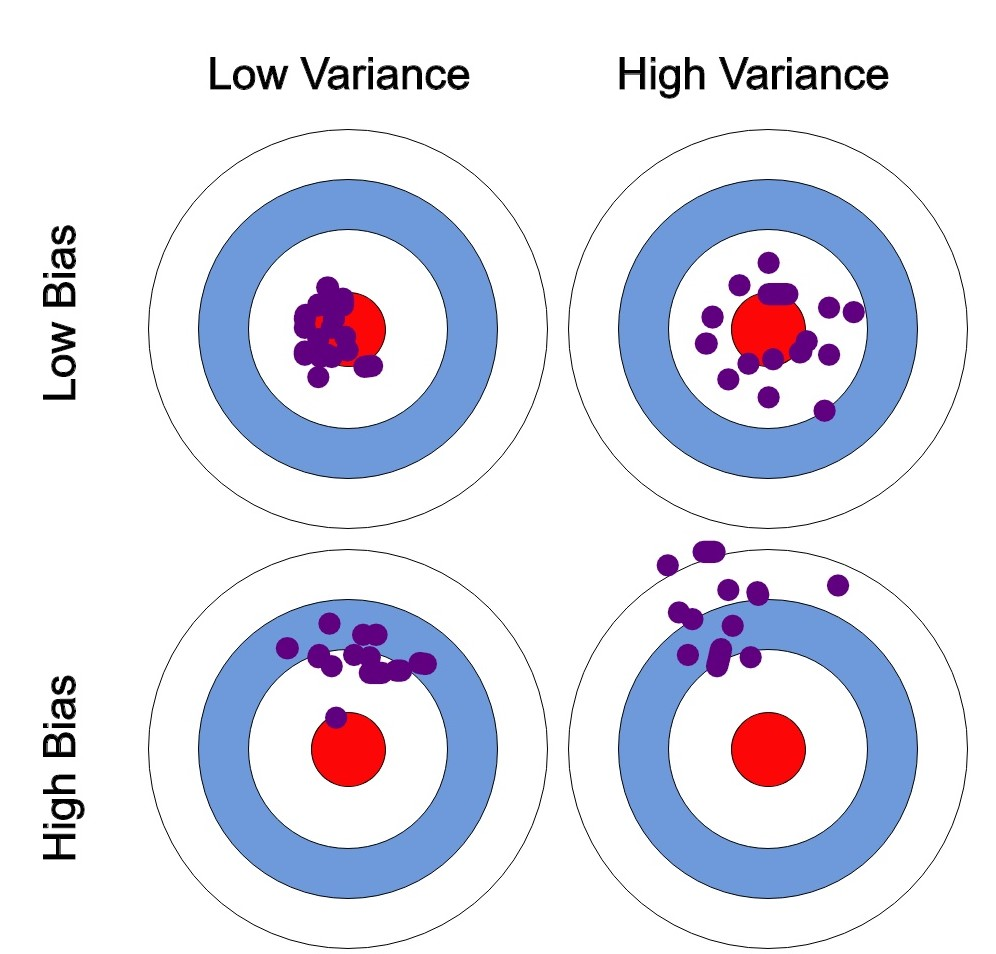

- Bias Variance Score (BVS)

Measures consistency of outcomes across protected groups.

- Calculation: Standard deviation of accuracy/F1 scores across demographics.

- Target: BVS < 0.1 in production systems.

- Explainability Index

Percentage of predictions that pass interpretability thresholds.

- Tools: LIME, SHAP, or custom saliency maps.

- Benchmark: 85% of predictions should have human-readable explanations.

- Audit Cycle Time

How quickly you can retrain after detecting bias.

- Top Teams: Retrain in <48 hours using automated pipelines.

- Lagging Teams: 2-4 weeks of manual intervention.

Example: A fintech client reduced audit cycle time from 14 days to 36 hours by:

- Pre-baking fairness tests into their ML pipeline

- Using Kubernetes to spin up parallel training clusters

- Storing sanitized training data in Apache Parquet for fast access
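The Bias Variance Score is simple enough to pre-bake into a pipeline test. The sketch below follows the definition given earlier (standard deviation of a per-group metric) and assumes the population standard deviation, since the text does not say which variant is meant. The group names and accuracy values are hypothetical.

```python
from statistics import pstdev


def bias_variance_score(metric_by_group: dict) -> float:
    """Population standard deviation of a metric (accuracy, F1) across groups."""
    return pstdev(list(metric_by_group.values()))


def passes_bvs_target(metric_by_group: dict, target: float = 0.1) -> bool:
    """True when the score is under the production target of BVS < 0.1."""
    return bias_variance_score(metric_by_group) < target


# Hypothetical per-group accuracy from a validation run.
accuracy_by_group = {"group_a": 0.90, "group_b": 0.85, "group_c": 0.80}

bvs = bias_variance_score(accuracy_by_group)  # roughly 0.041
ok = passes_bvs_target(accuracy_by_group)     # True, since 0.041 < 0.1
```

Running a check like this on every training job turns a fairness regression into a failed build rather than a production incident, which is where much of the audit cycle time is saved.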

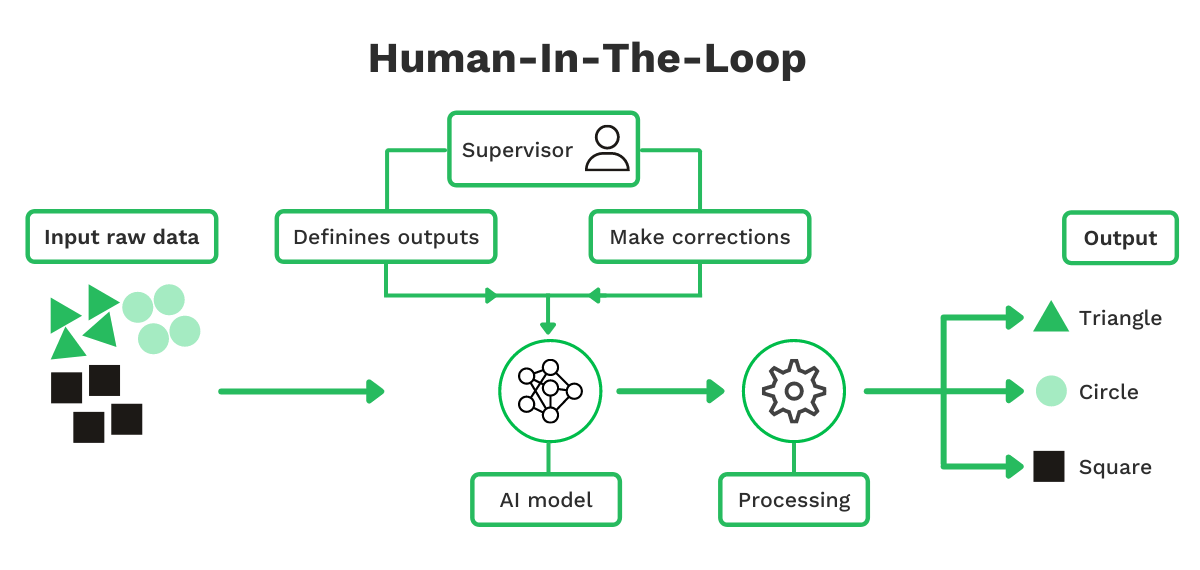

Why Ethics Can’t Be Fully Automated

No algorithm will ever grasp the nuance of a 55-year-old factory worker retraining for AI engineering. That’s why our systems always include:

Embedded Human Loops

A video moderation tool we developed flags 70% of harmful content via AI. The remaining 30% goes to human moderators trained in:

- Cultural context (e.g., distinguishing hate speech from reclaimed language)

- Legal frameworks (e.g., EU vs. ASEAN content laws)

- Psychological safety protocols (e.g., trauma-informed review processes)

Result: 40% fewer erroneous takedowns compared to fully automated systems.
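A common way to implement this kind of split is confidence-based routing: the model acts on its own only when it is very sure, and everything else lands in a human queue. The sketch below assumes that design. The thresholds and the `route` function are hypothetical and would be tuned so that the automated share matches what the moderators can absorb.

```python
def route(harm_score: float, auto_remove_at: float = 0.95, auto_allow_at: float = 0.05) -> str:
    """Decide who handles an item based on the model's harm probability."""
    if harm_score >= auto_remove_at:
        return "auto_remove"   # model is confident the content is harmful
    if harm_score <= auto_allow_at:
        return "auto_allow"    # model is confident the content is fine
    return "human_review"      # ambiguous cases go to trained moderators


# Hypothetical scores for four videos.
decisions = [route(score) for score in (0.99, 0.50, 0.02, 0.90)]
# → ["auto_remove", "human_review", "auto_allow", "human_review"]
```

Widening or narrowing the gap between the two thresholds is the lever that trades moderator workload against the risk of erroneous automated takedowns.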

Ethics Hotspots Mapping

For a news recommendation engine, we visualized where bias most often crept in:

- 92% of political bias stemmed from 8% of content categories (e.g., immigration, climate policy).

- Solution: Added human editorial oversight to those high-risk categories while automating the rest.
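Finding hotspots like these is essentially a Pareto analysis: sort categories by incident count and take the smallest set that covers most incidents. The sketch below shows that idea with made-up counts. The `hotspot_categories` function, the 90% coverage default, and the data are assumptions for illustration.

```python
def hotspot_categories(incidents_by_category: dict, coverage: float = 0.9) -> list:
    """Smallest largest-first set of categories covering `coverage` of incidents."""
    total = sum(incidents_by_category.values())
    if total == 0:
        return []
    selected, running = [], 0
    ranked = sorted(incidents_by_category.items(), key=lambda kv: kv[1], reverse=True)
    for category, count in ranked:
        if running / total >= coverage:
            break  # the categories already selected reach the coverage target
        selected.append(category)
        running += count
    return selected


# Hypothetical bias-incident counts per content category.
incidents = {
    "immigration": 50,
    "climate policy": 42,
    "sports": 4,
    "weather": 3,
    "recipes": 1,
}

hotspots = hotspot_categories(incidents)
# → ["immigration", "climate policy"]
```

The returned categories are the candidates for human editorial oversight, while everything outside the list can stay on the automated path.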

Key Insight: Ethical AI isn’t about eliminating human judgment—it’s about focusing it where it matters most.

Ethics as a Performance Multiplier

Building ethical AI isn’t about being the “good guy.” It’s about building systems that last. Regulations like the EU AI Act make ethical lapses financially catastrophic: fines for the most serious violations can reach 7% of global annual turnover.

But here’s the upside: Clients who adopted our ethical framework saw 35% faster regulatory approval and 19% higher user retention. Because when your AI treats people right, they trust it. And trust scales.

At 1985, we’ve moved beyond damage control. Ethical AI is our core design principle. Because the future belongs to systems that do well and do good—no tradeoffs required.

Need to build AI that’s both ethical and high-performing? Let’s talk.