AI Production Secrets Nobody Shares

Learn the overlooked truths of deploying AI in the real world, from infrastructure to explainability.

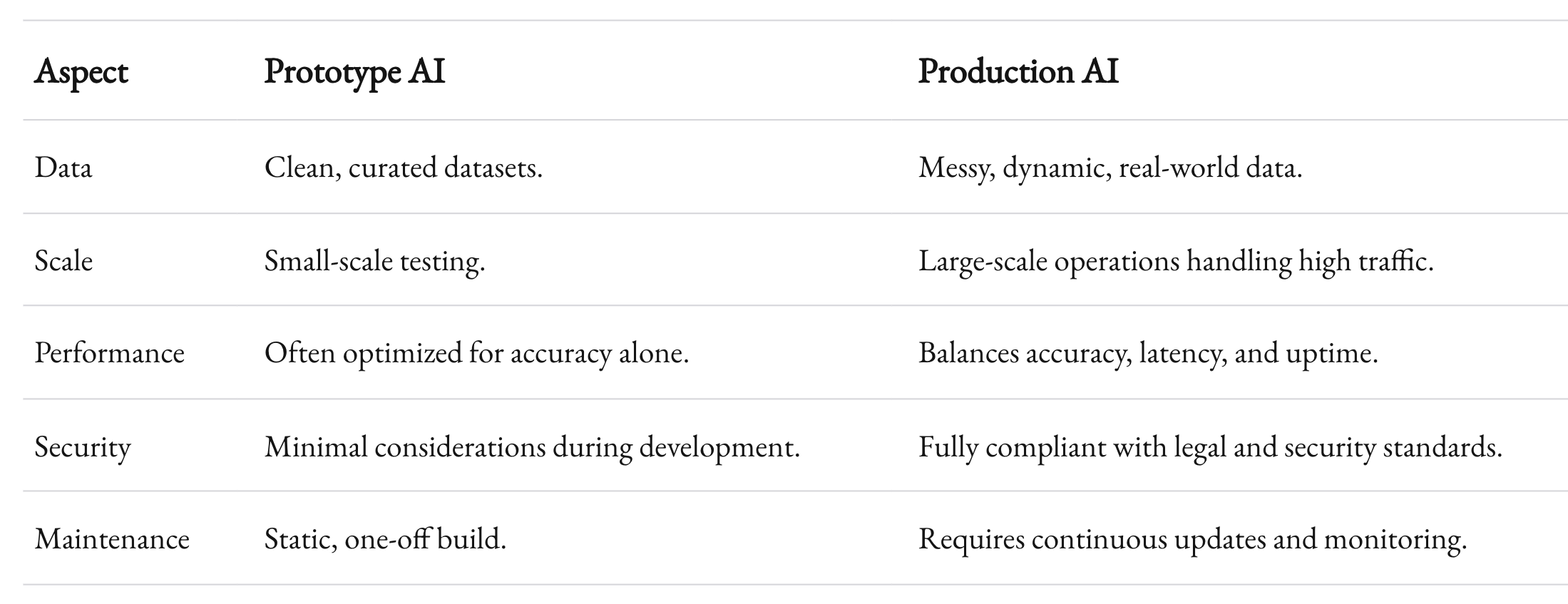

AI prototypes are exciting. They’re flashy, they work in controlled settings, and they promise to change the game. But getting AI from a polished demo to something that works in the messy, unpredictable real world? That’s where the magic—and the headaches—happen.

As someone who runs an outsourced software development company, 1985, I’ve seen the good, the bad, and the ugly of moving AI to production. It’s not glamorous work, but it’s where real value is created. And while everyone loves to show off their successful launches, no one talks about the battle scars. Let’s fix that.

This post pulls back the curtain. It’s a behind-the-scenes look at the pitfalls, lessons, and strategies that separate a slick prototype from a robust, scalable AI product.

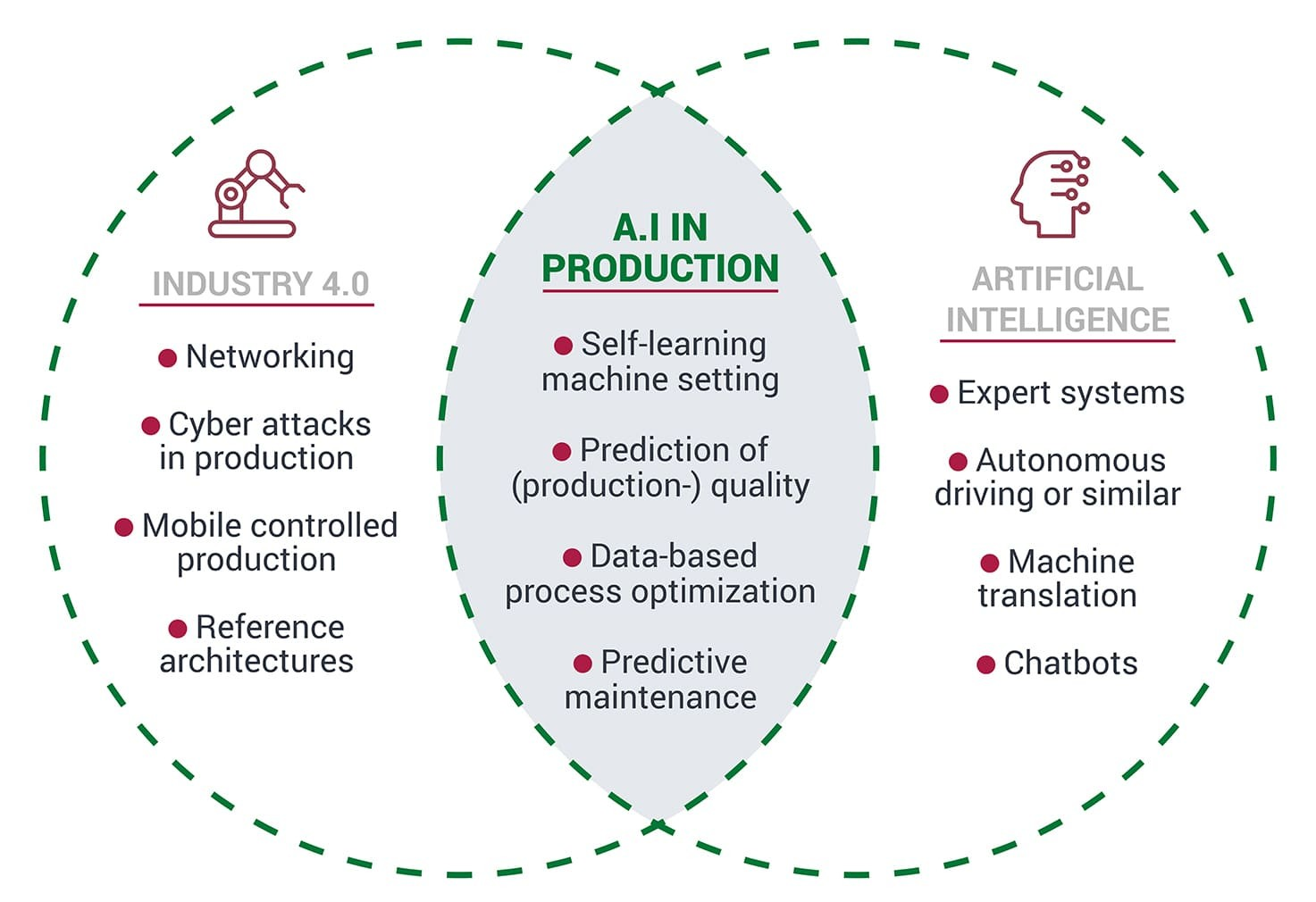

Why the Leap to Production Is Harder Than It Looks

In theory, AI prototypes should transition smoothly into production systems. But in practice? That’s rarely the case. Here’s why:

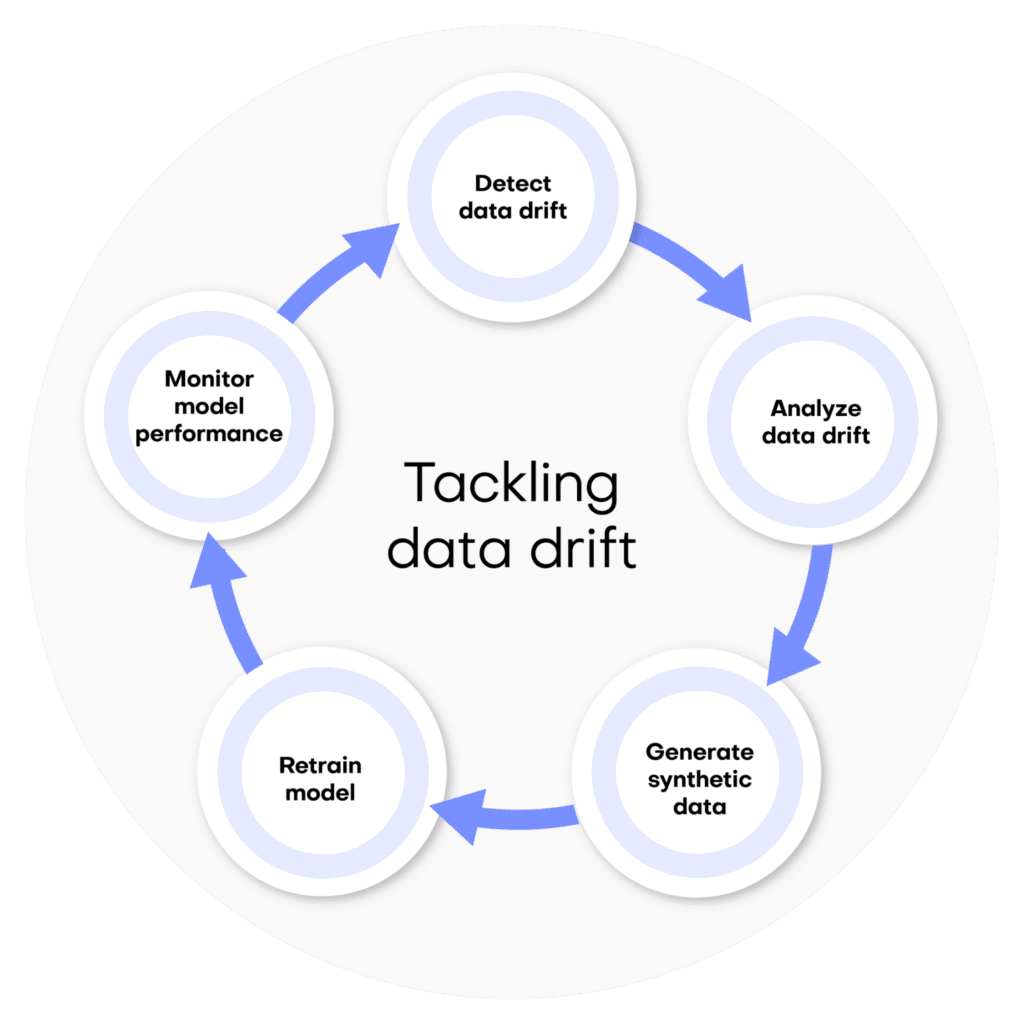

- Data Drift: Production data rarely looks like the training data. Real-world data is messy, incomplete, and ever-changing. For instance, a customer service chatbot might perform flawlessly in testing but flounder when customers use slang, typos, or unexpected languages.

- Infrastructure Demands: Running a model locally or in a sandbox environment is one thing. Scaling it to handle thousands or millions of requests per second is a different beast, and it takes robust infrastructure, from GPUs to distributed systems.

- Latency Expectations: In production, milliseconds matter. A prototype’s slightly laggy response might be acceptable during demos, but in live applications, it’s a dealbreaker. Users won’t wait.

- Compliance and Security: AI prototypes often sidestep legal and security concerns. In production, these are non-negotiable. Think GDPR compliance, data anonymization, and safeguards against leaking personally identifiable information (PII).
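The data drift point is the easiest of these to put a number on. Here is a minimal sketch of one common check, the Population Stability Index (PSI), which compares how a single numeric feature is distributed in training versus production. The function name, the bin count, and the clamping of out-of-range values are our own illustrative choices, not a standard API.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one numeric feature; larger means more drift."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("training sample has no spread; cannot build bins")
    width = (hi - lo) / bins

    def bin_fractions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical data: production values shifted upward relative to training.
training = [i / 100 for i in range(100)]
production = [x + 0.5 for x in training]
drift = population_stability_index(training, production)
```

A commonly quoted rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, but the right alert level depends on the feature and the business, so treat that number as a starting point.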

These challenges are why seasoned developers approach production with a mix of caution and rigor. Let’s dig deeper into some specific hurdles and how to overcome them.

Common Pitfalls (and How to Avoid Them)

Pitfall 1: Ignoring Real-World Data Variability

Your AI is only as good as the data it’s trained on. And yet, many teams build prototypes on curated, cleaned datasets that don’t reflect reality. The result? Models that crumble when faced with noise, outliers, or new patterns.

Case Study: Image Recognition Gone Wrong

A retail company developed an AI to identify products in customer-uploaded photos. It worked brilliantly in the lab. But when deployed, it misclassified items because:

- Customers used different lighting conditions.

- Backgrounds were cluttered.

- Photos included multiple products.

Lesson: Simulate real-world conditions during training. Augment your datasets with noisy, diverse examples. And invest in continuous learning pipelines to keep your model updated as new data streams in.
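To make the augmentation advice concrete, here is a small sketch that produces brighter, dimmer, and noisier copies of an image. It uses a toy grayscale format (nested lists of 0-255 integers) so that it runs with the standard library alone. The function names and the brightness and noise ranges are assumptions for illustration; a real pipeline would more likely use an image library and a wider set of transforms, such as cluttered backgrounds or multi-product crops.

```python
import random

Image = list[list[int]]  # grayscale pixels, 0-255 (hypothetical toy format)


def _clip(value: float) -> int:
    """Round to the nearest integer and keep it inside the valid pixel range."""
    return max(0, min(255, round(value)))


def augment(image: Image, rng: random.Random) -> Image:
    """Return a new image with random brightness scaling and per-pixel noise."""
    brightness = rng.uniform(0.6, 1.4)  # simulate dim or overexposed photos
    noise_std = rng.uniform(0.0, 12.0)  # simulate sensor or compression noise
    return [
        [_clip(pixel * brightness + rng.gauss(0.0, noise_std)) for pixel in row]
        for row in image
    ]


def augmented_batch(image: Image, copies: int, seed: int = 0) -> list[Image]:
    """Generate several augmented variants; a fixed seed keeps runs repeatable."""
    rng = random.Random(seed)
    return [augment(image, rng) for _ in range(copies)]


# Usage: turn one clean "lab" image into several messier training examples.
clean = [[0, 128, 255], [10, 20, 30]]
variants = augmented_batch(clean, copies=4, seed=1)
```

Seeding the generator is a deliberate choice here: when a model regresses, you want to be able to regenerate exactly the training set it saw.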

Pitfall 2: Overlooking Explainability

AI models can be black boxes. While that might fly in research, it’s a red flag in production. Users, stakeholders, and regulators need to understand why a model made a decision.

Example: Credit Scoring Models

When a bank’s credit scoring model started rejecting high-value customers, executives demanded answers. The developers couldn’t explain the model’s logic beyond vague statistical metrics.

Solution: Use techniques like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations). Both explain an individual prediction in terms of how much each input feature contributed to it. Make explainability a core feature, not an afterthought.
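To show what a Shapley-style explanation actually computes, here is a from-scratch sketch that calculates exact Shapley values for a tiny model. This is not the SHAP library’s API. It is only the underlying idea, with features outside a coalition replaced by a baseline value. The toy scoring function, its weights, and the feature order are all hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(
    model: Model, x: Sequence[float], baseline: Sequence[float]
) -> list[float]:
    """Exactly attribute model(x) - model(baseline) to each feature.

    Cost grows as 2**n, so this only works for a handful of features.
    """
    n = len(x)

    def value(coalition: frozenset) -> float:
        # Features in the coalition take the real value, the rest the baseline.
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_score(features: Sequence[float]) -> float:
    """Hypothetical credit score: income helps, late payments hurt."""
    income, late_payments, tenure = features
    return 0.5 * income - 2.0 * late_payments + 0.1 * tenure


applicant = [4.0, 3.0, 10.0]
typical_customer = [6.0, 0.0, 5.0]  # baseline the applicant is compared against
contributions = shapley_values(toy_score, applicant, typical_customer)
```

The contributions always sum to the gap between the applicant’s score and the baseline score, which is what lets you tell an executive "late payments account for most of the difference" instead of pointing at an aggregate metric. For real models with many features, exact enumeration is too slow, which is why libraries approximate it.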

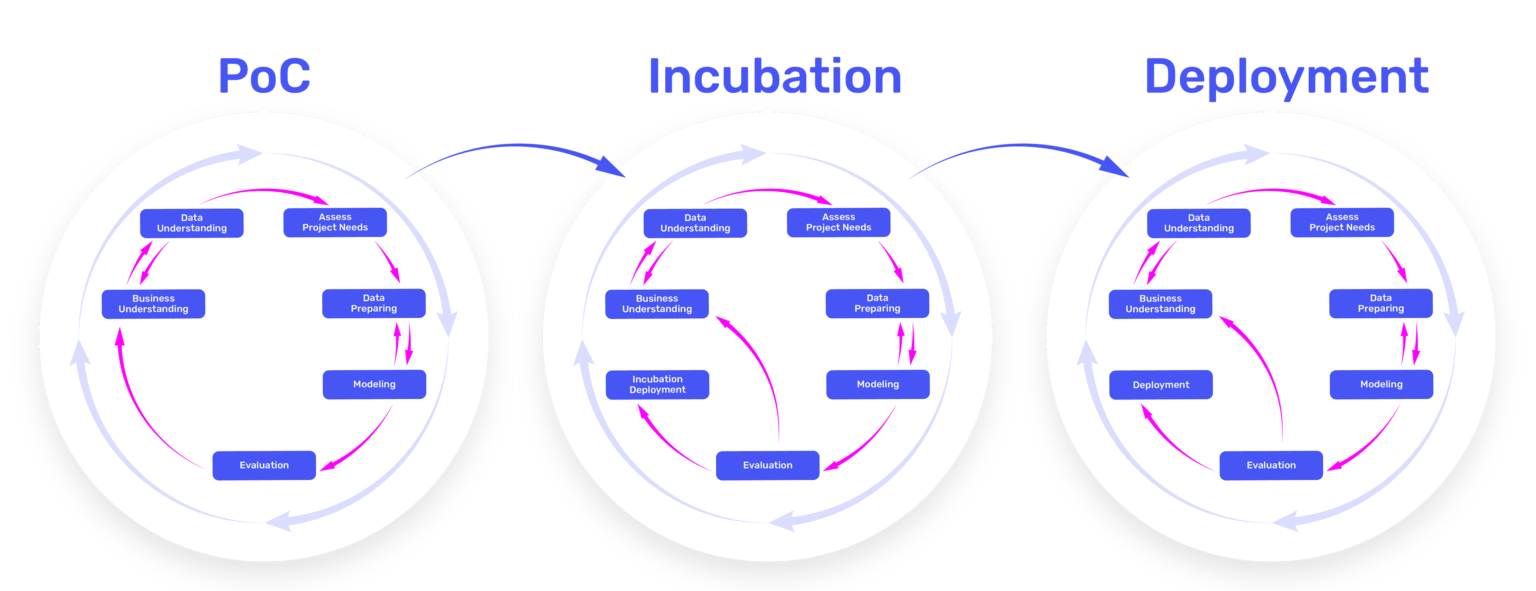

Pitfall 3: Underestimating Deployment Complexity

You’d think once the model is trained, deployment is straightforward. Think again. From containerization to A/B testing, deployment introduces layers of complexity.

Real-World Example: A/B Testing Chaos

An e-commerce company wanted to test its recommendation model by rolling it out to 10% of users. But they didn’t anticipate:

- Handling different user cohorts.

- Merging test results with production data.

- Backlash from users unhappy with inconsistent experiences.

Pro Tip: Use feature flags to manage rollouts. Tools like LaunchDarkly can help you isolate changes, measure impact, and revert quickly if things go south.
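If you are wondering what sits underneath a percentage rollout, here is a minimal sketch of deterministic bucketing by hashing the user ID. It is not LaunchDarkly’s implementation or API. The function names, the flag name, and the 10,000-bucket granularity are assumptions made for illustration.

```python
import hashlib


def in_rollout(user_id: str, flag: str, percent: float) -> bool:
    """Deterministically place a user in or out of a percentage rollout."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    # Map the hash to one of 10,000 buckets (0.01% granularity).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < percent * 100


def recommend(user_id: str) -> str:
    """Pick which model serves this user."""
    # "new-recommender" is a hypothetical flag name.
    if in_rollout(user_id, "new-recommender", percent=10):
        return "candidate_model"
    return "current_model"
```

Two properties matter for the A/B chaos described above. A given user always lands in the same bucket, so their experience stays consistent across sessions. And because the bucket is fixed, raising the percentage only adds users and setting it to 0 reverts everyone at once. Including the flag name in the hash keeps different experiments from always picking the same 10% of users.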

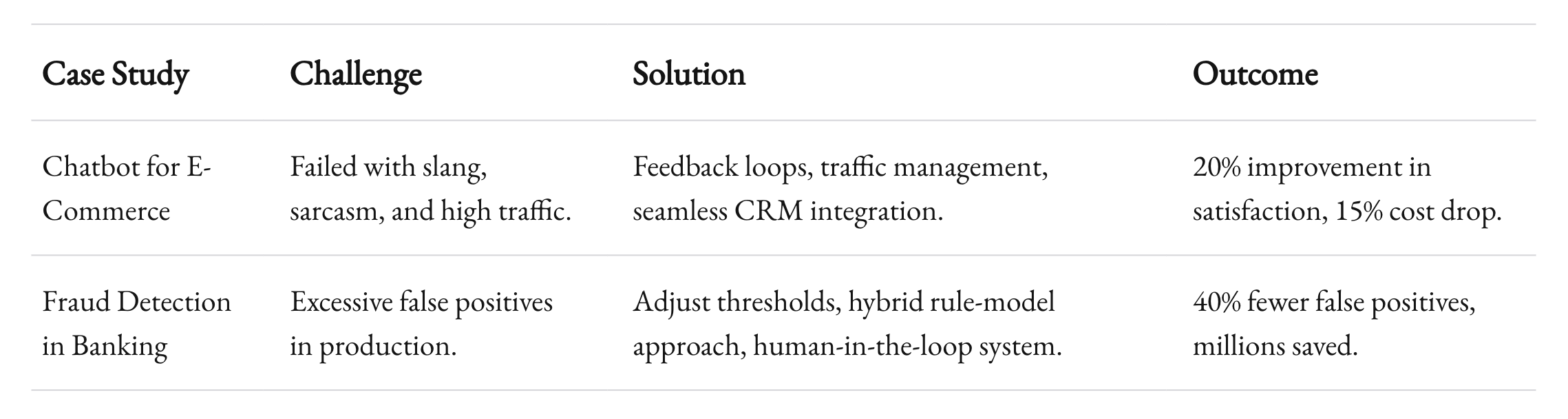

Case Studies That Get Real

Case Study 1: Scaling a Chatbot for E-Commerce

Challenge: A mid-sized retailer built a chatbot to handle FAQs. During testing, it worked great with scripted queries. But in production, it buckled under:

- High traffic during seasonal sales.

- Customer queries with slang, sarcasm, or frustration.

- Integration issues with the company’s CRM.

Solution:

- Traffic Management: Introduced rate-limiting and load balancing.

- Continuous Learning: Implemented a feedback loop where customer queries fed into the training pipeline.

- Seamless Integration: Partnered with CRM experts to ensure the chatbot could pull and push data reliably.

Result: Customer satisfaction scores improved by 20%, and support costs dropped by 15% in the first quarter.
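The rate-limiting piece of that solution can be sketched with a token bucket, one common way to absorb short bursts while capping sustained load. This is an illustrative in-process version, not the retailer’s actual setup. The class name, parameters, and the injectable clock are our own assumptions, and a production deployment would usually enforce limits at a gateway or in a shared store.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(
        self,
        rate: float,
        capacity: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.rate = rate
        self.capacity = capacity
        self._clock = clock  # injectable so the limiter can be tested
        self._tokens = capacity
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and spend tokens if the request may proceed."""
        now = self._clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


# Usage: 5 chatbot requests per second per customer, bursts of up to 10.
limiter = TokenBucket(rate=5, capacity=10)
if not limiter.allow():
    pass  # e.g. reply "please wait a moment" instead of calling the model
```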

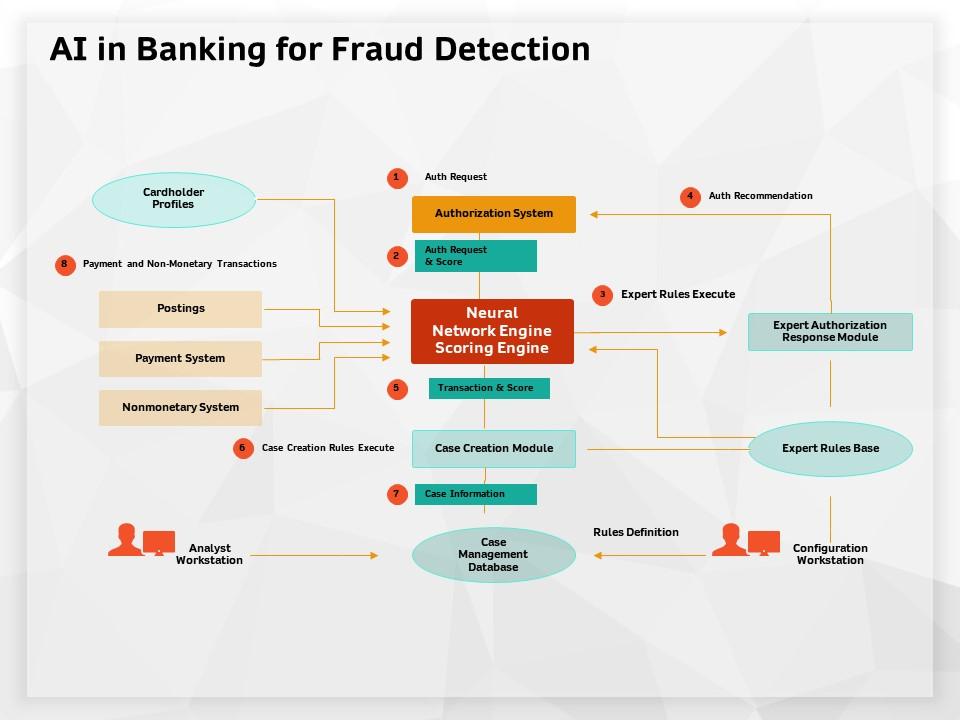

Case Study 2: Fraud Detection in Banking

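Problem: A bank deployed an AI model to flag fraudulent transactions. It had high accuracy during tests but flooded human reviewers with false positives in production. That is a common trap when fraud is rare: a model can score high accuracy while most of its alerts are still false alarms.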

Fix:

- Threshold Tuning: Adjusted confidence thresholds based on business impact.

- Hybrid Approach: Combined the model with rule-based heuristics to catch obvious fraud.

- Human-in-the-Loop: Introduced an escalation system for edge cases.

Outcome: False positives decreased by 40%, saving the bank millions in operational costs.
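Here is a rough sketch of what threshold tuning "based on business impact" can look like: pick the cutoff that minimizes total cost on a labeled validation set, then route borderline scores to a human. The cost figures, function names, and score bands are hypothetical, and this is not the bank’s actual method.

```python
from typing import Sequence


def total_cost(
    scores: Sequence[float],
    is_fraud: Sequence[bool],
    threshold: float,
    fp_cost: float,
    fn_cost: float,
) -> float:
    """Cost of flagging every transaction whose score is >= threshold."""
    cost = 0.0
    for score, fraud in zip(scores, is_fraud):
        flagged = score >= threshold
        if flagged and not fraud:
            cost += fp_cost  # a reviewer wasted time on a legitimate payment
        elif not flagged and fraud:
            cost += fn_cost  # fraud slipped through
    return cost


def best_threshold(
    scores: Sequence[float],
    is_fraud: Sequence[bool],
    fp_cost: float,
    fn_cost: float,
) -> float:
    """Try each observed score (plus 'flag nothing') and keep the cheapest."""
    candidates = sorted(set(scores)) + [float("inf")]
    return min(
        candidates,
        key=lambda t: total_cost(scores, is_fraud, t, fp_cost, fn_cost),
    )


def triage(score: float, review_at: float, block_at: float) -> str:
    """Human-in-the-loop routing: only the uncertain middle band is escalated."""
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "review"
    return "allow"


# Hypothetical validation data: a false alarm costs 5, missed fraud costs 200.
scores = [0.1, 0.4, 0.35, 0.8, 0.9, 0.6]
labels = [False, False, False, True, True, False]
cutoff = best_threshold(scores, labels, fp_cost=5, fn_cost=200)
```

The point of framing it as cost rather than accuracy is that the two error types are rarely worth the same. Once someone in the business puts numbers on them, the threshold stops being a matter of opinion.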

Practical Tips for Success

- Start Small: Pilot your AI with a limited user base. Use this phase to uncover unexpected issues.

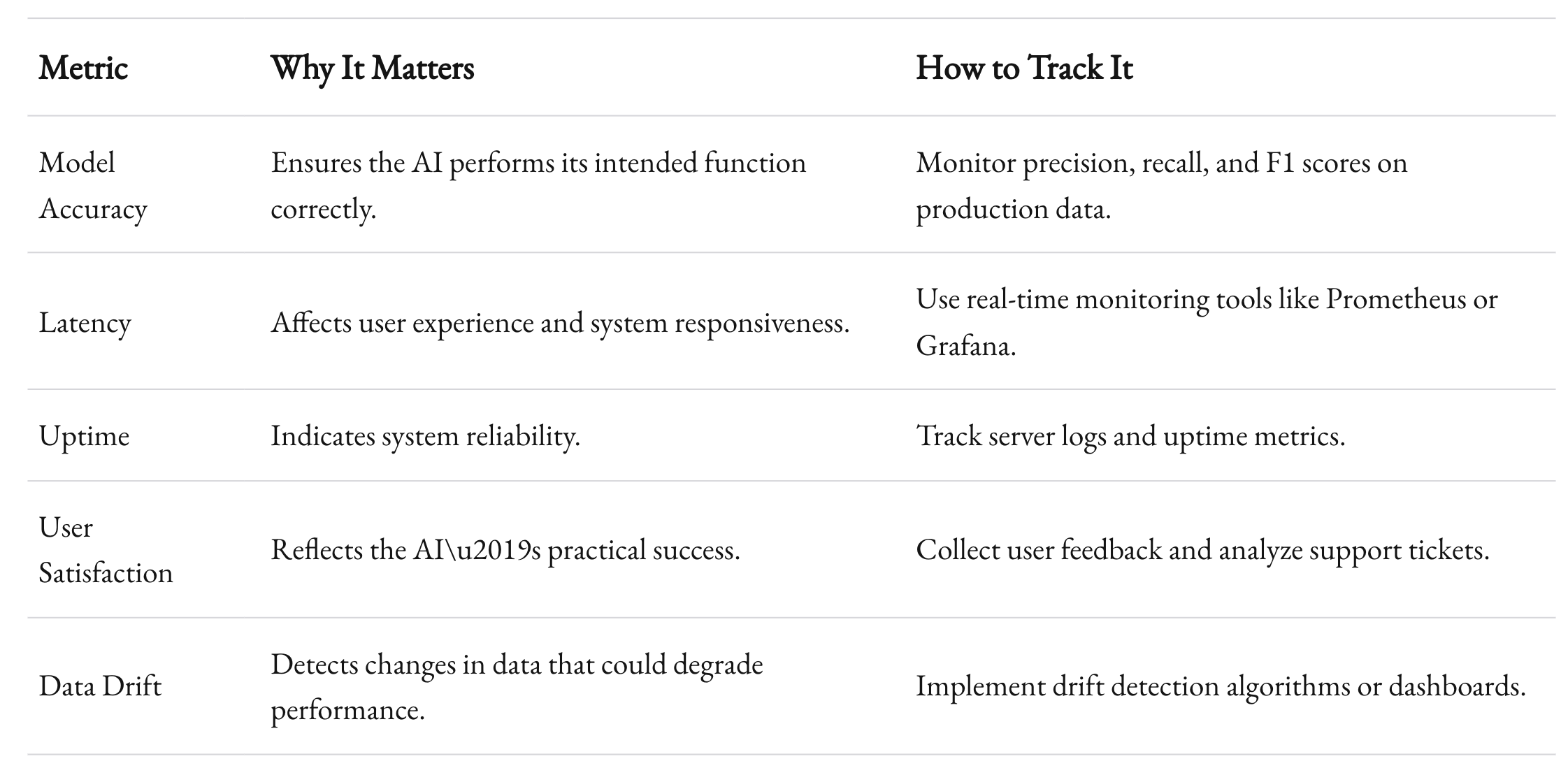

- Obsess Over Metrics: Track more than just accuracy. Monitor latency, uptime, user satisfaction, and business KPIs.

- Document Everything: From model parameters to deployment workflows, keep a record. It’s invaluable for debugging and audits.

- Build for Iteration: Production AI isn’t static. Design your systems to support regular updates and retraining.

- Invest in Monitoring: Use tools like Prometheus, Grafana, or custom dashboards to keep tabs on performance and anomalies in real time.
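As a taste of what "more than just accuracy" means in code, here is a small sketch that tracks 95th-percentile latency over a rolling window and reports when it exceeds a budget. In practice you would export these numbers to something like Prometheus and alert from there. The class name, window size, and 300 ms budget are illustrative assumptions.

```python
from collections import deque
from statistics import quantiles


class LatencyMonitor:
    """Keep the most recent latencies and compare their p95 to a budget."""

    def __init__(self, window: int = 1000, p95_budget_ms: float = 300.0) -> None:
        self._samples: deque[float] = deque(maxlen=window)
        self.p95_budget_ms = p95_budget_ms

    def record(self, latency_ms: float) -> None:
        """Add one observation; the oldest is dropped once the window is full."""
        self._samples.append(latency_ms)

    def p95(self) -> float:
        if len(self._samples) < 2:
            raise ValueError("need at least two samples")
        # n=20 yields 19 cut points; the last one is the 95th percentile.
        return quantiles(self._samples, n=20, method="inclusive")[-1]

    def breached(self) -> bool:
        """True when there is enough data and p95 is over budget."""
        return len(self._samples) >= 2 and self.p95() > self.p95_budget_ms


# Usage: record each request's latency, then check before or after serving.
monitor = LatencyMonitor(window=500, p95_budget_ms=300.0)
for latency in (120.0, 95.0, 310.0, 140.0):
    monitor.record(latency)
slow = monitor.breached()
```

Tracking a percentile rather than the average is deliberate: averages hide the slow tail, and the slow tail is what users remember.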

Wrapping Up

Moving AI from prototype to production isn’t a straight line. It’s messy, iterative, and often frustrating. But it’s also where the real impact happens.

If there’s one takeaway, it’s this: Success isn’t about avoiding challenges. It’s about anticipating them and having the right systems, people, and processes in place to tackle them.

At 1985, we live for this challenge. Whether it’s scaling chatbots, optimizing fraud detection, or deploying cutting-edge computer vision models, we know what it takes to bridge the gap between promise and production. And we’re here to help.

What’s been your biggest challenge in taking AI to production? Share it in the comments or reach out to us directly. Because the more we share, the better we all get.