AI Agents for Automated Code Review and Testing: A Deep Dive

Learn how AI tools are transforming software development, boosting efficiency and code quality every day.

Every line of code matters. Every bug costs time and money. As the founder of 1985, an outsourced software development company, I have seen firsthand how technology transforms workflows. One of the most exciting developments today is the emergence of AI agents that automate code review and testing. This blog post is a deep dive into that world. It’s a blend of personal insights, hard data, and practical tips drawn from years of industry experience. The aim is to give you an intelligent yet conversational guide to understanding and using AI agents in our daily codecraft.

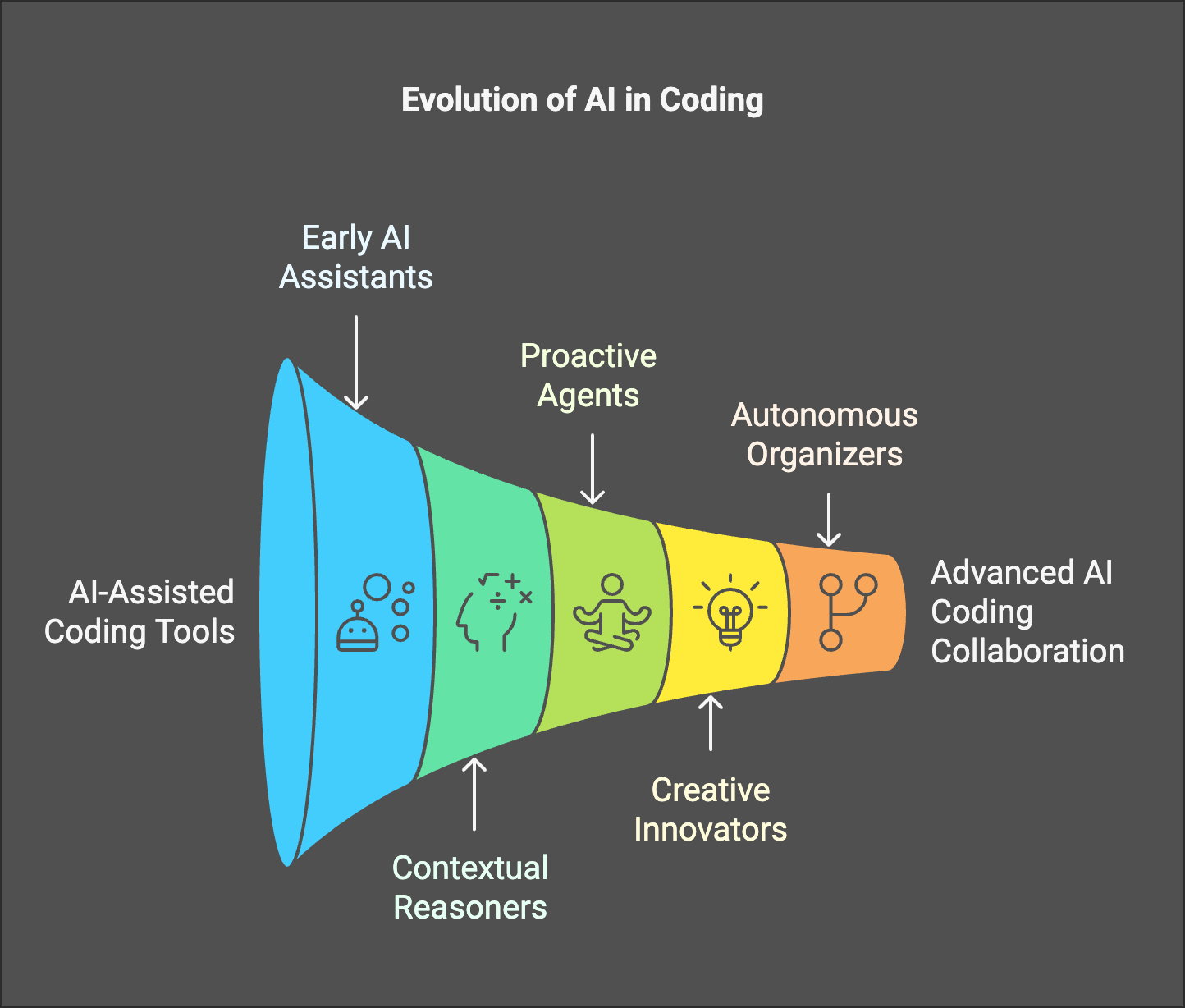

The Evolution of Code Review and Testing

A New Era in Software Quality

Software development has come a long way from the days of manual, error-prone reviews. There was a time when developers relied solely on peer reviews and sporadic testing cycles. These practices, while effective in their era, now struggle to keep pace with modern agile environments. Today, speed is king. Many teams push code to production several times a day. Every delay is costly. This is where AI steps in.

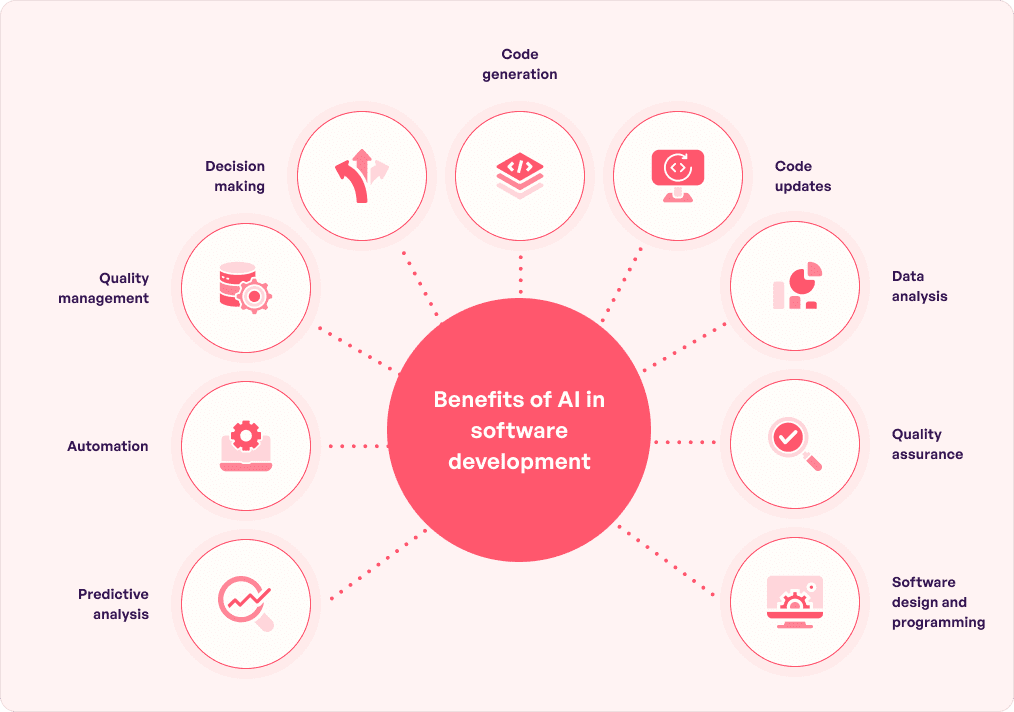

AI agents bring a new dimension to the process. They work tirelessly. They catch issues that human eyes might miss. Their algorithms learn from past errors. They improve with every iteration. These agents are not here to replace human insight but to enhance it.

At 1985, we have integrated AI into our workflow. The shift has been dramatic. Our team now spends less time on repetitive checks and more on strategic problem-solving. This has led to better code quality and shorter release cycles. It is not magic. It is data-driven efficiency, powered by AI.

From Reactive to Proactive Quality Control

Traditional code reviews are often reactive. They fix issues after the fact. AI agents change this paradigm. They move us from reactive to proactive quality control. Instead of waiting for a bug to slip into production, AI agents analyze code as it is written, catching issues early.

Consider the classic scenario: a developer writes a block of code. Traditionally, it would sit in a pull request, awaiting human review. Hours might pass. Meanwhile, an unnoticed bug could snowball into a major issue. With AI agents, the process is nearly instantaneous. They scan code for vulnerabilities, performance issues, and adherence to best practices in real-time. This early detection is invaluable. It ensures that potential problems are nipped in the bud before they escalate into critical failures.
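To make the idea of scanning code as it is written more concrete, here is a minimal sketch using Python's standard `ast` module. It is not how any particular AI agent works. The three rules and the `scan_source` helper are assumptions chosen for illustration, and a real agent would combine many more checks with learned models.

```python
import ast

# Hypothetical rule set for illustration; real tools apply far richer checks.
RISKY_CALLS = {"eval", "exec"}


def scan_source(source: str) -> list[tuple[int, str]]:
    """Return sorted (line, message) findings for a few well-known smells."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # eval/exec on arbitrary input is a common security risk.
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id in RISKY_CALLS):
            findings.append((node.lineno, f"avoid {node.func.id}()"))
        # A bare `except:` swallows every error, including real bugs.
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append((node.lineno, "bare except hides errors"))
        # Mutable default arguments are shared between calls.
        elif isinstance(node, ast.FunctionDef):
            for default in node.args.defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    findings.append(
                        (node.lineno, f"mutable default argument in {node.name}()")
                    )
    return sorted(findings)


# A small snippet with three deliberate problems.
SAMPLE = '''
def load(path, cache={}):
    try:
        return eval(open(path).read())
    except:
        return None
'''

for line, message in scan_source(SAMPLE):
    print(f"line {line}: {message}")
```

Run against the sample, this reports the mutable default on line 2, the `eval` call on line 4, and the bare `except` on line 5. A check like this can run on every save or commit, which is the "early detection" described above in its simplest form.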

At 1985, our teams have embraced this proactive approach. Our clients have noticed fewer production bugs and faster release times. The evolution from reactive to proactive quality control is not just an incremental improvement. It’s a paradigm shift that drives operational excellence in the fast-paced world of software development.

![Popular AI Agents for Devs: Chatdev, SWE-Agent & Devin [Example Project]](https://cdn-blog.scalablepath.com/uploads/2024/10/ai-agents-overview.png)

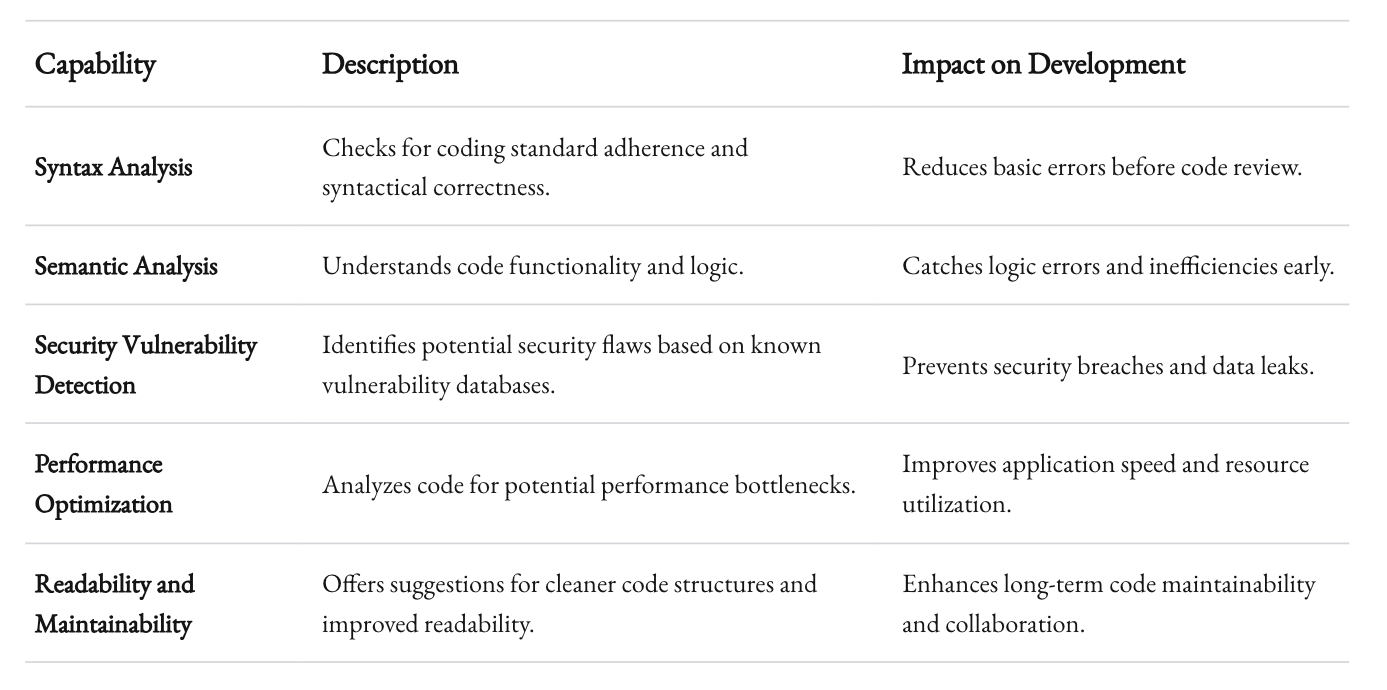

AI Agents in Code Review

How AI Agents Analyze Code

AI agents are built on machine learning algorithms. They are designed to understand patterns, detect anomalies, and predict outcomes. Their role in code review is multifaceted. They analyze syntax, semantics, and even stylistic consistency. Their feedback is short and direct, which keeps the review process crisp and effective.

The core strength of these agents lies in their ability to learn from vast amounts of code. They have been trained on millions of lines of open-source code, learning best practices and common pitfalls. Their algorithms compare new code against these standards. If something looks off, they flag it. They do this with speed and consistency that no human can match.

Imagine having a tireless code reviewer that works 24/7. That’s the promise of AI agents. They work seamlessly alongside human reviewers, acting as a first line of defense. They catch syntax errors, potential bugs, and even security vulnerabilities before the code reaches production. This collaborative process has redefined code quality assurance at 1985.

The Nuances of AI-Driven Code Reviews

Not all code reviews are created equal. Traditional methods often miss context. AI agents, however, excel in spotting patterns that may not be obvious at first glance. They don’t just look for errors; they analyze code structure, variable naming conventions, and logic flow. This level of analysis is particularly crucial in large-scale projects where minor inconsistencies can lead to major issues later.

Consider the challenges in detecting edge-case vulnerabilities. AI agents can simulate multiple scenarios based on historical data. They identify patterns that might lead to security breaches or performance bottlenecks. Their recommendations are data-driven and context-aware. At 1985, we have used these insights to refine our code review process, ensuring that every line of code is optimized for efficiency and security.

These agents can also provide feedback on code readability and maintainability. They offer suggestions that go beyond the surface-level syntax corrections. For example, an AI agent might recommend refactoring a function for better modularity or suggest alternative algorithms for improved performance. Such nuanced insights are invaluable for developers who strive for clean, efficient code.
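As a rough illustration of a maintainability suggestion, the sketch below counts branching constructs per function and flags functions that exceed a threshold. The `BRANCH_NODES` set, the threshold of 3, and the helper names are assumptions for this example. An AI agent would weigh far more signals than a simple count.

```python
import ast

# Constructs counted as branches in this simplified heuristic (an assumption).
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.Try, ast.BoolOp)


def branch_count(func: ast.FunctionDef) -> int:
    """Count branching constructs anywhere inside a function body."""
    return sum(isinstance(node, BRANCH_NODES) for node in ast.walk(func))


def refactor_candidates(source: str, max_branches: int = 3) -> list[str]:
    """List functions whose branch count exceeds the chosen threshold."""
    tree = ast.parse(source)
    return [
        f"{node.name}: {branch_count(node)} branches, consider splitting"
        for node in ast.walk(tree)
        if isinstance(node, ast.FunctionDef) and branch_count(node) > max_branches
    ]


SAMPLE = '''
def simple(x):
    if x:
        return 1
    return 0

def tangled(items, flag):
    for item in items:
        if item and flag:
            while item > 0:
                item -= 1
        elif flag:
            pass
    return items
'''

print(refactor_candidates(SAMPLE))
```

Here `simple` passes with a single branch, while `tangled` is flagged with five (the loop, two `if` tests, the `and`, and the `while`). The threshold is a team decision, not a universal rule.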

AI Code Review Capabilities

The table above illustrates just a fraction of the sophisticated capabilities that AI agents bring to the table. Each capability is honed through rigorous training and continuous updates, ensuring that the AI stays ahead of emerging challenges in code quality.

Automated Testing: The AI Advantage

Bridging the Gap Between Code and Quality

Testing has always been the backbone of robust software. In the past, it was a cumbersome, manual process. Testers would write hundreds of test cases, manually checking each functionality. This approach, while effective, is not scalable in today’s fast-paced development environment. Enter AI-powered automated testing.

AI agents revolutionize testing by integrating seamlessly into the development pipeline. They write, execute, and update test cases with minimal human intervention. The pace of testing is accelerated. Bugs are detected in real-time. The result is a dramatic reduction in the time it takes to move from development to deployment.

At 1985, we have seen automated testing streamline our workflows significantly. Our developers are freed from repetitive manual testing tasks. They can now focus on innovation and solving complex problems. The shift to automated testing has not only increased our productivity but also enhanced our code quality. It’s a win-win situation.

How AI Enhances Testing Protocols

AI in testing is not just about automation—it’s about smart automation. These agents analyze code changes and determine which test cases are most relevant. They prioritize tests based on risk assessment and historical data. This intelligent approach minimizes unnecessary test runs while ensuring critical functionalities are thoroughly verified.

Consider a scenario where a minor change in code could potentially have a ripple effect. Traditional testing might run every test suite regardless of impact. AI agents, however, identify the impacted areas. They run targeted tests that are more likely to catch issues. This saves time and computational resources, allowing for more frequent releases without compromising quality.
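The targeted-test idea can be sketched in a few lines. This example assumes you already have a mapping from each test to the source files it exercises, plus a historical failure rate per test. The `CoverageRecord` type, the file names, and the numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoverageRecord:
    name: str                 # test identifier
    covers: frozenset[str]    # source files the test exercises
    failure_rate: float       # share of past runs that failed, 0.0 to 1.0


def select_tests(changed: set[str], tests: list[CoverageRecord]) -> list[str]:
    """Keep only tests touching a changed file, riskiest first."""
    impacted = [t for t in tests if t.covers & changed]
    # Sort by descending failure rate; break ties by name for stable output.
    impacted.sort(key=lambda t: (-t.failure_rate, t.name))
    return [t.name for t in impacted]


# Hypothetical test suite and history.
SUITE = [
    CoverageRecord("test_login", frozenset({"auth.py"}), 0.10),
    CoverageRecord("test_checkout", frozenset({"cart.py", "payment.py"}), 0.30),
    CoverageRecord("test_profile", frozenset({"auth.py", "profile.py"}), 0.25),
    CoverageRecord("test_search", frozenset({"search.py"}), 0.05),
]

print(select_tests({"auth.py"}, SUITE))
```

A change to `auth.py` selects only `test_profile` and `test_login`, in that order, and skips the other two. In practice, the coverage mapping usually comes from a coverage tool, and teams often still run the full suite on a schedule as a safety net.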

Moreover, AI agents continuously learn from test results. They adjust their algorithms based on the success or failure of previous test cases. This iterative learning process means that over time, the agents become more adept at predicting potential failure points. At 1985, this learning curve has allowed us to maintain a high standard of quality, even as our codebases grow in complexity.
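One simple way to picture "learning from test results" is an exponentially weighted failure rate that is updated after every run. This stands in for whatever model a given tool actually uses. The smoothing factor `alpha` and the function names are assumptions for the sketch.

```python
def update_failure_rate(previous: float, failed: bool, alpha: float = 0.2) -> float:
    """Blend the newest result into the running failure rate.

    A higher alpha makes the estimate react faster to recent runs.
    """
    observation = 1.0 if failed else 0.0
    return (1 - alpha) * previous + alpha * observation


def replay(history: list[bool], start: float = 0.0, alpha: float = 0.2) -> float:
    """Apply the update for each past run, oldest first."""
    rate = start
    for failed in history:
        rate = update_failure_rate(rate, failed, alpha)
    return rate


# Three runs: fail, pass, fail.
print(round(replay([True, False, True]), 3))
```

With the default `alpha`, the estimate moves from 0.0 to 0.2, then 0.16, then 0.328. A rate maintained this way can be used to decide which tests run first, so recent failures raise a test's priority quickly.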

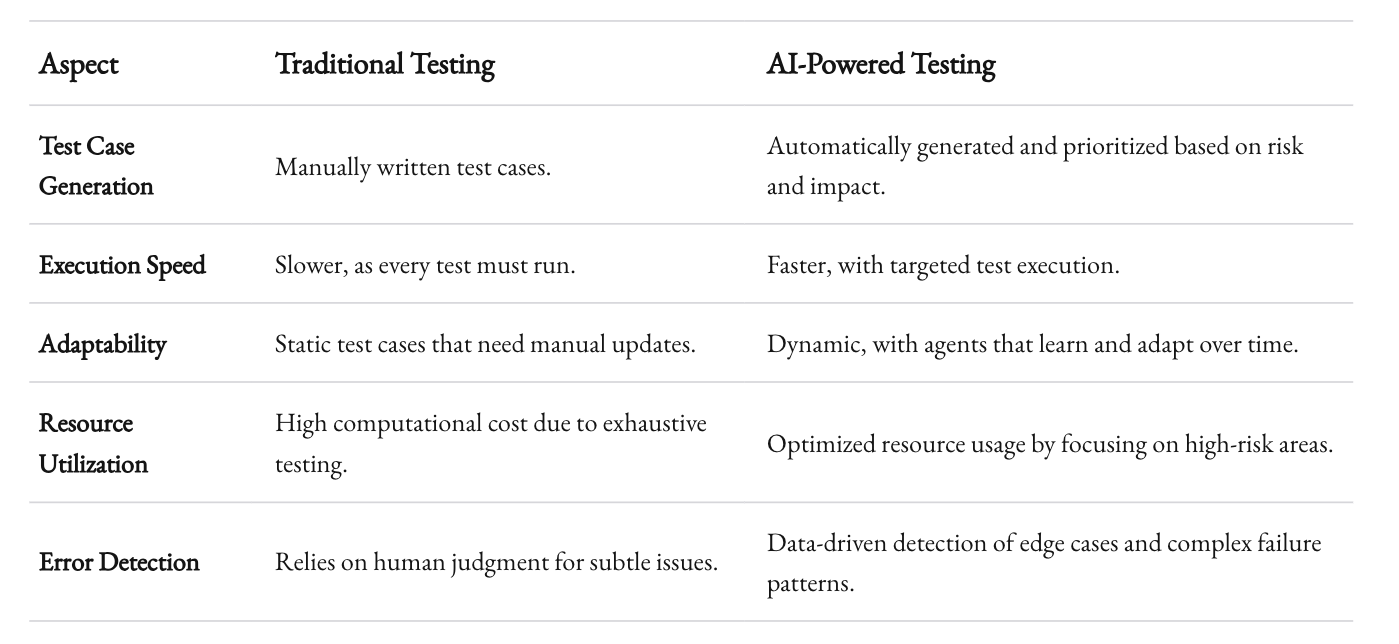

Comparison of Testing Approaches

This table encapsulates the efficiency gains and strategic advantages that AI brings to automated testing. The shift from traditional to AI-powered testing is not just a technological upgrade; it’s a rethinking of how we ensure software reliability and performance.

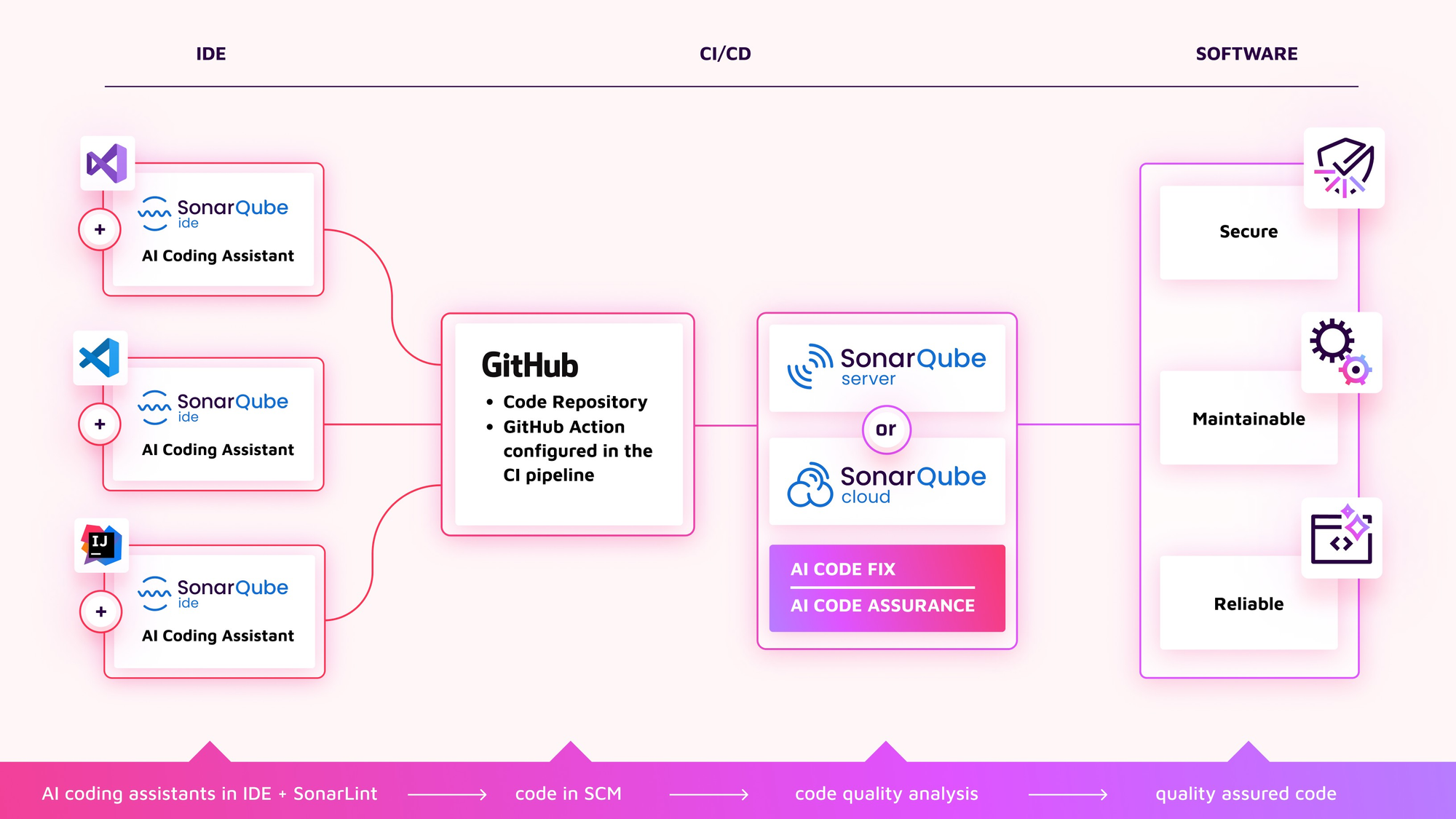

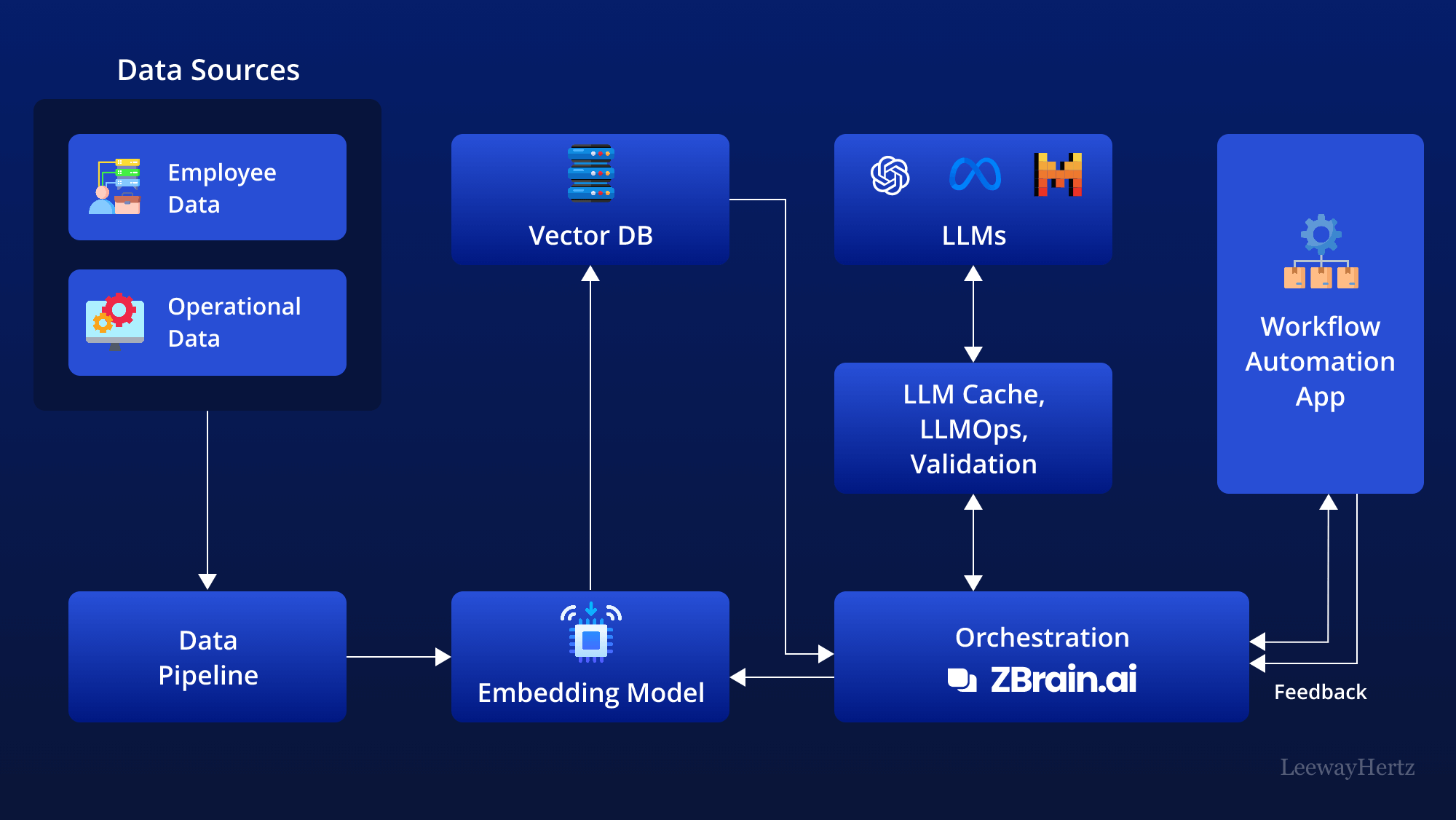

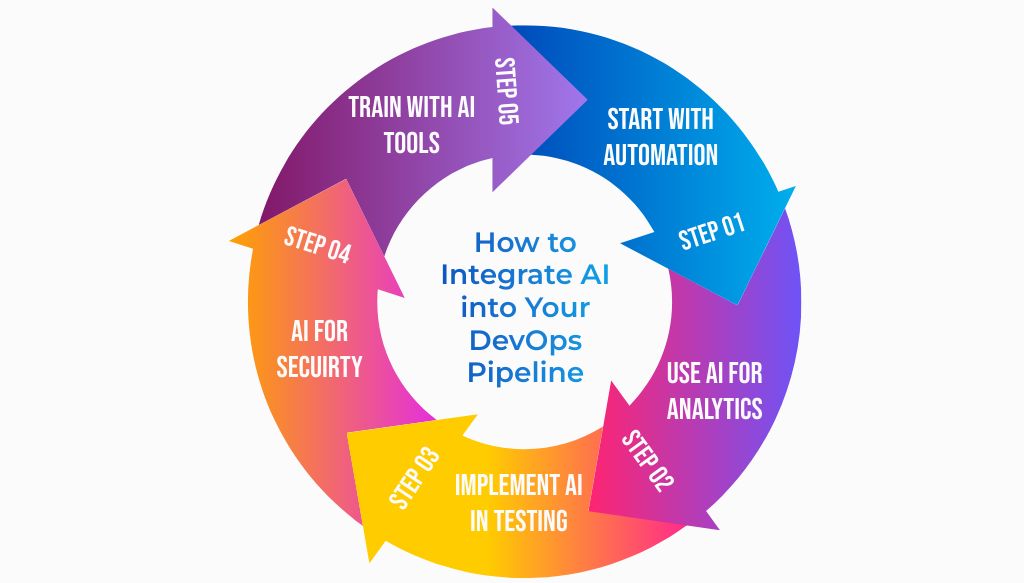

Integrating AI Agents into Your Workflow

Getting Started with AI-Driven Tools

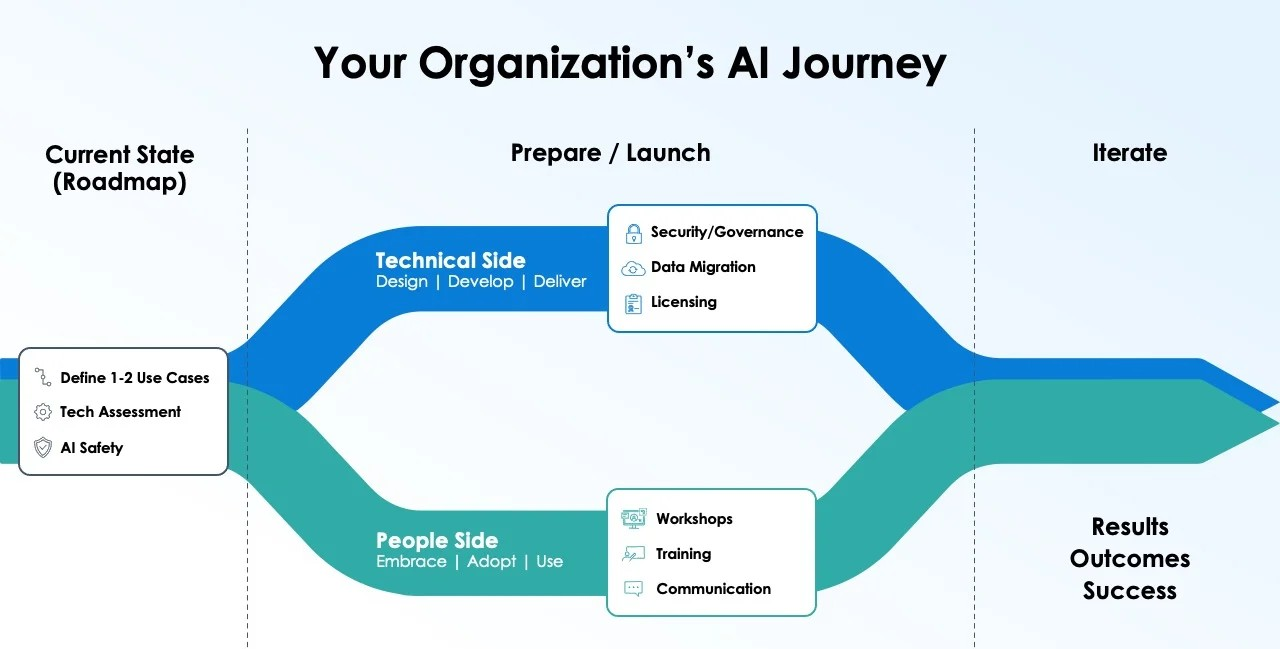

Implementing AI agents in your development pipeline may seem daunting at first. But the journey from skepticism to full adoption is surprisingly straightforward. It begins with understanding the specific needs of your projects and identifying the right tools that align with those needs.

At 1985, we started small. We integrated AI agents into our code review process as a pilot project. The initial results were promising. We observed a reduction in code errors and an increase in developer productivity. Once we saw the tangible benefits, we expanded the AI’s role to include automated testing. Today, AI is an integral part of our workflow.

Start by evaluating your current processes. Identify bottlenecks where errors frequently occur. Look for repetitive tasks that consume valuable time. Once you have a clear picture, research AI tools that cater to these specific challenges. Many vendors offer trial versions or proof-of-concept projects. Leverage these opportunities to test the waters before a full-scale rollout.

Best Practices for a Smooth Transition

A successful integration of AI agents requires a cultural shift. Your team must be on board with the new technology. Training and communication are key. Developers need to understand that AI is a tool for empowerment, not a threat to their expertise. At 1985, we held regular workshops and training sessions to demystify AI and highlight its benefits.

It is important to define clear metrics for success. What does success look like? Fewer bugs? Faster release cycles? Better code quality? Establish these metrics upfront. Then, use them to measure the impact of AI integration. Transparency in these metrics builds trust and encourages continuous improvement.

A phased approach is usually best. Begin with one aspect of your workflow—say, code review—and monitor the results. Once your team sees the improvements, gradually expand to other areas like testing and deployment. Maintain a feedback loop. Encourage your team to share insights and suggest improvements. This iterative process will ensure that your AI implementation evolves to meet the changing needs of your projects.

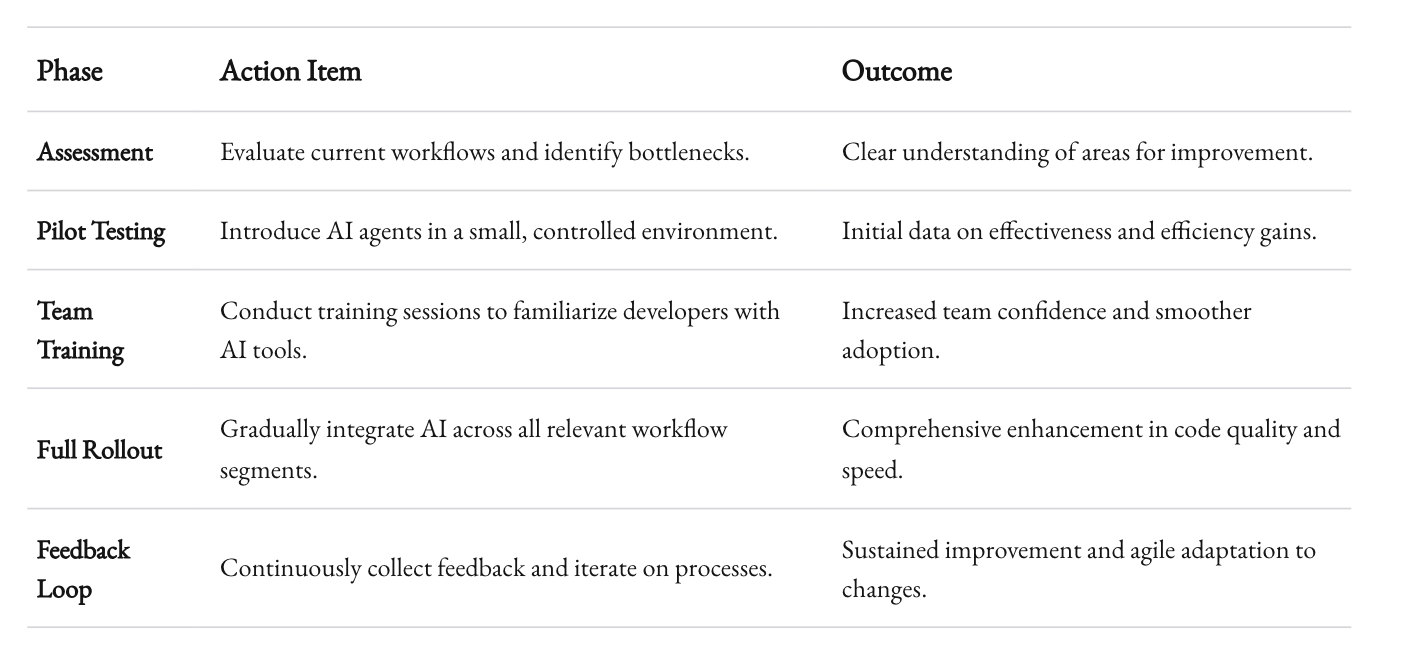

Roadmap for AI Integration

This roadmap serves as a guide for organizations looking to embrace AI agents. It is based on real-world experience and proven strategies that have worked for us at 1985. Each phase is critical, and skipping steps can lead to missed opportunities for optimization.

The Business Case for AI Agents

Financial and Operational Benefits

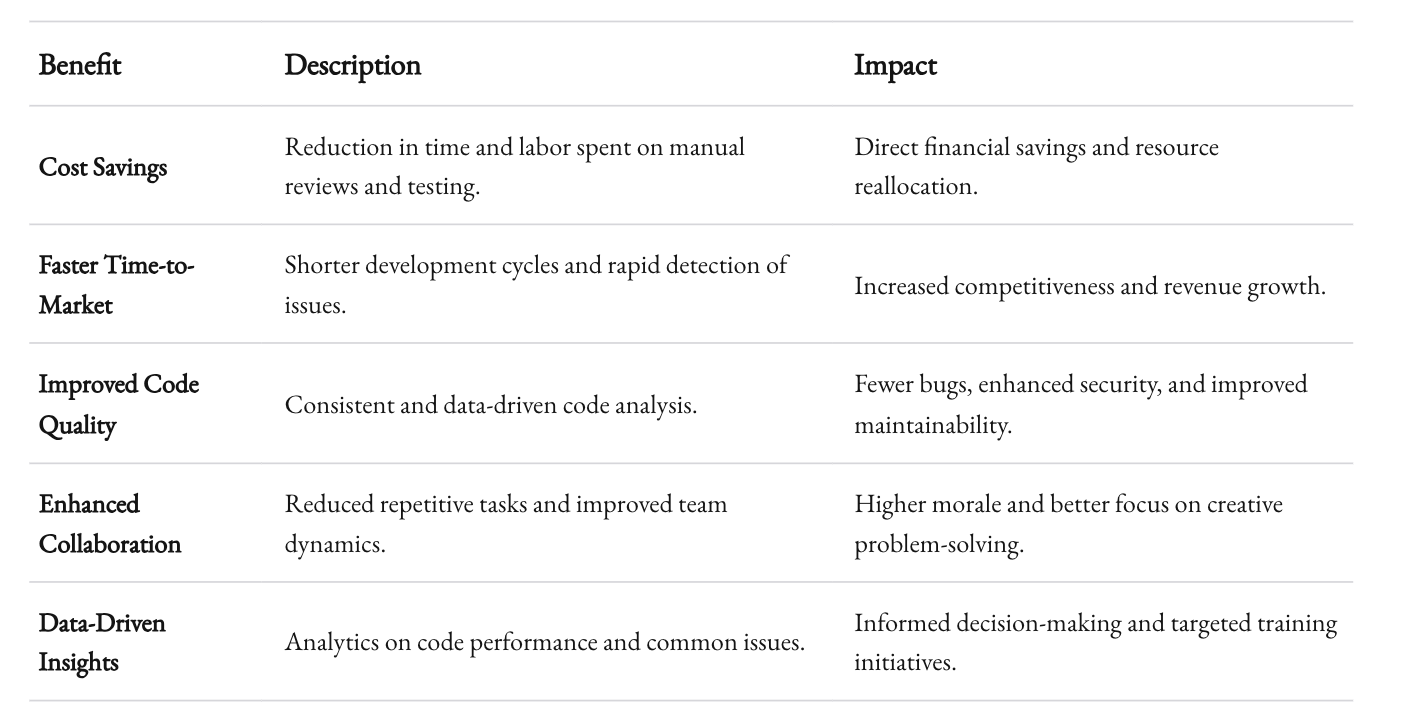

Integrating AI agents into automated code review and testing is not just a technological upgrade—it’s a strategic business decision. There is a clear financial incentive. Fewer bugs and faster development cycles translate directly into cost savings and revenue growth. Companies can release products faster, adapt to market changes, and ultimately stay ahead of the competition.

At 1985, our clients have witnessed a remarkable improvement in project turnaround times. Reduced manual intervention means lower overhead. The savings on time and labor are significant. Moreover, the reduction in post-release bug fixes saves additional resources. According to industry studies, up to 30% of project time can be wasted on bug fixes and manual testing. AI agents cut through this inefficiency, freeing teams to focus on innovation.

Operationally, the benefits are equally compelling. AI agents provide a consistent, objective assessment of code quality. They eliminate the variability inherent in human reviews. This consistency builds a reliable baseline for continuous improvement. The data-driven insights from AI can also inform strategic decisions. For example, analytics on recurring code issues can lead to better training programs for developers or prompt revisions to coding standards.

Enhancing Team Collaboration and Morale

Beyond financial savings and operational efficiency, AI agents have a positive impact on team dynamics. Developers often face the tedium of repetitive tasks and the pressure of last-minute bug fixes. AI takes over these burdens, allowing human talent to focus on creative and challenging problems. This shift can boost morale and enhance collaboration within teams.

When teams at 1985 see the benefits of AI, skepticism quickly turns to enthusiasm. Developers appreciate having a tool that supports them in catching subtle errors and improving code readability. This not only leads to better code but also fosters a culture of continuous learning and improvement. Teams are more agile, responsive, and ultimately more competitive in the market.

Business Benefits of AI Agents

The table above outlines the multifaceted benefits of adopting AI agents for code review and testing. These benefits are not merely theoretical. They are backed by data and real-world success stories from the software development industry.

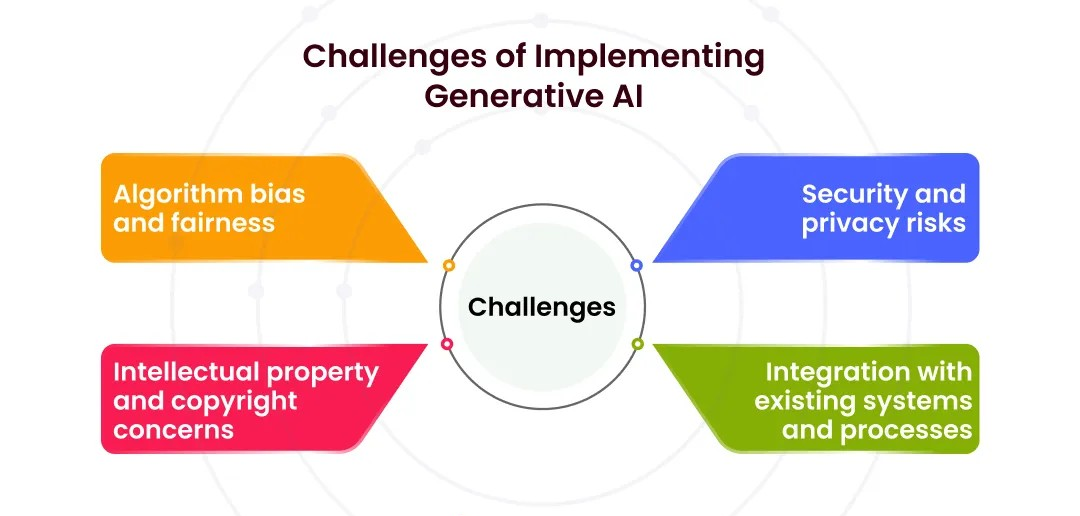

Challenges and Considerations

Navigating the Learning Curve

Adopting any new technology comes with challenges. AI agents are no exception. There is a learning curve. Developers must learn to interpret AI feedback and integrate it into their workflow. The initial phase may require more effort than expected. However, the long-term benefits far outweigh the short-term disruptions.

In our experience at 1985, the key challenge was not the technology itself but the mindset shift required. Some team members were initially resistant to the idea of an automated system reviewing their code. The solution was clear communication and demonstrable results. Once the team saw how AI could catch issues that human reviews had overlooked, acceptance grew. Training sessions, pair programming with AI insights, and transparent metrics helped smooth the transition.

Balancing Automation with Human Oversight

Automation is powerful. But it is not infallible. There is always a need for human oversight. AI agents are excellent at detecting patterns and anomalies. They are not as adept at understanding context, especially in complex, innovative code scenarios where human intuition plays a crucial role. It is essential to strike a balance. AI should be seen as a partner rather than a replacement for human expertise.

At 1985, we maintain a hybrid review model. The AI handles the heavy lifting of routine checks and flagging potential issues. Human reviewers then focus on higher-order concerns like architectural integrity and innovative problem-solving. This balance ensures that the efficiency of automation does not come at the expense of creativity and nuanced judgment. It is this symbiotic relationship that drives the best outcomes in our projects.
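A hybrid model like this comes down to a routing rule. The sketch below is one possible version, not a description of our internal tooling. The `Finding` type, the `ROUTINE` categories, and the 0.8 confidence cutoff are illustrative assumptions.

```python
from dataclasses import dataclass

# Categories treated as routine in this sketch (an assumption).
ROUTINE = {"style", "syntax", "unused-import"}


@dataclass
class Finding:
    category: str
    message: str
    confidence: float  # tool's own confidence, 0.0 to 1.0


def triage(findings: list[Finding], min_confidence: float = 0.8):
    """Split findings into (handled automatically, sent to a human reviewer)."""
    auto, human = [], []
    for finding in findings:
        # Only routine, high-confidence findings skip human review.
        if finding.category in ROUTINE and finding.confidence >= min_confidence:
            auto.append(finding)
        else:
            human.append(finding)
    return auto, human


FINDINGS = [
    Finding("style", "line too long", 0.95),
    Finding("security", "possible SQL injection", 0.99),
    Finding("style", "unclear variable name", 0.50),
]

auto, human = triage(FINDINGS)
print([f.message for f in auto])
print([f.message for f in human])
```

Only the high-confidence style issue is handled automatically. The security finding and the uncertain naming suggestion both go to a person, which keeps judgment calls with human reviewers.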

Ethical and Security Considerations

With great power comes great responsibility. AI agents, while incredibly powerful, also raise questions about data security and ethical implications. These systems often require access to codebases that may contain sensitive or proprietary information. Ensuring that these agents operate in a secure and ethical manner is paramount.

We take security seriously at 1985. Our AI tools are deployed with strict access controls and encryption protocols. Additionally, ethical guidelines govern how AI feedback is implemented. Developers are encouraged to critically evaluate AI recommendations rather than blindly accepting them. This thoughtful approach ensures that the benefits of AI are realized without compromising the integrity or security of our projects.

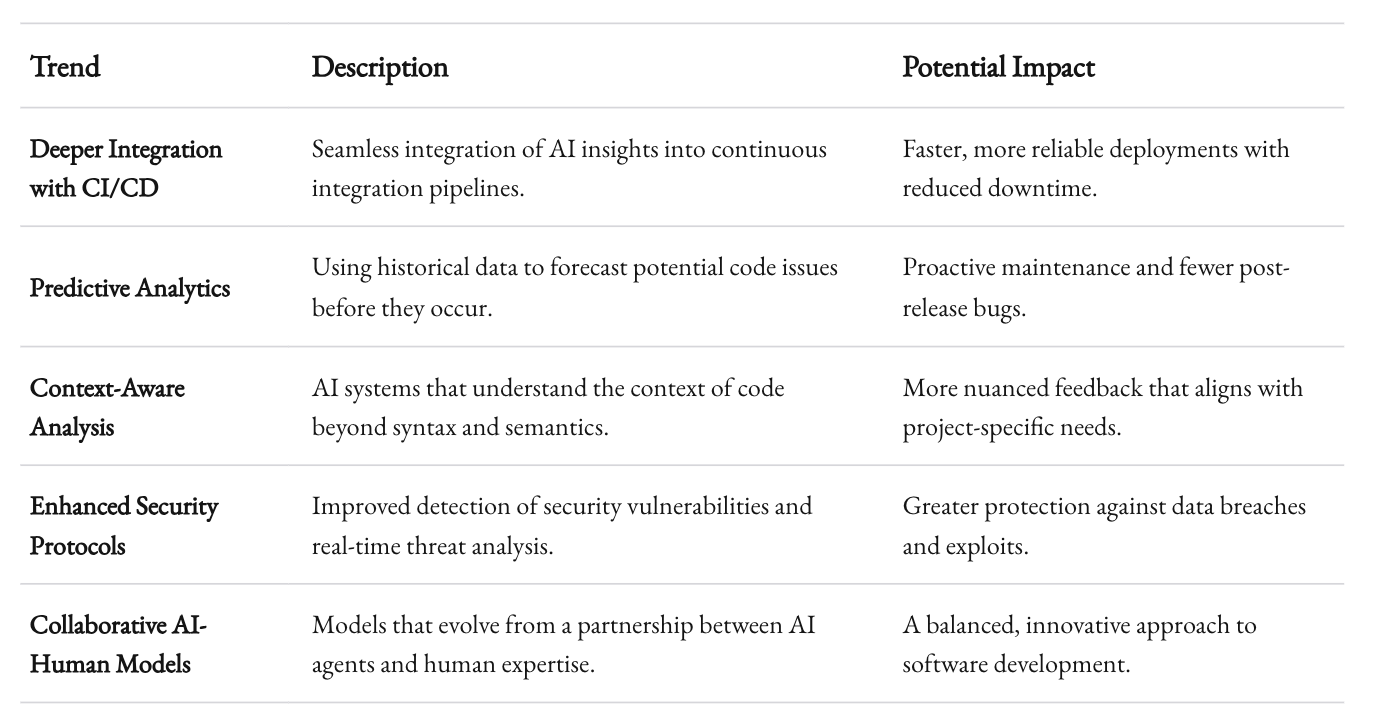

Future Trends in AI for Code Review and Testing

The Road Ahead

The future is bright for AI in software development. Trends indicate that AI agents will become even more sophisticated. They will not only analyze code but also predict potential future issues based on historical patterns and emerging technologies. The integration of AI with DevOps practices is expected to deepen. This means more seamless workflows, where continuous integration and continuous deployment (CI/CD) pipelines are enhanced by real-time AI insights.

At 1985, we are already experimenting with next-generation AI tools. These tools are designed to offer even deeper analysis, such as integrating with real-time monitoring systems to predict and mitigate production issues before they occur. The potential is enormous. The evolution of AI in code review and testing is a testament to how technology can drive both efficiency and innovation in software development.

Embracing Predictive Analytics

One of the most promising areas is predictive analytics. AI agents are increasingly capable of forecasting potential problem areas. They use historical data to predict which modules might be vulnerable to bugs or performance issues. This forward-looking approach is invaluable in complex projects where unforeseen issues can be particularly costly.
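For a feel of how historical data can point to risky modules, here is a deliberately simple heuristic: rank modules by how often they change multiplied by how many of those changes were bug fixes. This is a stand-in for a trained predictive model, and the `rank_hotspots` helper and the sample history are hypothetical.

```python
def rank_hotspots(history: dict[str, tuple[int, int]], top: int = 3) -> list[str]:
    """Rank modules by a churn-times-bug-fix score, highest first.

    `history` maps module name -> (commits touching it, bug-fix commits among them).
    """
    scores = {module: commits * fixes for module, (commits, fixes) in history.items()}
    # Sort by descending score; break ties alphabetically for stable output.
    return sorted(scores, key=lambda module: (-scores[module], module))[:top]


# Hypothetical commit history, for example summarized from version control.
HISTORY = {
    "billing.py": (40, 9),
    "auth.py": (25, 12),
    "utils.py": (60, 1),
    "search.py": (10, 0),
}

print(rank_hotspots(HISTORY, top=2))
```

The sample data puts `billing.py` and `auth.py` at the top, even though `utils.py` changes most often, because its changes are rarely bug fixes. A ranking like this is a prompt for where to schedule refactoring, not a guarantee of where the next bug will appear.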

Predictive analytics in AI is not science fiction. Several industry reports highlight that predictive models have reduced post-release bugs by up to 25% in some organizations. As these models become more accurate, developers can plan proactive maintenance and refactoring sessions, leading to more resilient codebases. This proactive stance transforms how companies approach software quality and maintenance.

Future Trends in AI-Powered Software Development

The table above highlights some of the future trends that are set to redefine software development. These trends not only promise technical improvements but also open new avenues for collaboration between human developers and AI systems.

Practical Tips for Implementation

Start Small and Scale Up

If you are considering integrating AI agents into your workflow, the key is to start small. Begin with a pilot project that addresses a specific pain point in your development cycle. For instance, use an AI agent solely for syntax checking and basic error detection. Monitor the results. Gather feedback from your team. Once the benefits become clear, gradually expand the role of AI in your processes.

At 1985, we began with a limited scope. Our initial pilot focused on automated code reviews. The success of that project paved the way for a broader adoption that included automated testing and even deployment monitoring. The incremental approach allowed us to build trust and understand the nuances of the technology in a controlled environment.

Invest in Training and Continuous Improvement

Introducing AI agents is not a one-off event. It requires ongoing investment in training and process improvement. Organize regular sessions where developers can learn about the latest updates in AI tools and share their experiences. Encourage a culture of experimentation. Provide a safe space for your team to raise concerns and suggest improvements.

Continuous improvement is the name of the game. As AI agents learn from your codebase, they evolve. Their recommendations will become more accurate and tailored to your specific needs. Make sure to integrate this feedback into your development practices. This dynamic interaction between human and machine is where true innovation happens.

Monitor, Measure, and Adapt

No implementation is perfect out of the box. You need to monitor the performance of your AI tools rigorously. Set clear metrics for success. Are you reducing bug counts? Is the development cycle shortening? Use dashboards and regular reviews to track progress. Be prepared to adapt your strategy based on what the data tells you.

At 1985, we have set up detailed monitoring dashboards. These dashboards track key performance indicators such as bug detection rates, code review turnaround times, and test coverage improvements. The data has been invaluable in refining our approach and justifying further investments in AI technologies.
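Two of these indicators are easy to compute from data most teams already have. The sketch below shows one possible definition of each. The function names, the definition of detection rate (bugs caught before release over all bugs found), and the sample numbers are assumptions, so adjust them to match how your team counts.

```python
from datetime import datetime
from statistics import median


def detection_rate(caught_before_release: int, found_after_release: int) -> float:
    """Share of all known bugs that were caught before release."""
    total = caught_before_release + found_after_release
    return caught_before_release / total if total else 0.0


def median_turnaround_hours(reviews: list[tuple[datetime, datetime]]) -> float:
    """Median time from review opened to review closed, in hours.

    Raises statistics.StatisticsError if `reviews` is empty.
    """
    return median(
        (closed - opened).total_seconds() / 3600 for opened, closed in reviews
    )


# Hypothetical review timestamps: 2, 4, and 9 hours long.
REVIEWS = [
    (datetime(2025, 1, 6, 9), datetime(2025, 1, 6, 11)),
    (datetime(2025, 1, 6, 10), datetime(2025, 1, 6, 14)),
    (datetime(2025, 1, 7, 8), datetime(2025, 1, 7, 17)),
]

print(detection_rate(45, 5))
print(median_turnaround_hours(REVIEWS))
```

With these inputs, the detection rate is 0.9 and the median turnaround is 4.0 hours. The median is used rather than the mean so that a single long-running review does not distort the picture.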

Recap

AI agents for automated code review and testing are not just a futuristic vision—they are a reality that is reshaping software development today. They provide a powerful, efficient, and reliable way to enhance code quality, reduce development cycles, and improve overall team productivity. At 1985, our journey with AI has been transformative. We have witnessed firsthand how these technologies can revolutionize the way we work.

The benefits are tangible. The financial savings, operational efficiencies, and improved collaboration all point to a future where AI is an indispensable partner in software development. There are challenges. There are learning curves. But the rewards far outweigh the initial hurdles.

For those looking to stay ahead in the fast-paced world of software development, embracing AI agents is a strategic move. They are not a replacement for human ingenuity but a powerful complement to it. They free us from repetitive tasks, allowing us to focus on creativity and innovation. They ensure that our code is not just functional, but robust, secure, and scalable.

In this journey, the key is balance. Maintain human oversight. Invest in training. Monitor progress and adapt as needed. The future of code review and testing is bright, and with AI as a partner, we are better equipped than ever to meet the challenges of tomorrow.

Thank you for joining me on this exploration. I hope this post has provided you with not just insights, but also practical steps to consider as you integrate AI into your development workflow. The world of automated code review and testing is evolving rapidly. Stay curious, stay informed, and most importantly, embrace the change. The future of software development is here—and it’s intelligent, efficient, and incredibly exciting.